Volume 16, Issue 51 (2023)

J Med Edu Dev 2023, 16(51): 57-64

Ethics code: 08/2022

Mlika M, Dziri C, Jallouli M, Cheikhrouhou S, El Mezni F. Teaching clinical reasoning among undergraduate medical students: A crossover randomized trial. J Med Edu Dev 2023; 16(51): 57-64

URL: http://edujournal.zums.ac.ir/article-1-1898-en.html

1- University of Tunis El Manar. Faculty of Medicine of Tunis. Center of Trauma and major burn. Ben Arous , mouna.mlika@fmt.utm.tn

2- University of Tunis El Manar. Faculty of Medicine of Tunis

The tutor was asked to support the students and help them correct their clinical reasoning or obtain scientific information about certain diseases to enrich their knowledge. At the end of the session, every student was asked to write a structured clinical summary. The tutor gave them a checklist table to help them construct the structured summary. This checklist was inspired by the checklist published by Tabbane C in 2000 (8) (Table 2). Then, the participants debriefed the session with the tutor using a think-aloud strategy. Two learning sessions were scheduled for each group, centered on pulmonary tuberculosis and pleural tuberculosis.

The SNAPPS arm: The SNAPPS session followed the methodology described by Wolpaw et al. in 2003 (6). The tutor was asked to deliver the clinical information to the students in 6 steps. The first step consisted of summarizing the history and physical findings. The second step consisted of narrowing the differential and choosing two to three relevant hypotheses. The third step consisted of analyzing the different hypotheses: the learners initiated a case-focused discussion of the differential diagnoses by comparing the relevant diagnostic possibilities. The fourth step consisted of probing the preceptor and asking about uncertainties; the learners were expected to reveal areas of confusion and knowledge deficits. The fifth step consisted of planning the treatment of the patient. The last step consisted of self-selecting pathologies to study; the learner was encouraged to read about focused, patient-based questions. At the end of every learning session, the students were asked to write a structured clinical summary illustrating their clinical reasoning (8).

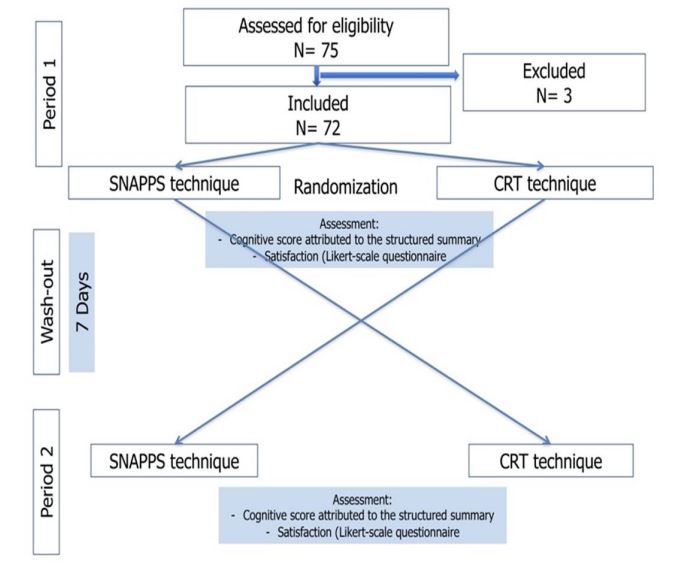

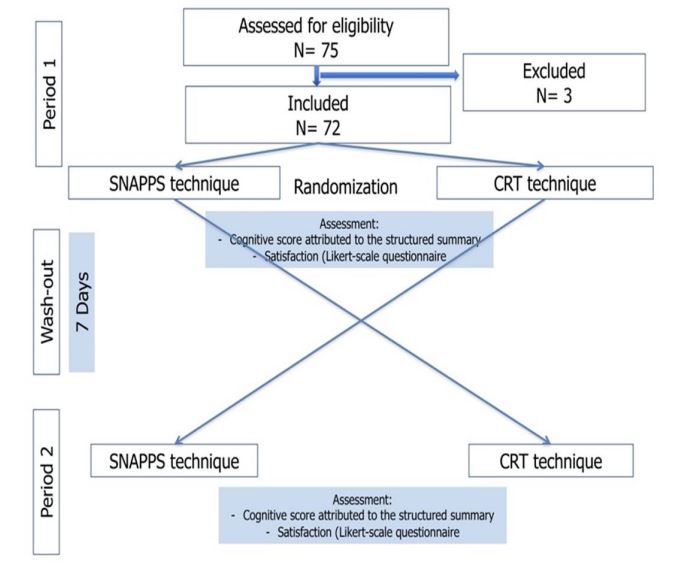

During the 7-day washout periods, the students’ activities were centered on the technical skills mentioned in their portfolios and on performing a critical appraisal of medical literature (9).

Participants and sampling

Since 2013, the faculty’s administrative staff has assigned students to the Department of Pathology. The assignment of students to the different departments is based on the students’ ranking. In the Department of Pathology, the students were divided into 7 groups of 3 to 4 students, with an interval of 2 to 3 weeks between groups. The students were randomly allocated to the SNAPPS or CRT arm using computerized random number allocation.
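The allocation step can be illustrated with a short sketch. The source states only that computerized random number allocation was used; the function name `allocate_first_arm`, the balanced split between arms, the seed, and the student identifiers below are all assumptions made for illustration, not the authors' procedure.

```python
import random

ARMS = ("SNAPPS", "CRT")


def allocate_first_arm(student_ids, seed=None):
    """Randomly assign each student a starting arm for a two-period crossover.

    Hypothetical helper: the balanced split is an assumption, since the
    source does not describe how the random allocation was implemented.
    """
    rng = random.Random(seed)  # seeded generator so the allocation is reproducible
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    allocation = {}
    for index, student in enumerate(shuffled):
        # First half of the shuffled list starts with SNAPPS, the rest with CRT.
        first = ARMS[0] if index < half else ARMS[1]
        second = ARMS[1] if first == ARMS[0] else ARMS[0]
        allocation[student] = (first, second)  # (before washout, after washout)
    return allocation


if __name__ == "__main__":
    # Placeholder identifiers, not study data.
    result = allocate_first_arm(["S1", "S2", "S3", "S4"], seed=2017)
    for student, order in sorted(result.items()):
        print(student, "->", " then ".join(order))
```

Each student receives both techniques, in a random order, which is what distinguishes a crossover allocation from a parallel-group one.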

Procedures

Two real illness scripts were used. The training period lasted three weeks, in accordance with the university’s recommendations. All students who were assigned by the faculty and who consented to participate in this study were included. Students who were completing their training period in other departments were not included. Students who did not wish to be enrolled in this study were excluded. Each group crossed over after a washout period of 7 days.

Tools/Instruments

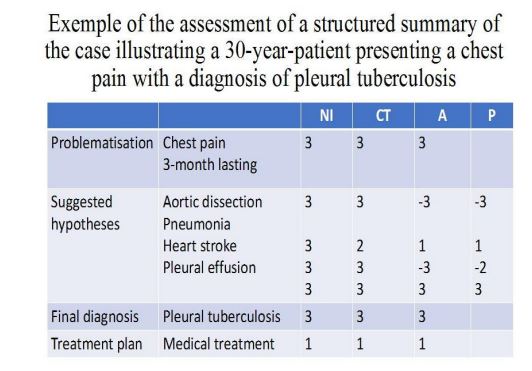

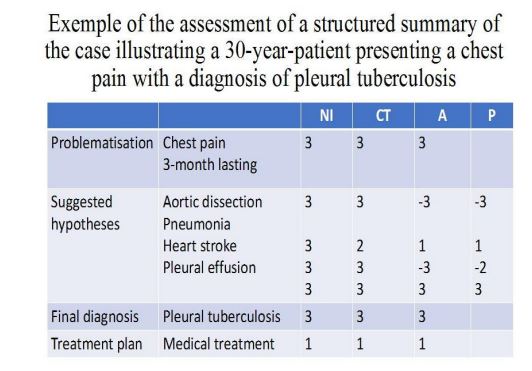

The main judgment criterion was the students’ knowledge competencies, assessed from the structured summaries written by the students after the SNAPPS and the CRT sessions, respectively. To assess the summaries, the authors used the NICTALOP method (10). This method assesses 5 criteria: NI, the number of key ideas or key diagnoses; CT, the choice of terms; A, the veracity of the concepts or diagnoses; and LoP, the length and the position of the ideas and concepts. All these criteria were discussed by 2 experts. The number of key ideas or diagnoses was scored as the sum of the idea values fixed by the experts. The choice of terms was scored on the adequacy and accuracy of the words used. The veracity score was based on the probability of the suspected diagnoses. The LoP criterion was adapted to our use: length is better suited to assessing a full medical observation, whereas we assessed the structured summary and the clinical reasoning, so we used only the position criterion because of the importance of diagnosis prioritization. For this item, the students’ answer was compared with the experts’ answer and scored +1 when correctly positioned and -1 when it did not match the hierarchy established by the experts. The total score was rated out of 10 for every summary. An example of the assessment of a structured summary is presented in Figure 1. The assessment was performed by the same tutor who was blinded to the written material.
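The position item can be illustrated with a small sketch. It assumes the +1/-1 rule is applied to each diagnosis in the student's ranked list, which the text does not state explicitly; the function name `position_score` and the example rankings are hypothetical, and the sketch does not cover how the other criteria are combined into the score out of 10.

```python
def position_score(student_ranking, expert_ranking):
    """Score diagnosis prioritization against the experts' hierarchy.

    Assumption: each diagnosis listed by the student earns +1 when it sits
    at the same rank as in the experts' list and -1 otherwise.
    """
    score = 0
    for rank, diagnosis in enumerate(student_ranking):
        if rank < len(expert_ranking) and expert_ranking[rank] == diagnosis:
            score += 1
        else:
            score -= 1
    return score


if __name__ == "__main__":
    # Illustrative rankings only, not data from the study.
    experts = ["pleural tuberculosis", "pneumothorax", "myocardial infarction"]
    student = ["pleural tuberculosis", "myocardial infarction", "pneumothorax"]
    print(position_score(student, experts))  # first match +1, two mismatches -2
```

A per-diagnosis rule rewards partially correct hierarchies; a single +1/-1 per summary would be a stricter reading of the same sentence.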

The secondary judgment criteria consisted of the assessment of the students’ satisfaction. This was done using a Likert-scale satisfaction questionnaire. Students were asked about their ability to suggest and validate hypotheses according to specific cases, to ask for complementary tests according to their diagnostic suspicions, and to plan treatment modalities. They were also asked about their preferences concerning both techniques used. The participants rated their agreement with each statement on a 5-point Likert scale (do not agree at all–agree completely). Figure 2 represents the study flowchart.

Data analysis

The mean scores obtained with the two clinical reasoning learning techniques were compared using the nonparametric Mann-Whitney U test when the data were not normally distributed, with the alpha level set at 0.05. The authors used SPSS software (version 20.0). The Likert-scale satisfaction questionnaire was analyzed as the percentage of students who were satisfied or not for each question.
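For readers who want to see what the Mann-Whitney U comparison involves, the sketch below computes the U statistic and a two-sided p-value from the tie-corrected normal approximation, using only the Python standard library. It is not the SPSS procedure the authors ran, and the function name `mann_whitney_u` and the scores in the example are invented for illustration.

```python
import math


def mann_whitney_u(x, y):
    """Return (U, two-sided p) using the tie-corrected normal approximation.

    Sketch only: no continuity correction and no exact small-sample
    distribution, so p-values are approximate for small groups.
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = sorted([(value, 0) for value in x] + [(value, 1) for value in y])
    n = n1 + n2
    ranks = [0.0] * n
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        midrank = (i + 1 + j) / 2  # average of ranks i+1 .. j for tied values
        for k in range(i, j):
            ranks[k] = midrank
        t = j - i
        tie_term += t ** 3 - t
        i = j
    rank_sum_x = sum(ranks[k] for k in range(n) if pooled[k][1] == 0)
    u1 = rank_sum_x - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance <= 0:  # every observation tied: no evidence of a difference
        return u, 1.0
    z = (u - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return u, p


if __name__ == "__main__":
    # Hypothetical summary scores out of 10, not the study data.
    snapps = [5.0, 4.5, 6.2, 3.8, 7.2]
    crt = [4.0, 6.5, 2.5, 5.5, 8.0]
    u, p = mann_whitney_u(snapps, crt)
    print(f"U = {u}, p = {p:.3f}, significant at 0.05: {p < 0.05}")
```

The test ranks all scores together and asks whether one arm's ranks are systematically higher, which is why it does not require normally distributed scores.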

Results

Sample

Seventy-two students were included in this study, with a mean age of 21.05 years (SD: 1.25). Three students were excluded because they did not write a structured clinical summary after one session.

Comparison of the students' mean scores in the SNAPPS arm versus the CRT arm

One session of CRT centered on pleural tuberculosis was performed. The mean score was 4.62 (range: 0-10; SD = 2.30).

One session of SNAPPS centered on pulmonary tuberculosis was performed. The mean score was 4.99 (range: 2.2-7.2; SD = 1.53).

No significant statistical difference was observed between the mean scores according to the method used (SNAPPS or CRT) (P=0.890). Table 3 represents the detailed results.

Assessment of the Students’ satisfaction

The analysis of the satisfaction questionnaire revealed that 75% of the students (54/72) reported that they were able to write the objective structured summary, and all the students (72/72) attributed this ability to the checklist used. In addition, 79.2% of the students (57/72) reported that they were able to prioritize the diagnoses, 83.3% (60/72) considered that they were able to validate and justify the different hypotheses, and 66.7% (48/72) considered that they were able to choose exploratory tests according to the different diagnoses. Furthermore, 70.8% of the students (51/72) reported that they were able to identify cognitive errors, and all the students attributed these errors to their lack of knowledge. Regarding preferences, 75% of the students (54/72) preferred the CRT technique and 13.5% (10/72) preferred the SNAPPS session. 92% of the students (66/72) who preferred the CRT technique attributed their preference to collaborative work.

Discussion

This study showed no statistically significant difference in students’ scores between SNAPPS and CRT techniques. It consisted of a prospective cross-over trial including 72 medical students in their third year of medical education. The judgment variable consisted of students’ scores attributed by the same tutor to the structured summaries of observations performed by every student at the end of the sessions. The secondary objective consisted of the students’ satisfaction according to each technique.

Both kinds of assessment allowed us to reach level 1 (satisfaction) and level 2 (learning assessment) of Kirkpatrick’s evaluation model (6). Levels 3 and 4, which relate to long-term effects, were not assessed in this study. According to the methodology adopted, every group of students was assessed twice. Repeated testing was justified by its usefulness in learning clinical reasoning: in a cross-over randomized trial, Raupach et al. demonstrated in 2016 that repeated testing was more effective than repeated case-based learning alone (11). In this study, we compared 2 techniques that are recommended for late learners. We combined both techniques to increase cognitive load. Cognitive load theory describes 2 strains on the learner’s cognitive capacity: the intrinsic load, which depends on the complexity of a given task, and the extrinsic load, which depends on the learner’s cognitive resources. According to some authors, increasing the cognitive load, especially the intrinsic load, improves the competencies of the learners (12). We used real illness scripts to increase the intrinsic load. According to the literature, real clinical material is mandatory for the learning process. Narrative cases that include the judgments made in addition to the facts are recommended. Real cases help teach probability theory, threshold concepts, the nature of differential diagnoses, and disease polymorphisms (1). Known learning theories have integrated the cognitive theory underlying clinical reasoning. Cognitive theory integrates the concept of content specificity, which emphasizes the role of knowledge in diagnosis; script theory, which focuses on illness scripts, the mental representations of the signs and symptoms of specific diseases; and the more recent threshold concept (13).
According to this concept, clinical reasoning is a threshold skill with the following features: it is transformative, troublesome, integrative, associated with practice, and subject to issues of transferability. Every threshold is driven by different learning techniques (13). Because clinical learning may be troublesome, teachers have to adopt supportive techniques such as think-aloud to make the learning process concrete and demystify it (14). In our study, a debriefing session was planned. In an interview study of students’ experiences of learning clinical reasoning, Anakin et al. reported in 2019 that the major challenges identified by the students were practice with undifferentiated cases, lack of independence, lack of communication and feedback, and confusion arising from different sources of information (15). In our study, we used a structured summary in association with a satisfaction questionnaire to assess the clinical reasoning process. Clinical reasoning may be assessed according to Miller’s Pyramid. The knowledge and applied knowledge levels (knows and knows how) may be assessed using patient management problems, script concordance tests, key features questions, clinical integrative puzzles, or virtual patients. The skills demonstration level (shows how) may be assessed using the objective structured clinical examination. The practice level (does) may be assessed using the one-minute preceptor model or direct observation (13). In this study, the assessment method used was a structured summary. No significant difference was observed between SNAPPS and CRT. Besides, the students preferred the CRT to the SNAPPS technique because of its collaborative approach. In a randomized comparison group trial, Wolpaw et al. reported in 2009 that SNAPPS greatly facilitates and enhances the expression of diagnostic reasoning (16).
The authors also recruited third-year medical students and compared only the students’ post-tests. In our case, the repeated testing and the alternation of the techniques may explain the difference observed in the results. Both techniques aimed to help the students develop both modes of clinical thinking: the intuitive process and the analytical process. Starting the learning process in the third year of education may be adequate because the faculty’s medical curriculum was inspired by the Flexner model and the clerkship period starts in the third year. In another randomized controlled trial, Fagundes et al. reported in 2020 that the SNAPPS technique was more effective than the one-minute preceptor in helping students take an active role during case presentation (17). The authors included fifth-year students. In another clinical trial comparing SNAPPS with a teacher-centered technique, the authors reported a significant improvement in the clinical skills of students using the SNAPPS technique (18). This study compared a teacher-centered technique with a learner-centered one. Even if these techniques may differ in efficiency, they all aim to help the students achieve both types of reasoning. In our study, the students were not asked to observe the patients, so they did not face disjunctions or grounding judgments. The major limitation of this work is that the sample size could not be determined statistically because the number of students is set by the faculty staff. The washout period of 7 days may be insufficient to determine the real value of each technique. Besides, we assessed only the immediate effect of the 2 training methods, whereas the real impact of the training becomes apparent when students solve other cases autonomously after a delay, because learning requires consolidation.

Conclusion

This study aimed to compare 2 student-centered techniques for learning clinical reasoning among novice students. It showed no significant difference between CRT and SNAPPS, but the majority of the students preferred the collaborative process of CRT. It also highlighted the role of clinical reasoning learning techniques in improving the students’ self-perception of their competencies.

Ethical considerations

All methods were carried out in accordance with the relevant guidelines and regulations and with the Declaration of Helsinki. The ethics committee of the University Abderrahman Mami Hospital approved the research protocol under the reference: 08/2022.

Besides, the participants were made aware of the purpose of the study, the anonymous nature of the dataset generated, and the option not to respond if they so wished. This information served as the basis for informed consent from each respondent.

Disclosure

The authors declare that they have no conflict of interest and no financial interest to disclose.

Author contributions

MM conceived the idea, conducted the literature review, and performed the statistical analysis. CD, SC, MJ, and FM participated in the literature review and reviewed the final version of the manuscript. CD and MM supervised the trial. FM contributed to the literature research and read and reviewed the manuscript.

Data availability statement

An initial version of this manuscript was published as a preprint with a DOI reference: 10.21203/rs.3.rs-1678128/v1

Abstract

Background & Objective: Many techniques for teaching clinical reasoning have been reported in the literature. The authors focused on 2 of them, the Summarize, Narrow, Analyze, Probe the preceptor, Plan, Self-selected topic (SNAPPS) technique and the Clinical Reasoning Technique (CRT), and compared their effectiveness in improving the clinical reasoning competencies of third-year undergraduate medical students.

Materials & Methods: The authors performed a prospective, randomized, controlled, non-blinded crossover trial including year-3 undergraduate medical students. The judgment criterion was the score attributed to a test assessing the cognitive competencies of the participants, which was a structured summary written by the students after each session. Besides, the students completed a Likert-scale satisfaction questionnaire. Statistical analysis was performed using SPSS software (version 20.0).

Results: Seventy-two students were included with a mean age of 21.03 (SD: 2.30) years. The mean scores of the students allocated to the CRT arm reached 4.62 (SD: 2.93) versus 4.99 (SD: 2.93) for the SNAPPS arm. No significant statistical difference was observed between the mean scores according to the method used. The analysis of the satisfaction questionnaire revealed that 75% of the students preferred CRT because of the collaborative work performed.

Conclusion: This study highlights the need for varying techniques to improve the critical reasoning skills of medical students. Besides, it pointed out students' preference for collaborative approaches illustrating socio-constructivist theories of learning.

Introduction

The clinical reasoning process is complex and includes hypothesis generation, pattern recognition, context formulation, diagnostic test interpretation, differential diagnosis, and diagnostic verification (1). It is a mandatory competency to achieve in the medical curriculum. Many definitions of clinical reasoning exist in the literature. These definitions differ between the cognitivist view, which considers this process as intra-cerebral, and the anthropologist view, which considers clinical reasoning as an out-from-the-skull process (2). According to the latter, clinical reasoning is a collaborative inter-professional activity and a dialectical-material form of reasoning with a high level of emotional labor and a minimum of two embodied minds at work, enhanced by the material environment (2). The notion of “situativity” has been emphasized in this view. According to the cognitive psychologists’ view, clinical reasoning is used to analyze a patient’s status and arrive at a medical decision to provide the proper treatment (3). These different definitions explain the multiplicity of categories of more or less direct teaching techniques, since every definition of clinical reasoning is supported by different teaching techniques. The cognitivist view is supported by individual techniques, of which the Summarize, Narrow, Analyze, Probe the preceptor, Plan, and Self-selected topic (SNAPPS) technique is the best known (4).

On the other hand, the anthropologist view is supported by collaborative problem-solving techniques. One of the techniques used under this view is Clinical Reasoning Teaching (CRT), whose methodology was published mainly in the French literature. SNAPPS is a 6-step technique consisting of Summarizing the case, Narrowing the hypotheses, Analyzing the hypotheses, Probing the preceptor, Planning the treatment modalities, and Self-selecting oriented research (5, 6). The CRT is a kind of collaborative case presentation involving a group of 6 to 8 students, in which the clinical and exploratory data of a patient are presented in response to the students’ questions. In this technique, students are asked to work collectively to summarize the clinical problem and to elaborate and validate diagnostic hypotheses according to the clinical and complementary data. Students learn to elaborate early medical hypotheses and to validate them or rule them out based on the clinical exam and exploratory tests. Both techniques are centered on actual illness scripts. Despite their differences, all these techniques follow the major steps of clinical reasoning reported by Cox et al. in 2006: problem representation, hypothesis generation, data gathering of illness scripts, and diagnosis (7). Fostering effective clinical reasoning implies adopting adequate teaching strategies to improve knowledge, data-gathering skills, data processing, metacognition and reflection, and professional socialization. The different teaching techniques have rarely been compared in the literature. This study aimed to compare the SNAPPS and the CRT techniques in teaching clinical reasoning among undergraduate students.

Material & Methods

Design and setting(s)

The authors performed a prospective, randomized, controlled, non-blinded crossover trial. This interventional and longitudinal study was conducted over 3 academic years (2017/2018, 2018/2019, and 2019/2020).

An expert committee composed of 2 full professors was assigned to select the illness scripts. They were asked to choose illness scripts of similar difficulty. The cases were selected according to the participants' learning objectives. The clinical reasoning teaching sessions were centered on solving the following health problems: pleural tuberculosis and pulmonary tuberculosis.

The CRT arm: A 60- to 90-minute session was organized as follows: one student was briefed on the clinical data of the patient. The other students tried to find the diagnosis following these steps: focusing on the major problem (step 1), generating clinical hypotheses (step 2), assessing the validity of the different hypotheses according to the history-taking data (step 3), validating the hypotheses or citing new ones according to the physical examination data (step 4), validating the hypotheses according to the investigations (step 5), retaining a diagnosis (step 6), and planning treatment management (step 7). All the steps were represented on a whiteboard. Table 1 illustrates the structure of a whiteboard to be filled in by the students.

Table 1: Example of the structure of the whiteboard used during the clinical reasoning teaching (CRT) session

| Major symptom/Problem | A 30-year-old male smoker presenting with chest pain lasting 3 months | ||||

| Diagnostic hypotheses | Myocardial infarction | Endocarditis | aortic dissection | Pneumothorax | Pleural effusion (infectious or tumoral origin) |

| Diagnostic hypotheses after detailed medical history | X (possible hypothesis) | X (possible hypothesis) | Hypothesis to rule out because of the long-lasting pain (3 months) | Hypothesis to rule out because of the pain characteristics | X (possible hypothesis) |

| Diagnostic hypotheses after physical examination | Hypothesis to rule out because of the absence of electric signs | X (possible hypothesis) | X (possible hypothesis because of the reduced tactile vocal fremitus, dullness on percussion, shifting dullness, and diminished or absent breath sounds) | ||

| Diagnostic hypotheses after complementary tests | Hypothesis to rule out because of the normality of the blood tests and the echocardiogram | Diagnosis to retain because of the chest-X-ray findings | |||

| Diagnostic hypotheses after positive tests | Pleural tuberculosis after the positive pleural biopsy showing granulomas with necrosis | ||||

| Management | Medical treatment: 6 months of antibiotic therapy | ||||

Table 2: An example of a checklist used for the structured summary after a clinical reasoning teaching session

| Item | Entry |
| --- | --- |
| Student name | Student MM |
| Clerkship | Department of Pathology in Hospital X |
| Chief of department | Prof X |
| Main patient’s problem | Chest pain lasting three months |
| Patient’s name | Mr. XX |
| Age | 30 years |
| Address | Street X |
| Admission date | 07/03/2023 |
| Date of discharge | 12/03/2023 |
| Symptoms and signs | Chest pain lasting 3 months |
| History data in favor of the positive diagnosis | The pain lasting 3 months was in favor of a pleural effusion |
| Physical exam data in favor of the positive diagnosis | Reduced tactile vocal fremitus, dullness on percussion, shifting dullness, and diminished or absent breath sounds |
| Complementary tests in favor of the positive diagnosis | Chest X-ray showing a pleural effusion |
| Diagnostic justifications | Pleural biopsy confirmed the diagnosis of pleural tuberculosis |
| Other diagnoses discussed and ruled out | Myocardial infarction; pneumothorax; aortic dissection; endocarditis |
| Past medical history | No particular past medical history |
| Psychological state of the patient | Good general state |
| Immediate prognosis | No immediate bad prognostic elements |
| Therapeutic management plan | 6 months of antibiotic therapy |
| Prognostic elements | Good general state |

The SNAPPS arm: The SNAPPS session followed the methodology described by Wolpaw et al. in 2003 (6). The tutor was asked to deliver the clinical information to the students in 6 steps. The first step consisted of summarizing the history and physical findings. The second step consisted of narrowing the differential and choosing two to three relevant hypotheses. The third step consisted of analyzing the different hypotheses: the learners initiated a case-focused discussion of the differential diagnoses by comparing the relevant diagnostic possibilities. The fourth step consisted of probing the preceptor and asking about uncertainties; the learners were expected to reveal areas of confusion and knowledge deficits. The fifth step consisted of planning the treatment of the patient. The last step consisted of self-selecting pathologies to assess; the learner was encouraged to read about focused patient-based questions. At the end of every learning session, the students were asked to write a structured clinical summary illustrating their clinical reasoning (8).

During the 7-day washout periods, the students’ activities were centered on the technical skills mentioned in their portfolios and on performing a critical appraisal of medical literature (9).

Participants and sampling

Since 2013, the faculty’s administrative staff has assigned students to the Department of Pathology. Students were assigned to the different departments according to their ranking. In the Department of Pathology, the students were divided into 7 groups of 3 to 4 students, with an interval of 2 to 3 weeks between groups. The students were randomly allocated to the SNAPPS or CRT arm using computerized random number allocation.
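As an illustration only, computerized random allocation of this kind could be sketched as follows. The student identifiers, the fixed seed, and the near-equal split between arms are assumptions made for the example; the article does not describe the software or the allocation ratio actually used.

```python
import random


def allocate_first_arm(student_ids, seed=2023):
    """Randomly split students into two starting arms of near-equal size.

    The seed and the balanced split are assumptions for this sketch,
    not details reported in the study.
    """
    rng = random.Random(seed)  # seeded generator so the list can be reproduced
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "SNAPPS": sorted(shuffled[:half]),
        "CRT": sorted(shuffled[half:]),
    }


# Hypothetical identifiers for 7 students
students = [f"S{i:02d}" for i in range(1, 8)]
arms = allocate_first_arm(students)
```

In a cross-over design, each student then switches to the other arm after the washout period, so the allocation only determines which technique is experienced first.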

Procedures

Two real illness scripts were used. The training period lasted three weeks, according to the university’s recommendations. All the students who were assigned by the faculty and who gave their consent to participate in this study were included. Students who were performing their training period in other departments were not included. Students who did not want to be enrolled in this study were excluded. The cross-over of every group happened after a washout period of 7 days.

Tools/Instruments

The main judgment criterion was the students’ knowledge competencies, assessed using the structured summaries written by the students after the SNAPPS or the CRT sessions. To assess the summaries, the authors used the NICTALOP method (10). This method assesses 5 criteria: NI, the number of key ideas or key diagnoses; CT, the choice of terms; A, the veracity of the concepts or diagnoses; and LoP, the length and the position of the ideas and concepts. All these criteria were discussed by 2 experts. The number of key ideas or diagnoses was scored as the sum of the ideas’ values fixed by the experts. The choice of terms was scored on the adequacy and accuracy of the words used. The veracity score was based on the probability of the suspected diagnoses. The LoP criteria were adapted to our use: the length criterion is more suitable for assessing a full medical observation, whereas we assessed the structured summary and the clinical reasoning, so we used only the position criterion because of the importance of diagnosis prioritization. For this item, the students’ answer was compared with the experts’ answer and scored +1 when correctly positioned and -1 when it did not match the hierarchy established by the experts. The total score was rated out of 10 for every summary. An example of the assessment of a structured summary is presented in Figure 1. The assessment was performed by the same tutor, who was blinded to the written material.

Figure 1: This is an example of an assessment of a structured summary. NI: number of ideas, CT: choice of terms, A: veracity, P: position. NI and CT scores were rated from 0 to 3, A was rated from -3 to +3 according to the veracity of the diagnoses in accordance with the physical exam and the diagnostic tests.
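The scoring ranges given above can be combined in a short sketch. It assumes that the total is the plain sum of the four retained criteria (NI, CT, A, and P), which is consistent with a maximum of 3 + 3 + 3 + 1 = 10 but is not stated explicitly in the text; the function name and the example values are hypothetical.

```python
def nictalop_total(ni, ct, a, p):
    """Sum the four criteria after checking the ranges described in the text.

    ni, ct: 0 to 3; a: -3 to +3; p: +1 (correct position) or -1 (incorrect).
    Summing the criteria is an assumption of this sketch.
    """
    if not 0 <= ni <= 3:
        raise ValueError("NI must be between 0 and 3")
    if not 0 <= ct <= 3:
        raise ValueError("CT must be between 0 and 3")
    if not -3 <= a <= 3:
        raise ValueError("A must be between -3 and +3")
    if p not in (-1, 1):
        raise ValueError("P must be +1 or -1")
    return ni + ct + a + p


# Hypothetical summary: good ideas and terms, plausible diagnoses, wrong order
example_total = nictalop_total(ni=2, ct=3, a=2, p=-1)
best_total = nictalop_total(ni=3, ct=3, a=3, p=1)
```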

The secondary judgment criterion was the students’ satisfaction, assessed using a Likert-scale satisfaction questionnaire. Students were asked about their ability to suggest and validate hypotheses according to specific cases, to ask for complementary tests according to their diagnostic suspicions, and to plan treatment modalities. They were also asked about their preferences concerning the two techniques used. The participants rated their agreement with each statement on a 5-point Likert scale (do not agree at all–agree completely). Figure 2 represents the study flowchart.

Figure 2: The flowchart of the study.

Data analysis

The mean scores of the two clinical reasoning learning techniques were compared using the nonparametric Mann-Whitney U test when the data were not normally distributed, with the alpha level set at 0.05. The authors used SPSS software (version 20.0). The Likert-scale satisfaction questionnaire was analyzed as the percentage of students satisfied or not for each question.
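For readers unfamiliar with the test, the following is a minimal pure-Python sketch of a two-sided Mann-Whitney U test using the normal approximation with a tie correction and no continuity correction. It is not the authors' SPSS procedure, SPSS may report an exact or differently corrected p-value, and the scores below are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U, two-sided p) using the normal approximation."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    n = n1 + n2
    # Tie correction: sum of (t^3 - t) over groups of tied values
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    if sigma == 0:
        return u, 1.0  # all values identical: no evidence of a difference
    z = (u - n1 * n2 / 2) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return u, p


# Hypothetical summary scores out of 10 for each technique
crt_scores = [4.5, 6.0, 2.0, 7.5, 5.0]
snapps_scores = [5.5, 4.0, 6.5, 3.0, 5.0]
u_stat, p_value = mann_whitney_u(crt_scores, snapps_scores)
significant = p_value < 0.05  # alpha level used in the study
```

The normal approximation is only rough for samples this small; with real data, a statistics package that offers an exact method would be preferable.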

Results

Sample

Seventy-two students were included in this study, with a mean age of 21.05 years (SD: 1.25). Three students were excluded because they did not write a structured clinical summary after one session.

Comparison of the students' mean scores in the SNAPPS arm versus the CRT arm

One session of CRT centered on pleural tuberculosis was performed. The mean score was 4.62 (range: 0-10; SD = 2.30).

One session of SNAPPS centered on pulmonary tuberculosis was performed. The mean score was 4.99 (range: 2.2-7.2; SD = 1.53).

No significant statistical difference was observed between the mean scores according to the method used (SNAPPS or CRT) (P=0.890). Table 3 represents the detailed results.

Table 3: The students’ mean scores in CRT and SNAPPS sessions

| | CRT (b) | SNAPPS (c) |
| --- | --- | --- |
| Mean score | 5.09 | 5.17 |
| SD (a) | 2.93 | 2.93 |
| CI 95% | [4.40-5.70] | [4.40-5.80] |
| Sig. | U = …48, p = 0.890 | |

a: Standard deviation; CI 95%, confidence interval 95%; b: Controlled randomized trial

c: Summarize Narrow Analyze Probe the preceptor Plan Self-select topic


Assessment of the Students’ satisfaction

The analysis of the satisfaction questionnaire revealed that 75% of the students (54/72) reported that they were able to write the structured summary, and 100% of the students (72/72) attributed this ability to the checklist used. In addition, 79.2% of the students (57/72) reported that they were able to prioritize the diagnoses, 83.3% (60/72) considered that they were able to validate and justify the different hypotheses, and 66.7% (48/72) considered that they were able to choose exploratory tests according to the different diagnoses. Furthermore, 70.8% of the students (51/72) reported that they were able to identify cognitive errors, and all the students attributed these errors to their lack of knowledge. Regarding preferences, 75% of the students (54/72) preferred the CRT technique and 13.5% (10/72) preferred the SNAPPS session. Finally, 92% of the students (66/72) who preferred the CRT technique attributed their preference to collaborative work.

Discussion

This study showed no statistically significant difference in students’ scores between the SNAPPS and CRT techniques. It was a prospective cross-over trial including 72 medical students in their third year of medical education. The judgment variable was the score attributed by the same tutor to the structured summary written by every student at the end of each session. The secondary objective was to assess the students’ satisfaction with each technique.

Both kinds of assessment allowed us to reach level 1 (satisfaction) and level 2 (learning assessment) of Kirkpatrick’s evaluation model (6). Levels 3 and 4, which relate to long-term effects, were not assessed in this study. According to the methodology adopted, every group of students was assessed twice. Repeated testing was justified by its utility in learning clinical reasoning, as reported by Raupach et al. in 2016 in a cross-over randomized trial demonstrating that repeated testing was more effective than repeated case-based learning alone (11). In this study, we compared 2 techniques that are recommended for late learners. We associated both techniques to increase cognitive load. Cognitive load theory describes 2 strains on the learner's cognitive capacity: the intrinsic load, which depends on the complexity of a given task, and the extrinsic load, which depends on the learners’ cognitive resources. According to some authors, increasing the cognitive load, especially the intrinsic load, improves the competencies of the learners (12). We used real illness scripts to increase the intrinsic load. According to the literature, real clinical material is mandatory for the learning process. Narrative cases that include the judgments made in addition to the facts are recommended. Real cases help teach probability theory, threshold concepts, the nature of differential diagnoses, and disease polymorphisms.

Conclusion

This study aimed to compare 2 student-centered techniques for learning clinical reasoning among novice students. It showed no significant difference between CRT and SNAPPS, but the majority of the students preferred the collaborative process of CRT. It also highlighted the role of clinical reasoning learning techniques in improving the students’ self-perception of their competencies.

Ethical considerations

All methods were carried out in accordance with the relevant guidelines and regulations and the Declaration of Helsinki. The ethics committee of the University Abderrahman Mami Hospital approved the research protocol under the reference: 08/2022.

In addition, the participants were made aware of the purpose of the study, the anonymous nature of the dataset generated, and the option not to respond if they so wished. This information served as the basis for informed consent from each respondent.

Disclosure

The authors declare that they have no conflict of interest and no financial interest to disclose.

Author contributions

MM had the idea, performed the literature review, and carried out the statistical analysis. CD, SC, MJ, and FM participated in the literature review and reviewed the final version of the manuscript. CD and MM supervised the trial. FM contributed to the literature search, read the manuscript, and reviewed it.

Data availability statement

An initial version of this manuscript was published as a preprint with a DOI reference: 10.21203/rs.3.rs-1678128/v1

Article Type: Original Research

Subject:

Medical Education

Received: 2023/03/30 | Accepted: 2023/05/2 | Published: 2023/08/15


References

1. Kassirer JP. Teaching Clinical Reasoning: Case-Based and Coached. Academic Medicine. 2010;85:1118-24. [PubMed]

2. Bleakley A. Re-visioning clinical reasoning, or stepping out from the skull. Medical Teacher. 2021;43(4):456-462. [DOI]

3. Shin HS. Reasoning processes in clinical reasoning: From the perspective of cognitive psychology. Korean Journal of Medical Education. 2019;31(4):299-308. [DOI]

4. Zairi I, Mzoughi K, Ben Dhiab M, et al. Evaluation of clinical reasoning teaching for third year medical students. La Tunisie Médicale. 2017;95:1-5. [PubMed]

5. Horner P, Hunukumbure D, Fox J, et al. Learning in the Out-Patient Setting Outpatient Learning Perspectives at a UK Hospital. The Clinical Teacher. 2020;17:680-87 [PubMed]

6. Wolpaw TM, Wolpaw DR, Papp KK. SNAPPS: A Learner-Centered Model for Outpatient Education. Academic Medicine. 2003;78:893-97. [PubMed]

7. Cox M, Irby DM, Bowen JL. Educational Strategies to Promote Clinical Diagnostic Reasoning. New England Journal Of Medicine. 2006;355:2217-2225. [Article]

8. Tabbane C. Introduction elements to medical pedagogical workshops. Tunisia: Centre de Publications Universitaires; 2000.

9. Mlika M, Hassine L, Braham E, et al. About the Association of Different Methods of Learning in the Training of Medical Students in a Pathology Lab. Scholars Journal of Applied Medical Sciences. 2015;3:1149-53. [Article]

10. Bonnemains L, Marcon F, Braun M. Faisabilité de l’évaluation d’une observation médicale par combinaison de multiples critères : méthode NICTALOP. Pédagogie Médicale. 2013;14(2):119-132. [DOI]

11. Raupach T, Andresen JC, Meyer K, et al. Test-enhanced learning of clinical reasoning: a crossover randomised trial. Medical Education. 2016;50(7):711-720. [DOI]

12. Klein M, Otto B, Fischer MR, et al. Fostering medical students’ clinical reasoning by learning from errors in clinical case vignettes: effects and conditions of additional prompting procedures to foster self-explanations. Advances in Health Sciences Education. 2019;24(2):331-351. [DOI]

13. Kiesewetter J, Sailer M, Jung VM, et al. Learning clinical reasoning: How virtual patient case format and prior knowledge interact. BMC Medical Education. 2020;20(1). [DOI]

14. Pinnock R, Anakin M, Jouart M. Clinical reasoning as a threshold skill. Medical Teacher. 2019;41(6):683-689. [DOI]

15. Anakin M, Jouart M, Timmermans J, et al. Student Experiences of Learning Clinical Reasoning. The Clinical Teacher. 2019;16:1-6. [Article]

16. Wolpaw T, Papp KK, Bordage G. Using SNAPPS to Facilitate the Expression of Clinical Reasoning and Uncertainties: A Randomized Comparison Group Trial. Academic Medicine. 2009;84:517-24. [Article]

17. Fagundes EDT, Ibiapina CC, Alvim CG, et al. Case presentation methods: A randomized controlled trial of the one-minute preceptor versus SNAPPS in a controlled setting. Perspectives In Medical Education. 2020;9(4):245-250. [DOI]

18. Barangard H, Afshari P, Abedi P. The effect of the SNAPPS (summarize, narrow, analyze, probe, plan, and select) method versus teacher-centered education on the clinical gynecology skills of midwifery students in Iran. Journal of Education And Evaluation In Health and Professionalism. 2016;13:41. [DOI]


Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.