Volume 18, Issue 3 (2025)

J Med Edu Dev 2025, 18(3): 109-117

Ethics code: As per the Tbilisi State Medical University Biomedical Research Ethics Committee, the study does not

Download citation:

BibTeX | RIS | EndNote | Medlars | ProCite | Reference Manager | RefWorks

Send citation to:

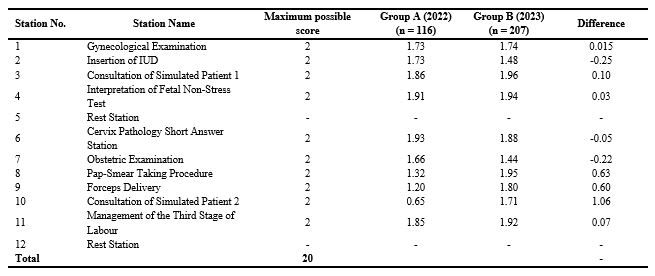

BibTeX | RIS | EndNote | Medlars | ProCite | Reference Manager | RefWorks

Send citation to:

Chitaishvili D, Manjavidze I, Nozadze P, Otiashvili L. Impact of objective structured clinical examination scheduling (OSCE) timing on medical students' performance in obstetrics and gynecology. J Med Edu Dev 2025; 18 (3) :109-117

URL: http://edujournal.zums.ac.ir/article-1-2403-en.html

1- Tbilisi State Medical University, d.chitaishvili@tsmu.edu

2- Tbilisi State Medical University

Keywords: objective structured clinical examination, medical education, student performance, assessment timing, educational strategies

Abstract

Background & Objective: This study examined whether the timing of the Objective Structured Clinical Examination (OSCE), either immediately after an Obstetrics and Gynecology (OB-GYN) clinical rotation or at the end of the semester, affects medical students' performance.

Materials & Methods: A quasi-experimental, mixed-methods study was conducted at the Faculty of Medicine, Tbilisi State Medical University (TSMU), among seventh-semester medical students during the period 19-09-2022 to 01-03-2024. Participants completed an OB-GYN rotation and were assigned to one of two groups based on OSCE scheduling. Group B (n = 203) took the OSCE immediately following the rotation (3–6 days post-rotation), while Group A (n = 116) took the exam at the end of the semester (5–116 days post-rotation). Group A data were from the 2022–2023 academic year, while Group B followed the new scheduling system introduced in September 2023. Performance scores were compared between groups, and surveys captured perceptions of scheduling among students, faculty, and staff.

Results: Group B achieved a higher mean OSCE score (16.38) than Group A (15.85), but the difference was not statistically significant (p > 0.05). However, immediate scheduling was associated with a much higher attendance rate in Group B (96.7%) than in Group A (72.96%), suggesting improved engagement. Survey data showed that students in both groups reported similar levels of stress and perceived preparation time, indicating that earlier scheduling did not adversely affect them. These findings suggest that although performance scores were comparable, immediate OSCE scheduling may offer practical advantages without compromising the student experience.

Conclusion: While OSCE timing did not yield a statistically significant difference in student performance, immediate scheduling was linked to practical benefits, such as higher attendance rates. Survey responses also showed comparable stress levels and perceived preparation time between groups. These findings support flexible OSCE scheduling to increase participation and match student preferences without lowering academic standards.

Introduction

Summative assessments at the end of a training course are essential for evaluating students’ progress and motivating them toward achieving clinical competence. This is particularly critical in medical education, as clinical skills must be rigorously assessed to ensure readiness for real-world practice. The Objective Structured Clinical Examination (OSCE) is considered the “gold standard” for evaluating clinical competence due to its structured, standardized format and emphasis on practical, communication, and procedural skills in simulated clinical scenarios. Although the OSCE is well established, limited research exists on how its timing (immediately after a clinical rotation or at the end of the semester) affects student performance and satisfaction. Existing literature suggests that the two approaches have different pedagogical advantages. Immediate testing draws on the recency effect, helping students recall and apply clinical knowledge while it is still fresh [1, 4]. In contrast, delayed testing may benefit from distributed practice and retrieval-based learning, supporting better long-term retention and integration of theoretical knowledge [5–9]. However, most studies on testing effects focus on theoretical or non-clinical subjects [13, 17], leaving a gap in understanding how timing affects OSCE outcomes, which uniquely assess hands-on clinical and interpersonal skills. While some research has examined how timing affects stress, preparation, and performance [11, 12], few studies have examined these factors in the context of OSCEs, especially within Obstetrics and Gynecology (OB-GYN) rotations.

This study aims to address this gap by examining the impact of OSCE timing on student performance and satisfaction at Tbilisi State Medical University (TSMU). Specifically, it compares outcomes between students who took the OSCE immediately after their rotation and those assessed at the end of the semester. In addition, it includes feedback from students, faculty, and staff to inform evidence-based improvements in OSCE scheduling. The key objectives were to compare overall OSCE scores between the two study groups, to analyze OSCE scores by individual station between the groups, and to assess perceptions of OSCE timing among students, faculty, and staff.

Materials & Methods

Design and setting(s)

This study used a quasi-experimental, mixed-methods design and was conducted at the Faculty of Medicine of Tbilisi State Medical University (TSMU) during the period 19-09-2022 to 01-03-2024. It compared two natural cohorts of seventh-semester medical students based on the timing of their OB-GYN OSCE. Group A (2022–2023 academic year) completed the OSCE at the end of the semester, while Group B (2023–2024 academic year) completed it immediately after their clinical rotation, following the institutional scheduling change introduced in September 2023. Group assignment was determined by the institutional academic calendar and administrative scheduling constraints; therefore, randomization was not possible.

The quantitative phase involved the collection and analysis of numerical data, including OSCE scores and structured survey responses, to compare student performance and perceptions between the two groups. The qualitative phase explored experiences and perceptions regarding OSCE timing through open-ended feedback gathered from students, OSCE examiners, and administrative staff. Together, these approaches provided a comprehensive evaluation of how exam timing may affect performance outcomes, logistical efficiency, and stakeholder satisfaction. The OSCE scenarios were developed by academic staff to ensure consistency and alignment with learning outcomes. Identical scenarios were used for both groups, and the same examiners assessed all students to maintain fairness and reliability. The study was not fully blinded, although partial blinding was applied to specific aspects. For example, data analysis was performed without knowledge of group assignment, so that the evaluation of OSCE performance was not influenced by group membership. Because the feedback from staff and students was not blinded, a possibility of response bias remains.

Participants and sampling

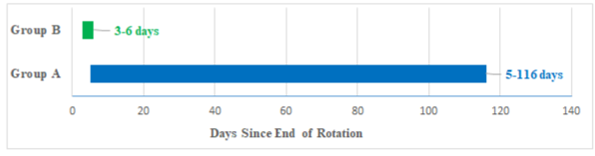

The distribution of OSCE timing intervals across the two groups highlights the contrast between the immediate post-rotation assessment and the delayed end-of-semester exam schedules.

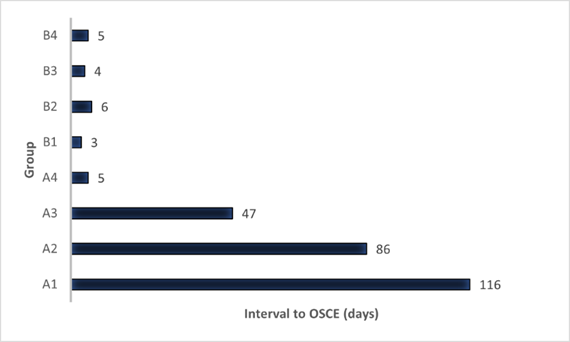

Figure 1b. Comparison of OSCE scheduling policy for Group A and Group B

Timeline comparison of OSCE scheduling: 5–116 days post-rotation for Group A vs. 3–6 days for Group B.

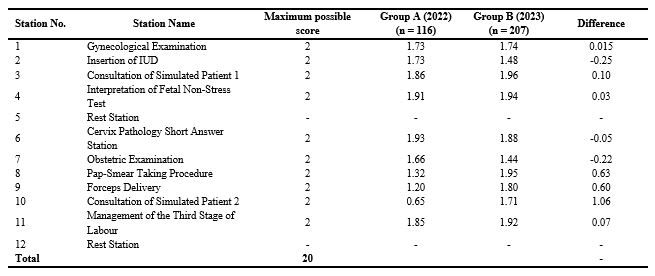

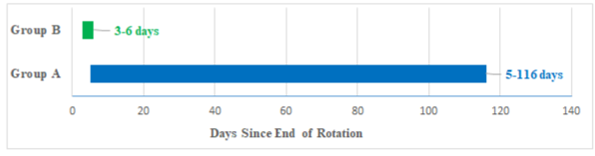

Table 1. OSCE scores by station in Group A and Group B

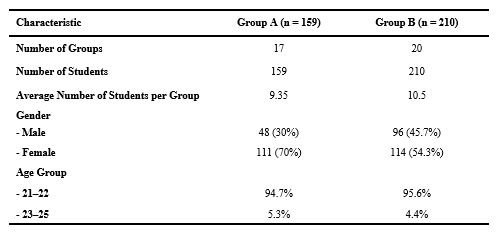

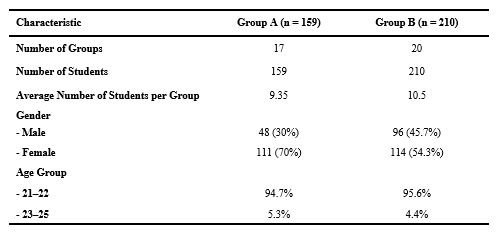

Table 2. Distribution of students and demographic characteristics across two study groups

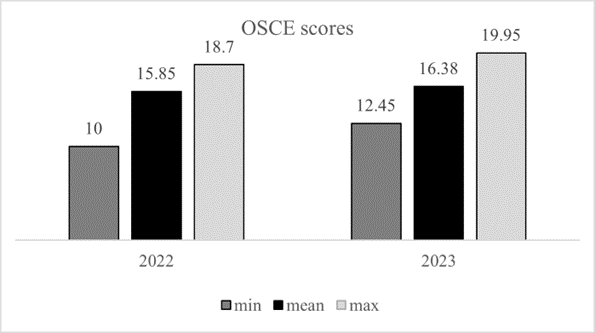

Figure 2. Distribution of overall OSCE scores for Group A and Group B.

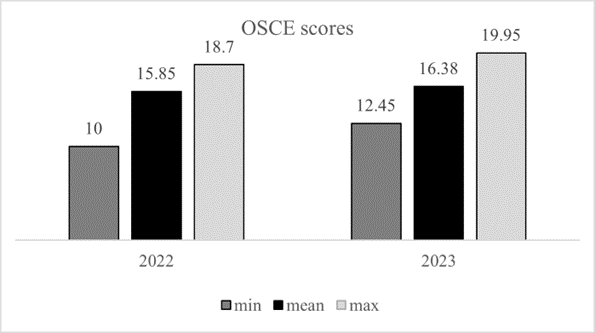

A comparison of minimum, mean, and maximum OSCE scores in both groups, offering a summary view of performance and basic score range.

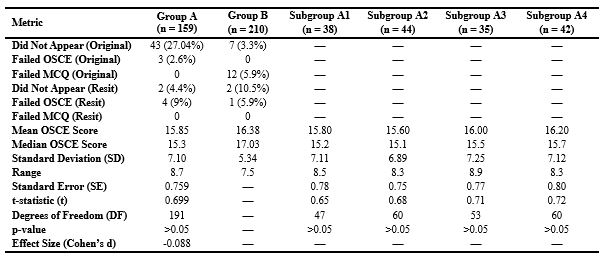

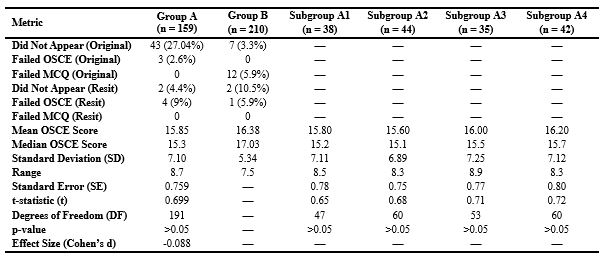

Table 3. Summary of OSCE Exam Results and Subgroup Analysis for Group A and Group B

Abbreviations: n, number of participants; OSCE, objective structured clinical examination; MCQ, multiple-choice questions; SD, standard deviation; SE, standard error; t, t-statistic; DF, degrees of freedom; p-value, probability value.

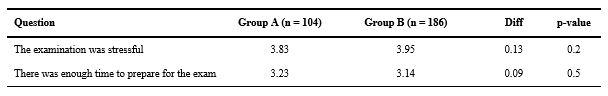

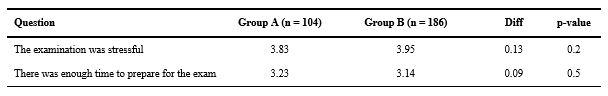

Table 4. Likert scale responses on exam stress and preparation adequacy

While these comments reflect perceived improvements in workflow and manageability, no formal analysis of cost, examiner hours, or resource use was conducted. These observations are qualitative and based on the practical experience of the OSCE organizing team.

In contrast, academic faculty expressed a preference for the previous system, citing scheduling constraints and clinical obligations, noting that "it’s challenging to coordinate the availability of 10 doctors from the university clinic once a month due to their busy schedules," "it’s more practical to gather 20 doctors at the end of February and do the exam over 2–3 days using two circuits of stations," and "leaving my patients frequently is difficult; I would prefer to attend the exam for 2–3 days once a semester in February rather than coming monthly to the examination center."

Discussion

Formative and summative assessments play complementary roles in medical education. While formative assessments support skill acquisition through ongoing feedback [1, 2], summative assessments such as the OSCE evaluate competency at key milestones [3, 4]. This study examined whether the timing of summative OSCEs, either immediately after the OB-GYN rotation (Group B) or at the end of the semester (Group A), affects student performance, consistency, attendance, and psychological readiness [5, 6]. Although the difference in mean OSCE scores between groups was not statistically significant (p > 0.05), Group B, assessed immediately post-rotation, demonstrated more consistent performance (SD = 5.34 vs. 7.10) and slightly higher average scores (16.38 vs. 15.85). These findings align with prior research suggesting that assessments conducted shortly after clinical experience may support skill retention and stabilize performance outcomes [1, 2, 12, 17]. Notably, Group B outperformed Group A in procedural stations such as Pap-smear taking (Station 8), forceps delivery (Station 9), and simulated patient interaction (Station 10), while Group A scored higher on knowledge-based tasks such as IUD insertion (Station 2), cervix pathology (Station 6), and obstetric examination (Station 7). This pattern is consistent with established theories of skill decay, which suggest that procedural memory deteriorates more rapidly without reinforcement, making immediate assessment more suitable for evaluating hands-on competencies [4, 10]. In contrast, delayed assessment may favor performance in reasoning-based tasks due to the benefits of distributed practice and retrieval-based learning [6, 7].

Attendance was substantially higher in Group B (96.7%) than in Group A (72.96%), likely due to improved engagement and immediacy when assessments follow clinical exposure [8, 13]. Higher participation may reduce the need for resits and improve exam efficiency. Psychological factors appeared to be minimally affected by exam timing: both groups reported comparable levels of stress and perceived adequacy of preparation time. However, Group B’s lower score variability may reflect a more predictable and focused performance environment, potentially linked to reduced cognitive load or higher confidence [4].

The wide range of OSCE timing in Group A (5–116 days) may have introduced uncontrolled variability in retention and readiness. Although subgroup analysis was performed, the lack of significant findings highlights the need for more standardized timing in future studies.

The consistency observed in Group B may also stem from stronger formative assessment during the rotation, which reinforces learning before summative evaluation. This supports the integration of formative and summative approaches to strengthen clinical competence [7, 14, 15].

Faculty and staff feedback highlighted the logistical trade-offs between the two OSCE timing models. While immediate post-rotation assessments may offer educational advantages—such as better alignment with recently acquired clinical skills—they also pose challenges related to clinician availability. Faculty generally preferred the traditional, centralized model to minimize disruption to clinical duties, whereas organizing staff favored the revised model for its improved efficiency and manageability [5, 6]. Balancing pedagogical benefits with institutional feasibility will be essential for sustainable implementation.

Although staff described the revised format as easier to coordinate and less burdensome in smaller batches, these impressions were based on subjective experience. We did not conduct a formal cost-effectiveness or time-use analysis. Future studies could examine the impact of OSCE scheduling on resource allocation, faculty workload, and institutional costs to evaluate its operational implications more fully. Although our original aim was to examine whether OSCE timing would affect student performance scores, we did not anticipate the substantial improvement in attendance observed in Group B. This unplanned but meaningful finding suggests that assessment timing can affect not only academic outcomes but also student engagement and logistical efficiency, factors that are equally important in the design and delivery of medical education programs.

This study has several limitations. First, no a priori power analysis was performed, as the sample size was limited by institutional enrollment. The post hoc power was low (13%), indicating a high risk of Type II error; the study may therefore have failed to detect small but meaningful differences between groups. Future studies should include a priori power calculations and larger or multi-institutional samples to improve statistical power and generalizability. Second, the variation in OSCE timing for Group A may have introduced performance variability that subgroup analysis could not fully address. Third, although examiner calibration and partial double scoring were used to support scoring consistency, no formal inter-rater reliability statistics (e.g., Intraclass Correlation Coefficient [ICC] or Cohen’s κ) were calculated or reported. As a result, the consistency of scoring between evaluators could not be formally confirmed, which is a key limitation in evaluating the objectivity of OSCE outcomes. Fourth, while score analysis was blinded, qualitative feedback from students and staff was not, introducing potential response bias. Finally, the study was conducted within a single institution and specialty, which may limit the applicability of findings to other settings or disciplines.

Conclusion

This study set out to examine whether the timing of OSCE administration would affect student performance. While no statistically significant difference in average scores was found between the two groups, an important and unanticipated finding emerged: students assessed immediately after their rotation demonstrated substantially higher attendance and more consistent performance. Immediate post-rotation OSCEs were associated with lower score variability and enhanced operational efficiency, indicating potential benefits for both learners and educators. These results suggest that timing may affect engagement and logistical outcomes more than exam scores. Group B’s lower score variability suggests that assessments closely following rotations may stabilize outcomes. Stress and perceived preparation did not differ significantly between groups, indicating that psychological readiness may depend more on individual factors than on exam timing. Higher attendance in Group B may reflect greater student engagement when assessment is temporally aligned with learning. Although immediate assessments offer educational advantages, they require careful planning to avoid overburdening clinical staff and resources. Institutions aiming to improve the impact and efficiency of summative assessments may benefit from flexible scheduling models that align closely with clinical exposure. Future research should examine how assessment timing interacts with long-term skill retention, formative feedback, and scalable OSCE implementation. Overall, immediate post-rotation OSCEs may improve performance consistency, engagement, and logistical efficiency without negatively affecting psychological readiness or overall exam scores.

Ethical considerations

This study was reviewed by the Chair of the Biomedical Research Ethics Committee of TSMU, who concluded that full committee review was not required, as the project did not qualify as biomedical research involving human participants. A formal waiver of ethical approval was granted on 15 May 2025 and subsequently presented to the committee during its meeting on 28 May 2025 (Meeting No. #2-2025/116). The study involved secondary analysis of anonymized educational assessment data and anonymous survey feedback. It was conducted in accordance with the institutional guidelines for educational research.

Artificial intelligence utilization for article writing

AI tools were used to support this research. Specifically, ChatGPT (OpenAI) assisted with drafting and editing, improving efficiency, accuracy, and organization. The following ethical guidelines were followed: transparency, originality, bias prevention, human oversight, and confidentiality.

Acknowledgment

We wish to extend our sincere appreciation to the students and faculty of TSMU for their invaluable participation and contribution to this study.

We would also like to express our profound gratitude to the Departments of Obstetrics and Gynecology and Clinical Skills and Multidisciplinary Simulation for their unwavering support and collaboration, which were essential to the successful completion of this research.

Conflict of interest statement

The authors declare that they have no conflicts of interest related to this study and affirm their adherence to ethical research practices throughout the course of the research.

Author contributions

DC and IM led the conceptualization and design of the study. DC coordinated the overall study methodology. Data collection was performed by DC, IM, PN, and LO. Quantitative data analysis was performed by DC and IM, while qualitative feedback was reviewed and analyzed by PN and LO.

The manuscript was drafted by DC and critically revised for important intellectual content by IM. All authors reviewed and approved the final version of the manuscript.

Funding

This research did not receive any specific grants, equipment, or other external resources.

Data availability statement

The data supporting the findings of this study are available from the Clinical Skills and Multidisciplinary Simulation Centre at TSMU, upon reasonable request.

Participants and sampling

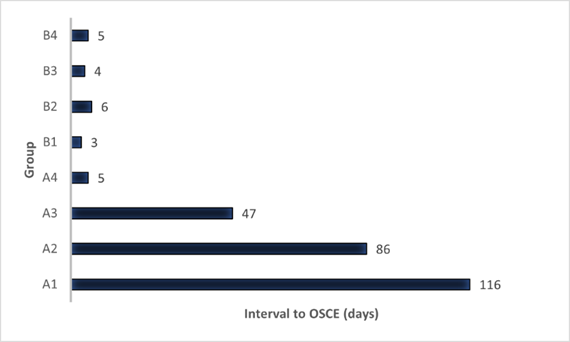

All seventh-semester medical students at TSMU who completed the OB-GYN rotation during the 2022–2023 and 2023–2024 academic years were eligible for inclusion in this study. Students were divided into two groups according to the academic year in which they completed their OB-GYN rotation. Group A (Fall 2022–2023) followed the traditional approach, with the OSCE given once at the end of the semester, after all clinical rotations had ended. Accordingly, students in this group took the exam 5 to 116 days after completing their OB-GYN rotation. Group B (Fall 2023–2024) took the OSCE immediately after completing their rotation, typically within 3 to 6 days, following a scheduling reform introduced in response to student and faculty feedback. This revised model aimed to improve retention, reduce logistical delays, and increase participation (Figure 1a and 1b). Students in each academic year were enrolled in standard educational groups, often referred to as “flows,” consistent with the structure of clinical education at TSMU. These groups followed a staggered rotation schedule based on institutional logistics, unrelated to the study design. As this study compared two naturally occurring cohorts defined by the academic calendar, the sample size was determined by total eligible enrollment and was not calculated a priori.

Figure 1a. Time Interval Between OB-GYN Rotation End and OSCE Dates.

The distribution of OSCE timing intervals across the two groups highlights the contrast between the immediate post-rotation assessment and the delayed end-of-semester exam schedules.

Tools/Instruments

The OSCE consisted of 12 clinical stations, each lasting 6 minutes. Ten were active, scored stations, while two (Stations 5 and 12) were rest stations and were not included in the performance assessment. The stations assessed various competencies, including gynecological and obstetric procedures, communication with simulated patients, and clinical reasoning (Table 1).

To assess students' perceptions of the exam schedule, we used two items from an existing post-course Likert-scale questionnaire: “The examination was stressful” and “There was enough time to prepare for the exam.” These items gave insight into student experiences related to OSCE timing. A separate questionnaire was given to OSCE coordinators, examiners, supervisors, and technicians to gather feedback on organizational aspects and the scheduling change. To ensure content validity, all OSCE scenarios were developed and reviewed by a panel of experienced OB-GYN faculty members for alignment with the intended learning objectives and clinical competencies. Station content was tested during faculty development sessions and revised based on feedback. To support inter-rater reliability, all evaluators took part in a standardized training session that included calibration exercises using sample performances and a detailed scoring rubric. A subset of about 20% of students was independently scored by two evaluators to promote consistent scoring practices; however, inter-rater reliability was not formally assessed using metrics such as the Intraclass Correlation Coefficient (ICC) or Cohen’s κ.

Table 1. OSCE scores by station in Group A and Group B

Abbreviations: n, number of participants.

Data collection methods

Students' OSCE scores were collected, including both total scores and individual station scores.

The pass mark was set at 60%, with 40 points equally divided between multiple-choice questions (MCQs) and OSCE performance.

After the examination, students from both groups (A and B) completed a questionnaire assessing their stress levels and the perceived adequacy of preparation time. Additionally, OSCE examiners, coordinators, and administrative staff were surveyed at the end of the Fall 2023 semester to gather feedback on the scheduling change and its organizational impact. Attendance records were also reviewed to determine participation rates across cohorts. Although all examiners took part in calibration training and about 20% of student performances were scored by two evaluators to support scoring consistency, formal inter-rater reliability statistics, such as ICC or Cohen’s κ, were not calculated. This is acknowledged as a methodological limitation.

Data analysis

A post hoc power analysis was conducted to evaluate whether the sample size was adequate for detecting small effects. Using the observed effect size (Cohen’s d = -0.088), the analysis indicated that the statistical power was about 13%, suggesting the study was underpowered to detect small differences between the groups. Although the study was adequately powered for medium to large effects, the small observed effect size indicates that further research with a larger sample may be necessary to detect subtle differences.
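The reported effect size can be approximately reproduced from the summary statistics given elsewhere in the article. The following is a minimal sketch rather than the authors' actual computation. It assumes that the analyzed group sizes are the attending students (116 in Group A and 203 in Group B), that Cohen's d uses a pooled standard deviation, and that power can be approximated with a normal approximation to the two-sided test at alpha = 0.05. The function names are illustrative.

```python
import math
from statistics import NormalDist


def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Cohen's d (A minus B) using the pooled standard deviation."""
    pooled_var = ((n_a - 1) * sd_a**2 + (n_b - 1) * sd_b**2) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var)


def approx_power(d, n_a, n_b, alpha=0.05):
    """Approximate two-sided power via a normal approximation (assumption)."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Noncentrality: standardized difference scaled by the effective sample size.
    ncp = abs(d) * math.sqrt(n_a * n_b / (n_a + n_b))
    return z.cdf(ncp - z_crit) + z.cdf(-ncp - z_crit)


# Summary statistics as reported in the article (Group A, then Group B).
d = cohens_d(15.85, 7.10, 116, 16.38, 5.34, 203)
power = approx_power(d, 116, 203)
print(f"Cohen's d = {d:.3f}, approximate power = {power:.2f}")
```

Under these assumptions, d comes out close to the reported -0.088 and the approximate power is roughly 0.12, close to the reported figure of about 13%. Exact values depend on the software and on whether a noncentral t distribution is used.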

Descriptive statistics, including means, medians, and standard deviations, were calculated for OSCE scores. Independent-samples t-tests were used to compare performance between Group A and Group B, with statistical significance set at p < 0.05.

Likert-scale survey responses were analyzed to identify differences in perceptions between the groups. OSCE staff feedback was analyzed qualitatively to identify scheduling challenges and benefits.

Results

There were two study groups: Group A included 17 flows (n = 159) from the Fall 2022–2023 OB-GYN rotation, and Group B included 20 flows (n = 210) from Fall 2023–2024. In Group A, 94.7% of students were aged 21–22, and 70% were female. In Group B, 95.6% were aged 21–22, with 54.3% female (Table 2).

Group A had a 27.04% non-participation rate (43 students missed the original OSCE), compared to only 3.3% in Group B (7 students), suggesting improved engagement under the revised scheduling. This corresponds to participation rates of 72.96% for Group A and 96.7% for Group B.
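The percentages above follow directly from the enrolment and missed-exam counts. The short sketch below, with hypothetical variable names, recomputes them as a consistency check. The resulting examinee counts (116 and 203) match the group sizes given in the abstract.

```python
# Enrolment and missed-exam counts as reported in the Results.
enrolled = {"A": 159, "B": 210}
missed = {"A": 43, "B": 7}

summary = {}
for group, total in enrolled.items():
    examinees = total - missed[group]  # students who sat the original OSCE
    summary[group] = {
        "examinees": examinees,
        "non_participation_pct": round(100 * missed[group] / total, 2),
        "participation_pct": round(100 * examinees / total, 2),
    }

for group, stats in summary.items():
    print(group, stats)
```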

Group B's mean OSCE score (16.38) was slightly higher than Group A's (15.85), though the difference was not statistically significant (p > 0.05). However, Group B showed lower score variability (SD = 5.34 vs. 7.10), indicating more consistent performance.
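As a rough check on the non-significant result, the comparison can be re-run from the summary statistics alone. The sketch below is an illustration under stated assumptions, not the authors' analysis: it uses Welch's unequal-variance form of the t-test (the article only says "independent t-tests"), assumes 116 and 203 examinees, and approximates the two-sided p-value with the normal distribution, which is adequate when the degrees of freedom are large.

```python
from math import sqrt
from statistics import NormalDist


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1, v2 = sd1**2 / n1, sd2**2 / n2  # squared standard errors of each mean
    t = (mean1 - mean2) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# Group A vs. Group B OSCE scores (mean, SD, n) as reported.
t_stat, df = welch_t(15.85, 7.10, 116, 16.38, 5.34, 203)

# Assumption: with df in the hundreds, the normal distribution is a close
# stand-in for the t distribution when computing the two-sided p-value.
p_approx = 2 * NormalDist().cdf(-abs(t_stat))
print(f"t = {t_stat:.2f}, df = {df:.0f}, approximate p = {p_approx:.2f}")
```

Under these assumptions the t statistic is about -0.70 and the approximate p-value is well above 0.05, consistent with the reported lack of a significant difference in means.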

Table 2. Distribution of students and demographic characteristics across two study groups

Abbreviations: n, number of participants

Subgroup analysis of Group A (A1–A4) showed minor score variations (15.60–16.20) without statistical significance; the overall OSCE score distributions for Groups A and B are shown in Table 3 and Figure 2. The longer and more variable interval between the rotation and the exam in Group A may have contributed to the greater score variability in that group.

Analysis of individual OSCE stations (Table 1) showed that Group B outperformed Group A in procedural and communication-based tasks, especially at Stations 8 (Pap-smear), 9 (Forceps delivery), and 10 (Simulated patient consultation), while Group A scored slightly higher at Stations 2 (IUD insertion), 6 (Cervix pathology), and 7 (Obstetric examination).

Survey results showed no significant differences between groups in perceived stress or adequacy of preparation time (Table 4). Groups A and B reported similar responses, suggesting that timing did not affect students' psychological readiness.

Figure 2. Distribution of overall OSCE scores for Group A and Group B.

A comparison of minimum, mean, and maximum OSCE scores in both groups, offering a summary view of performance and basic score range

Table 3. Summary of OSCE Exam Results and Subgroup Analysis for Group A and Group B

Abbreviations: n, number of participants; OSCE, objective structured clinical examination; MCQ, multiple choice questions; SD, standard deviation; SE, standard error; t, t-statistics; DF, degrees of freedom; p-value, probability value.

Table 4. Likert scale responses on exam stress and preparation adequacy

Abbreviations: n, number of participants; Diff, difference; p-value, probability value.

OSCE organizing staff generally preferred the revised, more frequent format, citing efficiency and manageability, noting that "it's less tiring," "it is done more frequently, but since it’s done on a single circuit of stations and lasts just one day, it is more efficient," and "managing an exam for 50–60 students is much easier and more efficient than handling 250–270 students all at once." While these comments reflect perceived improvements in workflow and manageability, no formal analysis of cost, examiner hours, or resource use was performed. These observations are qualitative and based on the practical experience of the OSCE organizing team.

In contrast, academic faculty expressed a preference for the previous system, citing scheduling constraints and clinical obligations, noting that "it’s challenging to coordinate the availability of 10 doctors from the university clinic once a month due to their busy schedules," "it’s more practical to gather 20 doctors at the end of February and do the exam over 2–3 days using two circuits of stations," and "leaving my patients frequently is difficult; I would prefer to attend the exam for 2–3 days once a semester in February rather than coming monthly to the examination center."

Discussion

Formative and summative assessments play complementary roles in medical education. While formative assessments support skill acquisition through ongoing feedback [1, 2], summative assessments such as the OSCE evaluate competency at key milestones [3, 4]. This study examined whether the timing of summative OSCEs, either immediately after the OB-GYN rotation (Group B) or at the end of the semester (Group A), affects student performance, consistency, attendance, and psychological readiness [5, 6]. Although the difference in mean OSCE scores between groups was not statistically significant (p > 0.05), Group B, assessed immediately post-rotation, demonstrated more consistent performance (SD = 5.34 vs. 7.10) and slightly higher average scores (16.38 vs. 15.85). These findings align with prior research suggesting that assessments conducted shortly after clinical experience may support skill retention and stabilize performance outcomes [1, 2, 12, 17].

Notably, Group B outperformed Group A in procedural and communication-based stations such as Pap-smear taking (Station 8), forceps delivery (Station 9), and simulated patient interaction (Station 10), while Group A scored slightly higher on knowledge-based tasks such as IUD insertion (Station 2), cervix pathology (Station 6), and obstetric examination (Station 7). This pattern aligns with established theories of skill decay, which suggest that procedural memory deteriorates more rapidly without reinforcement, making immediate assessment more suitable for evaluating hands-on competencies [4, 10]. In contrast, delayed assessment may favor performance in reasoning-based tasks because of the benefits of distributed practice and retrieval-based learning [6, 7].

Attendance was substantially higher in Group B (96.7%) than in Group A (72.96%), possibly reflecting improved engagement and immediacy when assessments follow clinical exposure [8, 13]. Higher participation may reduce the need for resits and improve exam efficiency. Psychological factors appeared to be minimally affected by exam timing: both groups reported comparable levels of stress and perceived adequacy of preparation time. However, Group B’s lower score variability may reflect a more predictable and focused performance environment, potentially linked to reduced cognitive load or higher confidence [4].

The wide range of OSCE timing in Group A (5–116 days) may have introduced uncontrolled variability in retention and readiness. Although a subgroup analysis was performed, the lack of significant differences highlights the need for more standardized timing in future studies.

The consistency observed in Group B may also stem from stronger formative assessment during the rotation, which supports learning before summative evaluation. This supports combining formative and summative approaches to strengthen clinical competence [7, 14, 15].

Faculty and staff feedback highlighted the logistical trade-offs between the two OSCE timing models. While immediate post-rotation assessments may offer educational advantages, such as better alignment with recently acquired clinical skills, they also pose challenges related to clinician availability. Faculty generally preferred the traditional, centralized model to minimize disruption to clinical duties, whereas organizing staff favored the revised model for its improved efficiency and manageability [5, 6]. Balancing pedagogical benefits with institutional feasibility will be essential for sustainable implementation.

Although staff described the revised format as easier to coordinate and less burdensome in smaller batches, these impressions were based on subjective experience. We did not perform a formal cost-effectiveness or time-use analysis. Future studies could examine the impact of OSCE scheduling on resource allocation, faculty workload, and institutional costs to evaluate its operational implications more fully.

Although our original aim was to examine whether OSCE timing would affect student performance scores, we did not anticipate the substantial improvement in attendance observed in Group B. This unplanned but meaningful finding suggests that assessment timing can affect not only academic outcomes but also student engagement and logistical efficiency, factors that are equally important in the design and delivery of medical education programs.

This study has several limitations. First, no a priori power analysis was performed, as the sample size was limited by institutional enrollment. The post hoc power was low (13%), indicating a high risk of Type II error. This suggests the study may have failed to detect small but meaningful differences between groups. Future studies should include a priori power calculations and larger or multi-institutional samples to improve statistical power and generalizability. Second, the variation in OSCE timing for Group A may have introduced performance variability that subgroup analysis could not fully address. Third, although examiner calibration and partial double scoring were used to support scoring consistency, no formal inter-rater reliability statistics (e.g., Intraclass Correlation Coefficient [ICC] or Cohen’s κ) were calculated or reported. As a result, the consistency of scoring between evaluators could not be formally confirmed, which is a key limitation in judging the objectivity of OSCE outcomes. Fourth, while score analysis was blinded, qualitative feedback from students and staff was not, introducing potential response bias. Finally, the study was conducted within a single institution and specialty, which may limit the applicability of findings to other settings or disciplines.

Conclusion

This study set out to examine whether the timing of OSCE administration affects student performance. While no statistically significant difference in average scores was found between the two groups, an important and unanticipated finding emerged: students assessed immediately after their rotation demonstrated substantially higher attendance and more consistent performance. Immediate post-rotation OSCEs were associated with lower score variability and, according to organizing staff, improved operational efficiency, indicating potential benefits for both learners and educators. These results suggest that timing may affect engagement and logistical outcomes more than exam scores alone. Group B’s lower score variability suggests that assessments closely following rotations may stabilize outcomes. Stress and perceived adequacy of preparation did not differ significantly between groups, indicating that psychological readiness may depend more on individual factors than on exam timing. Higher attendance in Group B may reflect greater student engagement when assessment is temporally aligned with learning. Although immediate assessments offer educational advantages, they require careful planning to avoid overburdening clinical staff and resources. Institutions aiming to improve the impact and efficiency of summative assessments may benefit from flexible scheduling models that align closely with clinical exposure. Future research should examine how assessment timing interacts with long-term skill retention, formative feedback, and scalable OSCE implementation. Overall, immediate post-rotation OSCEs were associated with improved performance consistency, engagement, and logistical efficiency, without negatively affecting psychological readiness or overall exam scores.

Ethical considerations

This study was reviewed by the Chair of the Biomedical Research Ethics Committee of TSMU, who concluded that full committee review was not required, as the project did not qualify as biomedical research involving human participants. A formal waiver of ethical approval was granted on 15 May 2025 and subsequently presented to the committee during its meeting on 28 May 2025 (Meeting No. #2-2025/116). The study involved secondary analysis of anonymized educational assessment data and anonymous survey feedback. It was conducted in accordance with the institutional guidelines for educational research.

Artificial intelligence utilization for article writing

AI tools were used to assist with this work. Specifically, ChatGPT (OpenAI) assisted with drafting and editing, improving efficiency, accuracy, and organization. The following ethical guidelines were followed: transparency, originality, bias prevention, human oversight, and confidentiality.

Acknowledgment

We wish to extend our sincere appreciation to the students and faculty of TSMU for their invaluable participation and contribution to this study.

We would also like to express our profound gratitude to the Departments of Obstetrics and Gynecology and Clinical Skills and Multidisciplinary Simulation for their unwavering support and collaboration, which were essential to the successful completion of this research.

Conflict of interest statement

The authors declare that they have no conflicts of interest related to this study and affirm their adherence to ethical research practices throughout the course of the research.

Author contributions

DC and IM led the conceptualization and design of the study. DC coordinated the overall study methodology. Data collection was performed by DC, IM, PN, and LO. Quantitative data analysis was performed by DC and IM, while qualitative feedback was reviewed and analyzed by PN and LO.

The manuscript was drafted by DC and critically revised for important intellectual content by IM. All authors reviewed and approved the final version of the manuscript.

Funding

This research did not receive any specific grants, equipment, or other external resources.

Data availability statement

The data supporting the findings of this study are available from the Clinical Skills and Multidisciplinary Simulation Centre at TSMU, upon reasonable request.

Article Type: Original Research | Subject: Medical Education

Received: 2025/01/31 | Accepted: 2025/09/9 | Published: 2025/10/1


References

1. Dünne AA, Wilhelm T, Ramaswamy A, Zapf S, Hamer HM, Müller HH. Teaching and assessment in otolaryngology and neurology: does the timing of clinical courses matter? Eur Arch Otorhinolaryngol. 2006;263(7):630-4. [DOI:10.1007/s00405-006-0114-y]

2. Harden RM, Gleeson FA. Assessment of clinical competence: an overview of recent developments. Med Educ. 1979;13(2):134-41. [DOI:10.1111/j.1365-2923.1979.tb00918.x]

3. Salerno A, Euerle BD, Witting MD. Transesophageal echocardiography training of emergency physicians through an e-learning system. J Emerg Med. 2020;58(6):947-52. [DOI:10.1016/j.jemermed.2020.03.036]

4. Kovacs E, Birkás E, Takács J. The timing of testing influences skill retention after basic life support training: a prospective quasi-experimental study. BMC Med Educ. 2019;19:452. [DOI:10.1186/s12909-019-1881-7]

5. Boud D, Falchikov N. Rethinking assessment in higher education: learning for the longer term. London: Routledge; 2007. [DOI:10.4324/9780203964309]

6. Cepeda NJ, Pashler H, Vul E, Wixted JT, Rohrer D. Distributed practice in verbal recall tasks: a review and quantitative synthesis. Psychol Bull. 2006;132(3):354-80. [DOI:10.1037/0033-2909.132.3.354]

7. Roediger HL, Butler AC. The critical role of retrieval practice in long-term retention. Trends Cogn Sci. 2011;15(1):20-7. [DOI:10.1016/j.tics.2010.09.003]

8. Karpicke JD, Roediger HL. The critical importance of retrieval for learning. Science. 2008;319(5865):966-8. [DOI:10.1126/science.1152408]

9. Balch WR, Acheson KA. The effects of retrieval practice on long-term memory: the influence of test timing and learning condition. J Exp Psychol Learn Mem Cogn. 2000;26(6):1714-25.

10. Baddeley AD, Hitch G. The recency effect: implicit learning with explicit retrieval? Mem Cognit. 1993;21(2):146-55. [DOI:10.3758/BF03202726]

11. Alhamad H, Jaber D, Nusair MB, Albahar F, Edaily SM, Al-Hamad NQ, et al. Implementing OSCE exam for undergraduate pharmacy students: a two-institutional mixed-method study. Jordan J Pharm Sci. 2023;16(2):217-34. [DOI:10.35516/jjps.v16i2.1322]

12. Ariga R. Decrease anxiety among students who will do the objective structured clinical examination with deep breathing relaxation techniques. Open Access Maced J Med Sci. 2019;7(16):2619-22. [DOI:10.3889/oamjms.2019.409]

13. Chisnall B, Vince T, Hall S, Tribe R. Evaluation of outcomes of a formative objective structured clinical examination for second-year UK medical students. Int J Med Educ. 2015;6:76-83. [DOI:10.5116/ijme.5572.a534]

14. Cornelison B, Zerr B. Experiences and perceptions of pharmacy students and pharmacists with a community pharmacy-based objective structured clinical examination. J Am Coll Clin Pharm. 2021;4(9):1085-92. [DOI:10.1002/jac5.1472]

15. Couto LB, Durand MT, Wolff ACD, Restini CBA, Faria M Jr, Romão GS, et al. Formative assessment scores in tutorial sessions correlate with OSCE and progress testing scores in a PBL medical curriculum. Med Educ Online. 2019;24(1):1560862. [DOI:10.1080/10872981.2018.1560862]

16. Rushood M, Al-Eisa A. Factors predicting students' performance in the final pediatrics OSCE. PLoS One. 2020;15(9):e0236484. [DOI:10.1371/journal.pone.0236484]

17. Zaric S, Belfield LA. Objective structured clinical examination (OSCE) with immediate feedback in early (Preclinical) stages of the dental curriculum. Creat Educ. 2015;6(6):585. [DOI:10.4236/ce.2015.66058]


This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.