Volume 18, Issue 3 (2025)

J Med Edu Dev 2025, 18(3): 14-25

Ethics code: NU/COMHS/EBS0032/2022

Vilas Hawal M, Archana Simon M, Hasan Abdulla Husain N, Khamis Rashid Al-Harrasi S, Nasser Said Al-Hinai L. Bridging artificial intelligence and ethics in medical education: a comprehensive perspective from students and faculty. J Med Edu Dev 2025; 18 (3) :14-25

URL: http://edujournal.zums.ac.ir/article-1-2389-en.html

Manjiri Vilas Hawal *1, Miriam Archana Simon2, Noor Hasan Abdulla Husain3, Salima Khamis Rashid Al-Harrasi4, Lora Nasser Said Al-Hinai4

1- Department of Pathology, College of Medicine and Health Sciences, National University of Science and Technology, Sohar, Oman, manjiri@nu.edu.om

2- Department of Psychiatry and Behavioural Science, College of Medicine and Health Sciences, National University of Science and Technology, Sohar, Oman

3- College of Medicine and Health Sciences, National University of Science and Technology, Sohar, Oman.

4- MD6 student, College of Medicine and Health Sciences, National University of Science and Technology, Sohar, Oman.

Abstract

Background & Objective: This study aims to explore the perceptions of undergraduate medical students and faculty members regarding their knowledge of Artificial Intelligence (AI), the integration of AI into medical education, and the ethical issues associated with its use.

Materials & Methods: A cross-sectional survey was conducted among undergraduate medical students and faculty of the College of Medicine and Health Sciences, National University, Oman. The study used a questionnaire previously validated by authors of a Canadian study.

Results: A total of 271 medical students and 22 faculty members participated in the study. The majority of the students were unfamiliar with the technical terms of AI, and 56.8% believed that AI would affect their choice of specialization in the future. Many students and faculty expressed concerns about the ethical and social challenges posed by AI and emphasized the need to incorporate AI and ethics into medical education in Oman to better train and prepare medical professionals for an AI-powered healthcare system.

Conclusion: Medical students in Oman seem to be enthusiastic about AI, which is currently in the spotlight. However, they lack proper knowledge of AI, which limits their understanding and usage of the technology. Both undergraduate students and faculty members recognize the growing importance of AI in healthcare and support the inclusion of AI and ethics education in the medical curriculum. Hence, incorporating AI-related topics in medical education can be a promising start.

Introduction

Artificial Intelligence (AI) simulates human intelligence, solves problems, and performs speedy calculations and evaluations based on previously assessed data [1]. It can perform automated tasks without requiring every step in the process to be explicitly programmed by a human [2]. Machine Learning (ML), a subset of artificial intelligence, uses algorithms that identify patterns in data without explicit programming. Deep Learning (DL) is a branch of machine learning that uses artificial neural networks to learn from data and make predictions or judgments; it is especially helpful for tasks such as audio and image recognition [3]. This groundbreaking technology has increasingly permeated numerous fields, including agriculture, manufacturing, fashion, and sports, with healthcare being among the most significant [4]. Artificial intelligence has emerged as a powerful tool in the medical field, revolutionizing the health industry and enhancing patient care. In recent years, the nexus between artificial intelligence and medicine has aroused both excitement and fear in the medical community. Thus far, AI has had an impact on a range of health specialties, including radiology, neurology [5-7], pathology [8], dermatology [9], surgery [10], and cardiology [11]. It has supported a wide range of activities, including disease diagnosis, clinical decision-making, and drug development, and it is also being used to recommend personalized medicine and therapy for patients with comorbid conditions [12, 13]. ML has also been used to analyse neuroimaging data, aiding in the early detection, prognosis, and treatment of brain disorders [14].
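To make the idea of learning "without explicit programming" concrete, the sketch below shows a single artificial neuron (a perceptron, the simplest neural-network unit) learning the logical AND rule from four labelled examples instead of from a hand-written rule. It is a toy illustration under our own assumptions: the data, learning rate, epoch count, and helper names (`train_perceptron`, `predict`) are hypothetical, it is not drawn from the cited studies, and it is far simpler than the deep networks used in medical imaging.

```python
# Toy illustration (hypothetical data and helper names): a single perceptron
# learns the logical AND rule from labelled examples instead of hand-coded logic.

def predict(weights, bias, x):
    """Fire (return 1) when the weighted sum of the inputs exceeds zero."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Learn weights and a bias with the classic perceptron update rule."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # 0 if correct, +1 or -1 if wrong
            # Nudge each weight in the direction that reduces the error.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 0, 0, 1]  # AND: output 1 only when both inputs are 1
w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1]
```

The same code, given different labels, learns a different rule without any change to its logic, which is the sense in which the behaviour comes from the data rather than from explicit programming.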

Requisite knowledge of AI is crucial to prevent misuse and ensure its effective deployment [15]. This highlights the necessity for our healthcare leaders and future clinicians to be aware of the potential applications and limitations of AI and ML. Physicians who lack this knowledge may deliver substandard results for their patients due to their inability to select and incorporate these technologies appropriately in patient care situations [16].

Integrating AI-based competencies into the undergraduate curriculum is a key way to educate and train medical professionals. However, this has not yet been widely addressed, owing to the constantly expanding curriculum and the lack of qualified faculty to teach new subjects effectively [17].

The paths of medical education and AI are expected to intersect in the foreseeable future. To prepare students adequately for this era, medical school faculty members need to embrace these technological innovations with open minds. A study by Pinto Dos Santos et al. assessed the views of 263 medical students from three major universities in Germany and found that the majority agreed that AI should be included in medical training [18]. A study by Sit et al. found that most respondents believed AI would be crucial in healthcare and that learning about AI would benefit their careers [19]. Given the need to familiarize medical students with AI and ML, various institutions globally now offer courses on these topics [20].

AI holds great promise for transforming the healthcare sector, but it also brings a myriad of ethical considerations that require careful navigation to ensure patient safety, privacy, and equity. Concerns surrounding AI have sparked a rush toward "AI Ethics," focusing on how the technology can be developed and implemented ethically [21-23].

The World Health Organization (WHO) believes AI can greatly benefit public health and medicine but stresses the need to address ethical challenges first [2]. AI faces several challenges, including patient privacy, data protection, medical consultation, social gaps, empathy, informed consent, and the impact of automation on employment [24-26].

Hence, before AI is integrated into practice, it is highly important to consider the ethical implications [27,28]. Studies show that AI advancements discourage medical students from pursuing certain specializations due to fears of being replaced [29].

A study conducted at Sultan Qaboos University in Oman reports that the healthcare system at the primary, secondary, and tertiary levels underwent a digital transformation, becoming fully digitized between 2008 and 2009.

The authors also mention that there is an ongoing discussion to introduce an AI-oriented learning objective in the medical curriculum at both the undergraduate and postgraduate levels in Oman [30].

As AI transforms medical practice, attention has turned to preparing the workforce and understanding the views of physicians and students [12]. It is therefore particularly important to understand how medical students view the use of AI in medicine. This study aims to explore the knowledge and ethical concerns of medical faculty and students in Oman regarding artificial intelligence in medicine, as well as their perceptions of integrating AI training into the medical curriculum. These aspects of AI in healthcare have been analysed only to a limited extent in Oman, and this study seeks to address this gap in the literature.

Materials & Methods

Design and setting(s)

This cross-sectional study was carried out at the College of Medicine and Health Sciences, National University of Science and Technology (COMHS, NU), Oman, from September 1, 2022, to May 31, 2023.

Participants and sampling

The study was conducted among the medical students (from Doctor of Medicine Year 1 (MD1) to Doctor of Medicine Year 4 (MD4)) and the faculty members. All the students from MD1 to MD4 in the preclinical years of study were invited to participate via in-class announcements and emails.

This was an observational study that respected the anonymity and autonomy of each participant. To ensure confidentiality, no names or email addresses were collected, and the privacy of each participant was adequately protected. Informed consent was obtained from all participants before they completed the survey.

Inclusion criteria: All the preclinical students and the faculty of the college were included.

Exclusion criteria: Clinical students, those who did not consent to participate, and those who did not complete the entire survey were excluded from the study.

Convenience sampling was employed for the study. The estimated sample size for the study was 235. Out of a total of around 600 students registered in the preclinical phase of the MD program, 271 students consented to participate in the study.

The response rate for student participants was 45%. All faculty members at COMHS, NU were also requested to participate in the study via in-person invitations. The response rate for faculty members was 29%.
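The text does not state how the target sample size of 235 was derived. One common approach that reproduces this number is Cochran's formula with a finite population correction, n₀ = z²p(1 − p)/e² and n = n₀/(1 + (n₀ − 1)/N), assuming a population of about N = 600 preclinical students, 95% confidence (z = 1.96), an expected proportion p = 0.5, and a margin of error e = 0.05. These parameters are our assumptions, not the authors' stated method. The same sketch checks the student response rate (271 of about 600).

```python
import math


def cochran_sample_size(population, z=1.96, p=0.5, margin=0.05):
    """Cochran's formula with a finite population correction.

    Assumed parameters: 95% confidence (z = 1.96), maximum-variance
    proportion p = 0.5, and a 5% margin of error.
    """
    n0 = (z ** 2) * p * (1 - p) / margin ** 2  # infinite-population estimate
    n = n0 / (1 + (n0 - 1) / population)       # finite population correction
    return math.ceil(n)                        # round up to whole respondents


def response_rate(respondents, invited):
    """Percentage of invited people who responded."""
    return 100 * respondents / invited


print(cochran_sample_size(600))        # 235
print(round(response_rate(271, 600)))  # 45
```

Without the correction (a very large population), the same parameters give the familiar figure of 385; the correction matters here because the target is a sizeable fraction of a population of about 600.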

Tools/Instruments

The study instrument was the survey developed and validated by the authors of a study conducted in Toronto, Canada, titled 'Medical Artificial Intelligence Readiness Scale for Medical Students' [31]. A version of this questionnaire that had been adapted and used in the Asian context [13] was used in this study after obtaining permission from the authors. This version has a reported Cronbach's alpha of 0.60, indicating moderate reliability, and established face validity. The original questionnaire [13] included 28 items across eight sections; in line with the objectives of the current study, the authors retained 16 items across four sections. The authors reviewed the questionnaire and adapted its terminology where necessary to suit the geographical and cultural context, and face validity was established. Pilot testing was carried out with 20 respondents, approximately 10% of the estimated sample size.
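Cronbach's alpha, used here and in the Results to summarise internal consistency, is computed from the item variances and the variance of respondents' total scores: α = k/(k − 1) × (1 − Σσᵢ²/σ_T²), where k is the number of items. The minimal sketch below implements that formula; the input layout (one row of item scores per respondent) and the toy data are illustrative assumptions, not study data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents' item scores.

    `responses` is a list of rows, one per respondent, each holding the
    scores for the same k items (k >= 2). Sample variances are used
    throughout; the ratio is the same with population variances.
    """
    k = len(responses[0])
    items = list(zip(*responses))  # one column per item
    sum_item_var = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


# Hypothetical scores for three respondents on two items.
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # 0.667
```

Alpha approaches 1 when items rise and fall together across respondents; dropping items from a scale, as was done when selecting 16 of 28 items, reduces k and can lower alpha.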

Data collection methods

The study data were collected through two survey methods – online and paper-and-pencil.

The questionnaire focused on four domains: knowledge of AI, the impact of AI on the medical profession, AI ethics, and the need to include AI in medical education.

Data analysis

Data were analysed using IBM SPSS Statistics (Statistical Package for the Social Sciences) version 29. Descriptive statistics were used to summarise participants' responses to the survey items. Reliability analysis was carried out using Cronbach's alpha to assess internal consistency. The Kolmogorov-Smirnov test was used to test normality. Non-parametric tests, namely the Kruskal-Wallis and Wilcoxon signed-rank tests, were used to analyse differences, and Spearman's rank correlation was used to assess associations. Linear regression analysis was also carried out to explore the strength of association and to investigate factors that may predict participants' perceptions of AI in medical education.
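The normality check compares the empirical cumulative distribution of a variable with a normal distribution; the Kolmogorov-Smirnov statistic D is the largest vertical gap between the two curves. The sketch below computes D against a normal curve whose mean and standard deviation are estimated from the sample. It returns only the statistic: p-values, and any correction a statistics package may apply when the parameters are estimated from the data, are outside its scope, and the example data are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def ks_statistic_normal(data):
    """Kolmogorov-Smirnov D against a normal fitted to the sample.

    D is the largest absolute gap between the empirical CDF and the
    fitted normal CDF. The empirical CDF is checked just after (i + 1)/n
    and just before (i/n) each sorted observation, which also gives the
    correct gaps when values are tied.
    """
    xs = sorted(data)
    n = len(xs)
    fitted = NormalDist(mean(xs), stdev(xs))  # requires non-constant data
    d = 0.0
    for i, x in enumerate(xs):
        f = fitted.cdf(x)
        d = max(d, (i + 1) / n - f, f - i / n)
    return d


print(round(ks_statistic_normal([1, 2, 3]), 3))  # 0.175
```

Because ordinal survey responses contain many tied values, the empirical curve rises in large steps, which tends to inflate D and makes departures from normality easy to detect in samples of a few hundred.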

Results

Survey results are discussed in two sections: students and faculty.

Two hundred seventy-one medical students participated in the study, of whom 88.6% (n = 240) were female and 11.4% (n = 31) were male. The mean age of participants was 20.60 years (SD = 1.89).

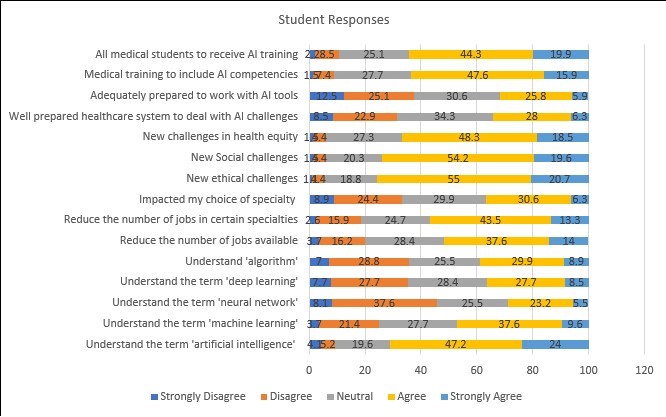

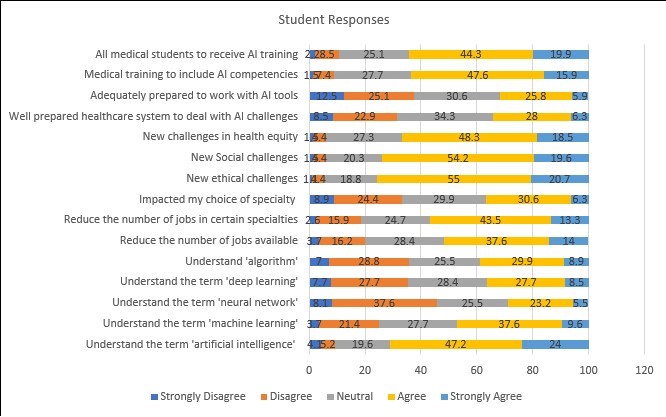

All students were from the preclinical years. 29.5% (n = 80) were from MD1, 10.7% (n = 29) were from MD2, 34.3% (n = 93) were from MD3 and 25.5% (n = 69) were from MD4. Cronbach's Alpha value for the survey questionnaire used was 0.608, indicating moderate reliability. The results of the Kolmogorov-Smirnov test indicated that the data were not normally distributed (p < 0.001) for all test items, leading to the use of non-parametric tests for further analysis. Participants' responses to the survey items are shown in Figure 1.
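The year-of-study and sex percentages reported above follow directly from the counts. The short check below recomputes them, rounded to one decimal place, and confirms that the groups sum to the 271 student respondents; only counts stated in the text are used, and the helper name `percentages` is our own.

```python
def percentages(counts):
    """Convert group counts to percentages of the total (1 decimal place)."""
    total = sum(counts.values())
    return {group: round(100 * n / total, 1) for group, n in counts.items()}


year_counts = {"MD1": 80, "MD2": 29, "MD3": 93, "MD4": 69}  # from the text
sex_counts = {"female": 240, "male": 31}                     # from the text

print(sum(year_counts.values()))  # 271
print(percentages(year_counts))   # {'MD1': 29.5, 'MD2': 10.7, 'MD3': 34.3, 'MD4': 25.5}
print(percentages(sex_counts))    # {'female': 88.6, 'male': 11.4}
```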

Students reported unfamiliarity with technical terms commonly associated with artificial intelligence, including machine learning (25.1%), neural network (45.7%), deep learning (35.4%), and algorithms (35.8%). About half of the participants (51.6%) felt that AI would reduce the number of jobs available to them, 56.8% felt this would apply especially in certain specialties, and 36.9% reported that AI had already influenced their choice of specialization.

Students also believed that AI in medicine would raise new challenges in the ethical (75.7%), social (73.8%), and health equity (66.8%) domains. A majority of participants (65.7%) were unsure whether the current healthcare system was adequately prepared to deal with AI-related challenges. Participants also felt that AI competencies should be included in medical training (63.5%) and that all medical students should receive AI-related training (64.2%). A majority (55.4%) felt that training in AI competencies should begin at the undergraduate level.

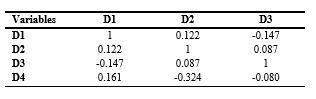

Participant responses were also analysed based on four domains: D1- Knowledge of AI, D2- Impact on the Medical Profession, D3- AI Ethics, and D4- AI and Medical Education. Correlations among domains are shown in Table 1.

Table 1. Correlations among study domains for students

Note: Spearman's correlation analysis was used to explore the association among study domains for students. ** p < 0.01.

Abbreviations: D1, Domain 1; D2, Domain 2; D3, Domain 3; D4, Domain 4; p, probability-value.

Significant correlations were observed among the domains. Knowledge of AI was strongly related to the preference for including AI competencies in medical education.

Understanding the various aspects of artificial intelligence, machine learning, and deep learning is strongly associated with participants' inclination towards AI training. Perceptions of the ethical implications of AI applications are also closely related to professional expectations and academic competencies.

Awareness of potential risks in the ethical, social, and healthcare domains was associated with greater openness to the inclusion of AI-based competency training.

In addition, a preference for including AI competencies as part of undergraduate medical education is related to participants' knowledge of AI and perceived ethical implications in future medical practice.
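Spearman's correlation, used for the domain correlations in Table 1 (and for faculty in Table 2), is the Pearson correlation computed on ranks, with tied values given the average of the ranks they span. The sketch below implements that definition for two lists of domain scores; the function names and example scores are hypothetical, and significance testing is not included.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next sorted value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical domain scores for five respondents.
knowledge = [2, 4, 3, 5, 1]
education = [3, 5, 3, 4, 2]
print(round(spearman_rho(knowledge, education), 3))  # 0.872
```

Because it works on ranks, rho captures any monotonic relationship, which suits ordinal survey scores that were found not to be normally distributed.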

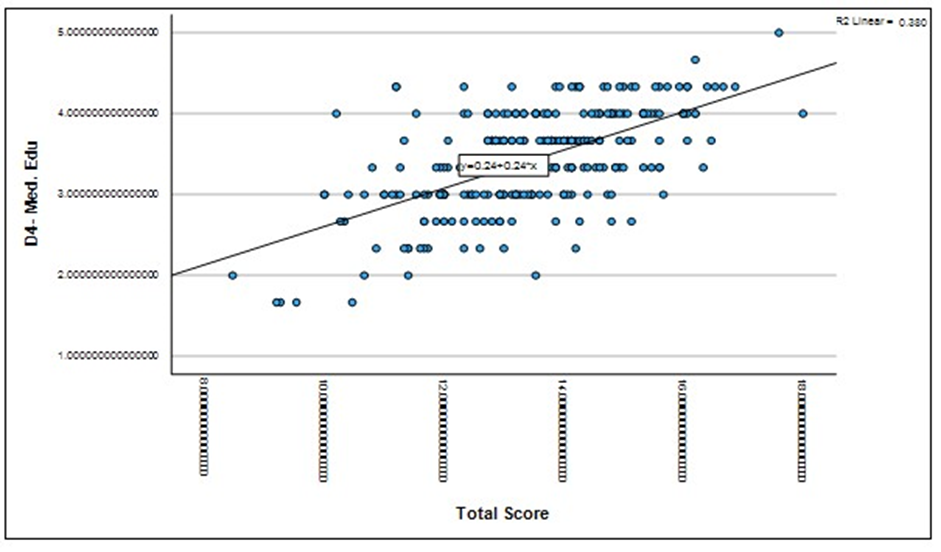

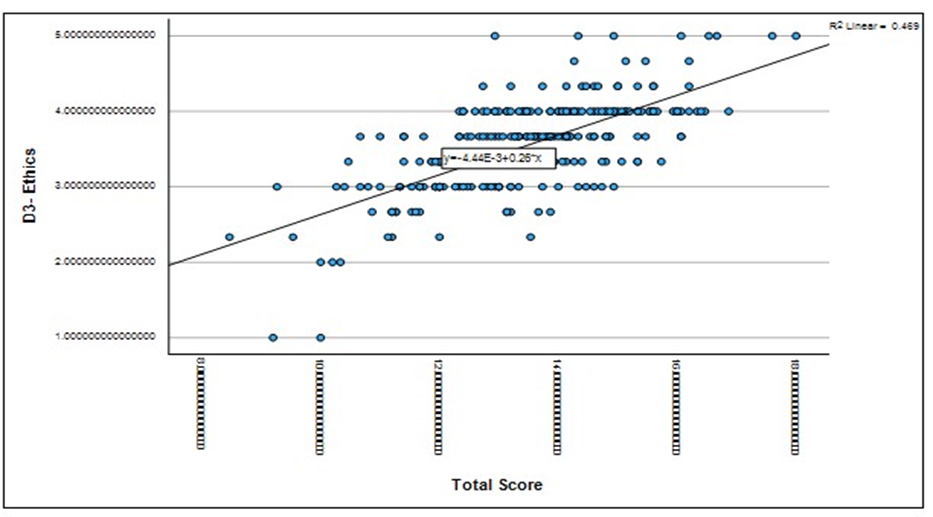

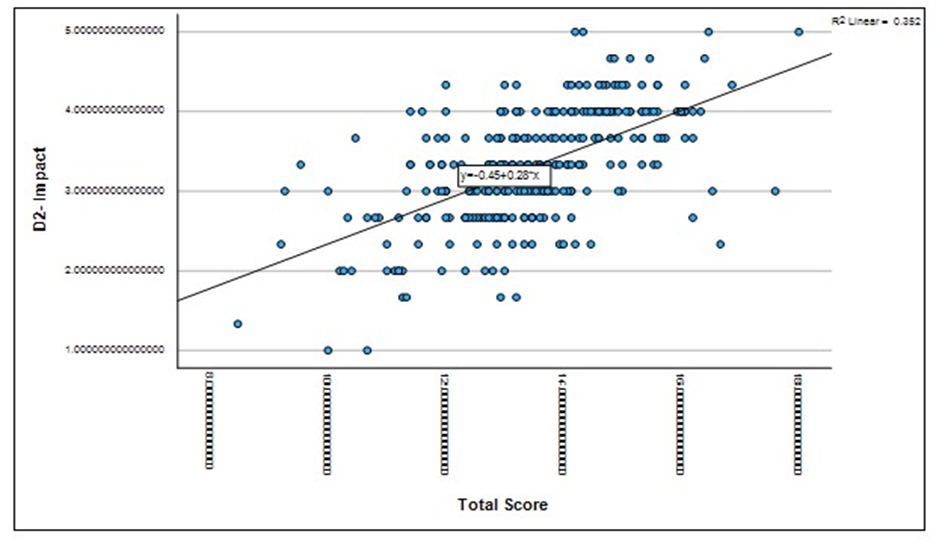

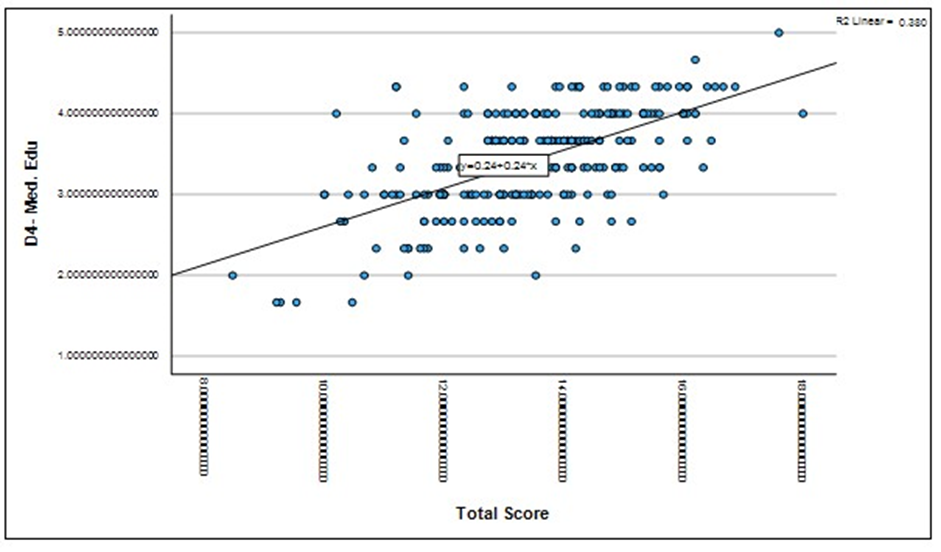

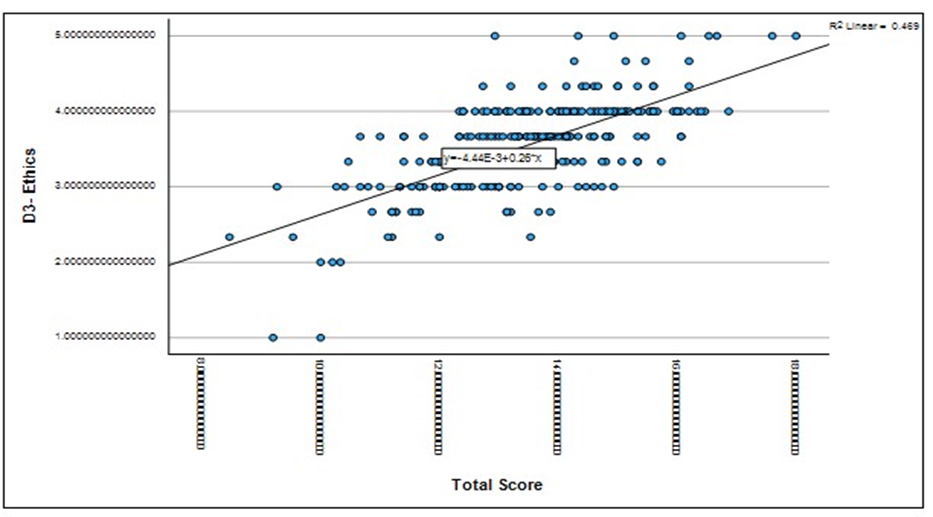

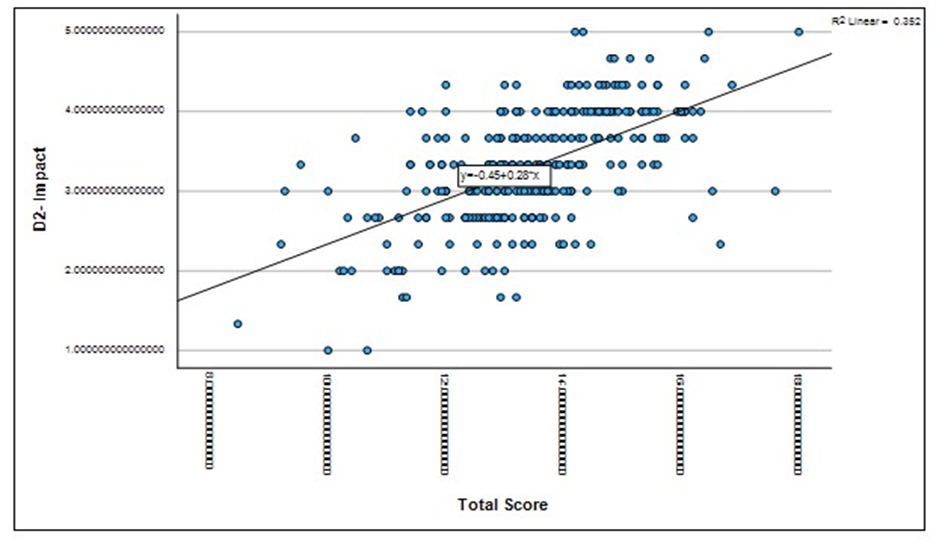

Regression analysis indicates that students' perceptions of AI in medical education are moderately predicted by their beliefs about the impact of AI on the medical profession (R² = 0.352; Figure 2), their views on the ethical implications of AI for future medical practice (R² = 0.469; Figure 3), and their preference for including AI in competency training (R² = 0.380; Figure 4).

These results highlight students' willingness to adapt to the changing landscape of healthcare delivery in this digital era and the need for preparedness regarding AI-based competency training.

Figure 2. Regression analysis: student beliefs about the impact of AI on the medical profession.

Figure 3. Regression analysis: student responses on the ethical implications of AI.

Figure 4. Regression analysis: student preferences for AI in medical training.
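The R² values quoted for Figures 2 to 4 give the proportion of variance in the outcome that the fitted line explains, R² = 1 − SS_res/SS_tot. The text does not state whether each model used one predictor or several; the sketch below assumes the simplest case, ordinary least squares with a single predictor, where R² also equals the square of Pearson's r. The function name and example values are hypothetical.

```python
def simple_linear_regression(x, y):
    """Ordinary least squares with one predictor.

    Returns (slope, intercept, r_squared), where
    r_squared = 1 - SS_res / SS_tot.
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    fitted = [intercept + slope * a for a in x]
    ss_res = sum((b - f) ** 2 for b, f in zip(y, fitted))  # unexplained variation
    ss_tot = sum((b - my) ** 2 for b in y)                  # total variation
    return slope, intercept, 1 - ss_res / ss_tot


# Hypothetical predictor and outcome scores.
print(simple_linear_regression([1, 2, 3], [1, 3, 2]))  # (0.5, 1.0, 0.25)
```

Read this way, an R² of 0.469 would mean that the predictor accounts for roughly 47% of the variation in the outcome scores, leaving the rest unexplained.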

The Kruskal-Wallis test indicated a significant difference across years of study in participants' perceptions relating to D2- Impact on the Medical Profession (p = 0.007). Students in the earlier years of study (MD1 and MD2) perceived a greater impact of AI on the medical profession (including job availability and choice of specialty) than students in the later years of medical training (MD3 and MD4).
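The Kruskal-Wallis test compares several independent groups, here the four years of study, by ranking all responses together and checking whether the groups' rank sums differ more than chance would suggest. The statistic is H = 12/(N(N + 1)) × Σ R_j²/n_j − 3(N + 1), divided by the tie correction 1 − Σ(t³ − t)/(N³ − N), where R_j is the rank sum of group j and t is the size of each tied block. The sketch computes H only; the p-value (from a chi-square distribution with k − 1 degrees of freedom) is left out, and the example groups are hypothetical.

```python
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Tie-corrected Kruskal-Wallis H for two or more independent groups.

    Undefined (division by zero) if every pooled value is identical.
    """
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    weighted = 0.0
    start = 0
    for g in groups:  # sum of squared rank sums, each divided by group size
        weighted += sum(ranks[start:start + len(g)]) ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * weighted - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    return h / (1 - ties / (n ** 3 - n))


print(round(kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9]), 3))  # 7.2
```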

Twenty-two faculty members participated in the study, of whom 27.3% (n = 6) were female and 72.7% (n = 16) were male. In total, 31.8% (n = 7) were from clinical specialties and 68.2% (n = 15) taught basic sciences.

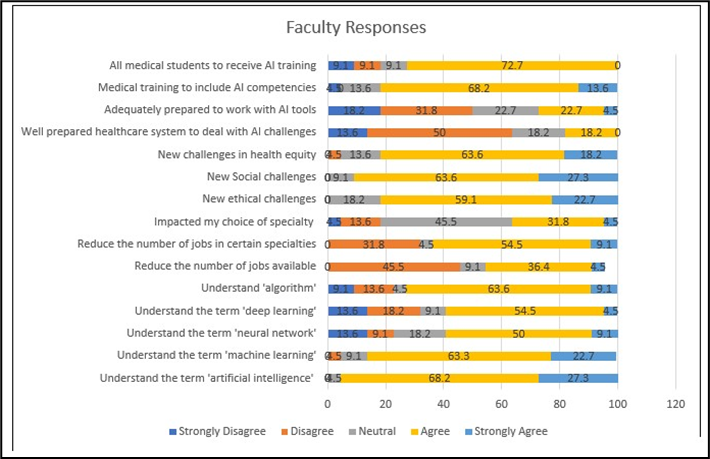

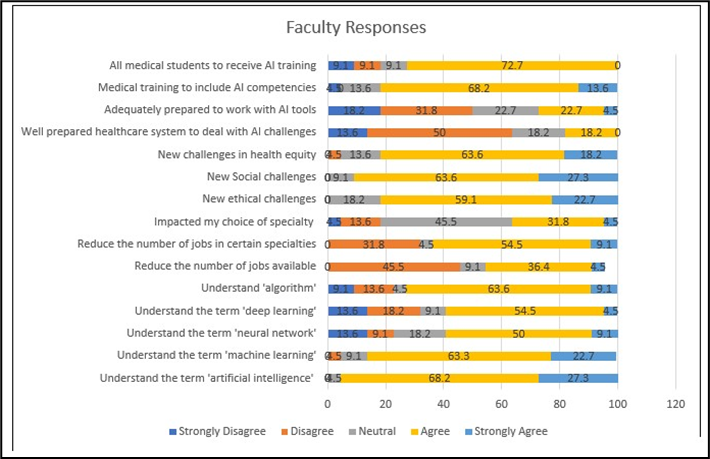

The Kolmogorov-Smirnov test indicated that the data were not normally distributed (p < 0.001) for all test items, which necessitated the use of non-parametric tests. Participants' responses to the survey items are shown in Figure 5.

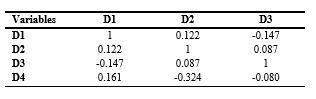

Participant responses were also analysed based on four domains: D1- Knowledge of AI, D2- Impact on the Medical Profession, D3- AI Ethics, and D4- AI and Medical Education. Correlations among domains are shown in Table 2.

Table 2. Correlations among study domains for faculty

Note: Spearman's correlation analysis was used to explore the association among study domains for faculty. No statistically significant correlations were found at p < 0.05 level.

Abbreviations: D1, Domain 1; D2, Domain 2; D3, Domain 3; D4, Domain 4; p, probability-value.

No statistically significant correlations were observed among the survey domains for faculty members. However, the correlations between knowledge of AI, perceived ethical implications, and the preference to include AI competencies in medical education were negative, although not statistically significant.

The Kruskal-Wallis test indicated no significant difference (p = 0.778) in responses among faculty from different departments.

The Wilcoxon signed-rank test indicated no significant differences between students and faculty members in responses on the survey domains or the total score (p = 0.249).
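Because students and faculty form two independent groups, it may help to recall how the two Wilcoxon tests differ: the signed-rank test is defined for paired observations (for example, the same respondents measured twice), whereas the rank-sum test, equivalent to the Mann-Whitney U test, compares two independent groups. The sketch below computes U from pooled average ranks. It illustrates that rank-sum technique on hypothetical data and is not a re-analysis of the study data; p-value computation is not included.

```python
def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def mann_whitney_u(sample_a, sample_b):
    """Mann-Whitney U for two independent samples, using average ranks for ties.

    u_a counts the pairs in which a value from sample_a exceeds one from
    sample_b (ties count 1/2); u_a + u_b always equals n_a * n_b.
    """
    n_a, n_b = len(sample_a), len(sample_b)
    ranks = average_ranks(list(sample_a) + list(sample_b))
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return u_a, u_b


# Hypothetical total scores for two independent groups.
print(mann_whitney_u([1, 2], [2, 3]))  # (0.5, 3.5)
```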

Discussion

AI has revolutionized the healthcare system, emerging as a transformative force that promises unparalleled advancements in patient care. By using algorithms modelled on human intelligence, AI enables the accurate and efficient completion of various tasks [32]. ML, a subset of AI, typically relies on algorithms that use features defined in advance, whereas deep learning is a more advanced approach that learns such features from the data, eliminating the need for pre-designed classifications and features [33]. This study aimed to analyse the understanding of these terms among future clinicians and their faculty at a medical college in Oman, and to explore the impact of AI on the medical system, particularly the ethical issues it raises and the need to include AI training in medical education. Our results are consistent with those of other surveys on AI conducted globally, in which over half of the student respondents were unfamiliar with the terminology of AI, ML, and deep learning [18,29]. The correlations between domains also suggest that participants' perceptions of AI may vary with their knowledge of the subject. Unlike students, most faculty members were familiar with most AI-related terms. A study conducted in the UK in 2020 reported that a third of its medical students had basic knowledge of AI [19]. Another study, conducted in Ontario in 2021, showed that respondents understood the term AI but were unfamiliar with machine learning or neural networks [31]. Similarly, a study in Germany found relatively low awareness of AI in medicine among medical students [12]. A study conducted in London involving 98 health professionals from the National Health Service (NHS) concluded that two-fifths of participants were not familiar with ML and DL [34]. Understanding of AI among healthcare students remains limited, highlighting an urgent need for education and exposure to these topics [35].

In Canada, research indicated that medical students in their early years of training held less favourable views of AI than their more advanced peers. This discrepancy may be attributed to the increased clinical exposure of later years, which can help dispel misconceptions about the clinical utility of AI technology [36, 37]. Our study aligns with these findings: students in their first (MD1) and second (MD2) years perceived a greater impact of AI on the medical profession than students in their third (MD3) and fourth (MD4) years. This difference may reflect limited exposure in the earlier years, coupled with a growing realization of AI's role as students progress through their training. A Nepalese study reported that students' perceptions of AI improved as they progressed to higher years of study, possibly because of exposure to social media and news [13]. A systematic review by Whyte and Hennessy stated that social media has become one of the most influential means of communication and education in today's world [38]. Nisar et al. reported that both students and teachers use social media as a platform for communication, learning, and expression of knowledge, which holds significant potential to improve functioning within educational environments [39]. A survey in the United States also found that 79.4% of medical students use social media for learning [40]. These studies suggest that, during their years at medical college, students are exposed to a wide range of information and acquire knowledge about current developments, which in turn may influence their readiness for technology-based learning.

Medical students expressed a mix of hope and concern regarding the role of AI in medicine, which may stem from their limited understanding of the technology. This sentiment has also been shared by students in the UK and by medical professionals in France and the United States [32, 41]. A key concern regarding the incorporation of AI into healthcare is the potential replacement of health professionals [29]. Our student participants expressed similar worries about the impact of AI on their choice of specialization and future employment in healthcare. This is consistent with a 2021 Canadian study of 2,167 students across 10 health professions at 18 universities [35]. According to a UK report, 35 percent of UK jobs may become automated over the next ten to twenty years [42]. It has been asserted that healthcare workers in fields such as radiology, pathology, and digital information may be at greater risk of job loss than clinicians who interact directly with patients [43]. Postgraduate students may be reluctant to choose careers in these branches because of the widespread belief that these fields are at risk. Our faculty participants, however, were less concerned about a potential reduction in future job opportunities, although they felt that AI would affect the choice of specialization.

Of our student participants, 65.7% were unsure whether the current healthcare system is adequately prepared to deal with AI-related challenges. The majority of student and faculty respondents expressed the need to include AI training in the medical curriculum, preferably at the undergraduate level. This is supported by a web-based survey in Germany, Austria, and Switzerland, in which 88% of medical students perceived the AI education in their current medical curriculum as insufficient [44]. A study conducted in Pakistan in May 2021 reported that the majority of participants agreed on the inclusion of AI in medical schools [45]. Previous research suggests a larger emphasis on AI in higher education, with most studies focusing on undergraduate rather than postgraduate students [31].

In a study by Yang et al., the Waseda-Kyoto-Kagaku Suture No. 2 Refined II (WKS-2RII) AI-assisted training system was used to enhance suturing and ligating skills. The group trained with both experts and AI showed significantly better technical performance scores than the other groups [46]. In another study, AI-assisted training notably improved medical students' simulated surgical skills compared with their baseline performance; AI feedback led to significantly higher Expertise Scores and better overall performance than expert feedback or no feedback (control), demonstrating AI's effectiveness in improving surgical skill acquisition [47]. Medical students at the Duke Institute for Health Innovation collaborate with data experts to create technologies that help doctors enhance care. At the Stanford University Center for AI in Medicine and Imaging, graduate and postgraduate students use machine learning to tackle healthcare issues. The radiology department at the University of Florida worked with a technology firm to develop computer-aided detection for mammography. Carle Illinois College of Medicine offers a course led by a scientist, a clinical scientist, and an engineer to teach new technologies. The University of Virginia Center for Engineering in Medicine places medical students in engineering labs to innovate in healthcare [48,49].

These examples illustrate how AI-related education, delivered through in-class sessions, workshops, student projects, and specialized training courses, can improve students' knowledge and skill development.

Fares et al. explain that healthcare systems in Arab countries, particularly within the Gulf Cooperation Council (GCC), are complex, as they strive to balance the quality and cost of care, technological advancement, and the preservation of humanity [50]. Some GCC countries, including Oman, have identified the integration of AI into healthcare applications as a core objective of their national vision [51, 52]. These strategies position AI as a central element of national development and growth. The effective use of AI in healthcare depends on medical experts' awareness and understanding of its applications, further emphasizing the need to include AI in the medical curriculum [53].

Given that the vast majority of healthcare students anticipate that AI will be integrated into medicine within the next five to ten years, it is particularly concerning that AI is not currently part of healthcare training. The combination of student interest, perceived impact on the future, and their self-reported lack of understanding suggests a significant gap in the current medical curriculum [54].

This has been observed internationally in previous studies. Incorporating AI into healthcare education could dispel misconceptions and fears about the technology replacing medical professionals [35]. However, integrating AI into an already crowded medical curriculum will be a challenge [54]. The earlier study conducted in Oman concluded that most participants were strongly eager to learn about and apply AI tools, provided that dedicated courses and workshops are offered and that AI is included in international guidelines and formal training. In line with the national vision, there is a pressing need to incorporate AI into healthcare training and to create dedicated courses and training programs for physicians and medical students. A regulatory, multidimensional framework outlining stakeholders' responsibilities, legislation, and logistics for future AI applications is also necessary [30].

Another challenge presented by these new technologies is the ethical issues associated with their use. The incorporation of AI in the healthcare system presents multifaceted ethical challenges that demand meticulous consideration.

The benefits of using AI in medicine are numerous; however, some factors can negatively affect the field, particularly if they go unrecognized. The perceived inadequacy of current medical education extends to AI ethics as well: the majority of our participants, both students and faculty, expressed concern about the ethical, social, and health equity challenges associated with AI.

These results underscore the clear need to incorporate AI into medical education, addressing not only its technical aspects but also the nuanced ethical considerations that students must navigate [55]. There has already been a push to increase the general ethical awareness of those developing AI technology; many prestigious universities and research centres now explicitly include ethics in their technical curricula to strengthen the critical reasoning of developers, programmers, and engineers [56, 57]. Quinn et al. have suggested specific ideas for teaching AI ethics and incorporating it into medical education [15]. Our research aimed to provide a foundational understanding of the inclusion of an AI curriculum and the associated awareness of ethical issues in Oman. This study has some limitations. It included the perspectives of medical personnel from a single institution in Oman, so the findings may not be representative of the country's healthcare system as a whole. The use of only a portion of the original survey questionnaire may have contributed to the moderate reliability value (Cronbach's alpha = 0.608). In addition, although interest in AI is growing globally and young adults are highly exposed to technology-rich environments, those with an interest in, knowledge of, or prior exposure to AI may have been more inclined to participate in the study. To improve generalizability and external validity, it is recommended that the attitudes of medical faculty and students toward AI and robotics be assessed in other institutions and medical curricula.

Conclusion

In this study, we found that many medical students lacked detailed knowledge of AI and its applications in medicine, yet they showed a positive attitude and willingness to use it in practice. More than half of the participants, including both students and faculty, believed that AI would have a significant impact on their choice of specialization, careers, and employment opportunities in the near future. Many respondents emphasized the urgent need to include AI training in the medical curriculum so that future doctors are adequately prepared for both the challenges and the opportunities that AI presents. This gap in AI education within medical curricula has also been noted in various international surveys.

Additionally, our participants expressed concerns regarding the ethical and social challenges associated with AI, highlighting the importance of combining hands-on AI training with education on its ethical implications. Careful and thorough curriculum changes are therefore essential to give students a balanced understanding of AI's potential benefits, limitations, and ethical considerations. Both AI and AI ethics must be integrated into medical education to equip students to use AI responsibly and competently in their future clinical practice.

Ethical considerations

The study was approved by the institutional Ethics and Biosafety Committee (NU/COMHS/EBS0032/2022).

Artificial intelligence utilization for article writing

No generative AI tools were used in the preparation, writing, or editing of this article.

Acknowledgment

The authors would like to thank Dr. Nishila Mehta from the University of Toronto, Canada [31], for granting permission to use the questionnaire developed by the Toronto research team in this study.

Conflict of interest statement

The authors declare no conflict of interest.

Author contributions

Conceptualization, MAS; methodology, MAS, MVH, NHA, SKR, LNS, DAM; formal analysis, MAS; writing—original draft preparation, MVH, MAS; writing review and editing, MVH, MAS; project administration, MAS. All authors have critically reviewed and approved the final draft and are responsible for the content and similarity index of the manuscript.

Funding

This research received no external funding.

Data availability statement

All information is available in the article.

Background & Objective: This study aims to explore the perceptions of undergraduate medical students and faculty members regarding their knowledge of Artificial Intelligence (AI), the integration of AI into medical education, and the ethical issues associated with its use.

Materials & Methods: A cross-sectional survey was conducted among undergraduate medical students and faculty of the College of Medicine and Health Sciences, National University, Oman. The study used a questionnaire previously validated by authors of a Canadian study.

Results: A total of 271 medical students and 22 faculty members participated in the study. The majority of students were unfamiliar with the technical terms of AI, and 56.8% believed that AI would affect their choice of specialization in the future. Many students and faculty members expressed concerns about the ethical and social challenges posed by AI and emphasized the need to incorporate AI and ethics into medical education in Oman to better train and prepare medical professionals for an AI-powered healthcare system.

Conclusion: Medical students in Oman appear enthusiastic about AI, a technology currently in the spotlight. However, they lack adequate knowledge of AI, which limits their understanding and use of the technology. Both undergraduate students and faculty members recognize the growing importance of AI in healthcare and support including AI and ethics education in the medical curriculum. Incorporating AI-related topics into medical education would therefore be a promising start.

Introduction

Artificial Intelligence (AI) simulates human intelligence to solve problems and perform rapid calculations and evaluations based on previously assessed data [1]. It can perform automated tasks without every step of the process being explicitly programmed by a human [2]. Machine Learning (ML), a subset of AI, uses algorithms that identify patterns in data without explicit programming. Deep Learning (DL) is a branch of ML that uses artificial neural networks to learn from data and make predictions or judgments; it is especially helpful for tasks such as audio and image recognition [3]. AI has increasingly spread into numerous fields, including agriculture, manufacturing, fashion, and sports, with healthcare and the medical system among the most significant [4]. It has emerged as a powerful tool in the medical field, revolutionizing the health industry and enhancing patient care. In recent years, the nexus between AI and medicine has aroused both excitement and fear in the medical community. Thus far, AI has affected a range of health specialties such as radiology and neurology [5-7], pathology [8], dermatology [9], surgery [10], and cardiology [11]. It has supported a wide range of activities, including disease diagnosis, clinical decision-making, and drug development, and is also being used to recommend personalized treatment for patients with comorbid conditions [12, 13]. ML has also been used to analyse neuroimaging data, aiding in the early detection, prognosis, and treatment of brain disorders [14].
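As a concrete illustration of learning a pattern from data rather than following hand-written rules, the sketch below trains a perceptron, the single artificial neuron from which neural networks are built, on four hypothetical labelled examples. It is a minimal teaching sketch under stated assumptions: the features, labels, learning rate, and function names are invented for illustration and are not drawn from the study or from any clinical system.

```python
"""Minimal perceptron sketch: the decision rule is learned from labelled
examples rather than written by hand. All data here are hypothetical."""
from typing import List, Tuple

Sample = Tuple[List[float], int]  # (feature vector, label 0 or 1)


def predict(weights: List[float], bias: float, features: List[float]) -> int:
    """Return 1 if the weighted sum of features plus bias is positive, else 0."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples: List[Sample], epochs: int = 20,
                     learning_rate: float = 1.0) -> Tuple[List[float], float]:
    """Fit weights and bias with the classic perceptron update rule."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in samples:
            error = label - predict(weights, bias, features)  # -1, 0 or +1
            # Parameters change only when the current prediction is wrong.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical toy task: label a case 1 only when both binary findings are
# present. No rule saying "both must be present" is written anywhere.
training_data: List[Sample] = [
    ([0.0, 0.0], 0),
    ([0.0, 1.0], 0),
    ([1.0, 0.0], 0),
    ([1.0, 1.0], 1),
]
weights, bias = train_perceptron(training_data)
predictions = [predict(weights, bias, f) for f, _ in training_data]
```

The perceptron finds a separating rule here only because the toy classes are linearly separable; deep learning stacks many such units with non-linear activations and trains them by gradient descent, which this sketch does not attempt.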

Requisite knowledge of AI is crucial to prevent its misuse and ensure its effective deployment [15]. Healthcare leaders and future clinicians therefore need to be aware of the potential applications and limitations of AI and ML. Physicians who lack this knowledge may deliver substandard results for their patients because they cannot select these technologies and incorporate them appropriately into patient care [16].

Integrating AI-based competencies into the undergraduate curriculum is a key route for educating and training medical professionals. This has largely not been addressed because the curriculum is constantly expanding and there is a lack of faculty qualified to teach new subjects effectively [17].

The paths of medical education and AI are expected to intersect in the foreseeable future. To adequately prepare students for this era, medical school faculty members need to embrace these technological innovations with open minds. Pinto Dos Santos et al. assessed the views of 263 medical students from three major universities in Germany and found that the majority agreed that AI should be included in medical training [18]. Sit et al. found that most respondents believed AI would be crucial in healthcare and that learning about AI would benefit their careers [19]. Given the need to familiarize medical students with AI and ML, various institutions worldwide now offer courses on these topics [20].

AI holds great promise for transforming the healthcare sector, but it also brings a myriad of ethical considerations that require careful navigation to ensure patient safety, privacy, and equity. Concerns surrounding AI have sparked a rush toward "AI Ethics," focusing on how the technology can be developed and implemented ethically [21-23].

The World Health Organization (WHO) believes AI can greatly benefit public health and medicine but stresses the need to address ethical challenges first [2]. AI faces several challenges, including patient privacy, data protection, medical consultation, social gaps, empathy, informed consent, and the impact of automation on employment [24-26].

Hence, it is highly important to consider the ethical implications of AI before it is integrated into practice [27, 28]. Studies have also shown that AI advancements can discourage medical students from pursuing certain specializations for fear of being replaced [29].

A study conducted at Sultan Qaboos University in Oman reports that the country's healthcare system underwent a digital transformation at the primary, secondary, and tertiary levels, becoming fully digitized between 2008 and 2009. The authors also note ongoing discussions about introducing AI-oriented learning objectives into the undergraduate and postgraduate medical curricula in Oman [30].

As AI transforms medical practice, attention is turning to preparing the workforce and understanding the views of physicians and students [12]. Understanding medical students' outlook on the use of AI in medicine is therefore particularly important at present. This study aims to understand the knowledge and ethical concerns of medical faculty and students in Oman regarding AI in medicine, and their perceptions of integrating AI training into the medical curriculum. These aspects of AI in healthcare have been analysed only to a limited extent in Oman, and this study seeks to address this gap in the literature.

Materials & Methods

Design and setting(s)

This cross-sectional study was carried out at the College of Medicine and Health Sciences, National University of Science and Technology (COMHS, NU), Oman, from September 1, 2022, to May 31, 2023.

Participants and sampling

The study was conducted among medical students from Doctor of Medicine Year 1 (MD1) to Doctor of Medicine Year 4 (MD4) and among faculty members. All students in these preclinical years (MD1 to MD4) were invited to participate via in-class announcements and emails.

This was an observational study that respected the anonymity and autonomy of each participant. To ensure confidentiality, no email addresses or names were collected, and the privacy of each participant was adequately protected. Informed consent was obtained from all participants before they completed the survey.

Inclusion criteria: All the preclinical students and the faculty of the college were included.

Exclusion criteria: The clinical students, those who did not consent to participate, and those who did not complete the entire survey were excluded from the study.

Convenience sampling was employed for the study. The estimated sample size for the study was 235. Out of a total of around 600 students registered in the preclinical phase of the MD program, 271 students consented to participate in the study.

The response rate for student participants was 45%. All faculty members at COMHS, NU were also invited in person to participate in the study; the response rate for faculty members was 29%.
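The reported response rates can be checked with simple arithmetic. The sketch below assumes the approximate student denominator of 600 given in the text; because the number of faculty members invited is not reported, it only lists which pool sizes would be consistent with 22 respondents and a rate that rounds to 29%. The helper `response_rate` is a hypothetical name used for illustration.

```python
"""Response-rate arithmetic for the figures reported in the text."""
from typing import List


def response_rate(respondents: int, invited: int) -> float:
    """Percentage of invited people who took part."""
    if invited <= 0:
        raise ValueError("invited must be a positive count")
    return 100.0 * respondents / invited


# Students: 271 respondents out of roughly 600 registered preclinical students.
student_rate = response_rate(271, 600)

# Faculty: 22 respondents and a 29% rate are reported, but not the number
# invited. List the pool sizes whose rate would round to the reported 29%.
implied_faculty_pools: List[int] = [
    n for n in range(22, 201) if round(response_rate(22, n)) == 29
]
```

Under these assumptions the student rate works out to about 45.2%, and the faculty figures are consistent with roughly 75 to 77 faculty members having been invited; the article itself does not state that number.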

Tools/Instruments

The study instrument was the survey developed and validated by the authors of a study conducted in Toronto, Canada, titled 'Medical Artificial Intelligence Readiness Scale for Medical Students' [31]. A version of the same questionnaire that had been adapted and used in the Asian context [13] was used in this study. This questionnaire, which has a Cronbach's alpha of 0.60 (indicating moderate reliability) and established face validity, was used after obtaining permission from the authors. The original questionnaire [13] included 28 items across eight sections; in line with the objectives of the current study, the authors retained 16 items across four sections. Face validity was established. The authors reviewed the questionnaire's terminology and modified it where applicable to suit the local geographical and cultural context. Pilot testing was carried out with around 10% of the estimated sample size (20 respondents).
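Cronbach's alpha, used here to summarize internal consistency, compares the variance of the individual items with the variance of respondents' total scores: alpha = k / (k - 1) x (1 - sum of item variances / variance of total scores), where k is the number of items. The sketch below is a minimal implementation of that textbook formula on hypothetical scores; it is not the SPSS routine used in the study, and the item matrices are invented for illustration.

```python
"""Cronbach's alpha from an item-by-respondent score matrix (hypothetical data)."""
from statistics import variance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """items[i][r] is respondent r's score on item i.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals)
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs a score from every respondent")
    totals = [sum(item[r] for item in items) for r in range(n)]
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Two items that always move together are perfectly consistent (alpha = 1);
# two uncorrelated items share no variance (alpha = 0).
consistent = cronbach_alpha([[1, 2, 3, 4, 5], [1, 2, 3, 4, 5]])
unrelated = cronbach_alpha([[1, 2, 1, 2], [1, 1, 2, 2]])
```

Because the item variances and the total-score variance are all computed with the same (n - 1) denominator, the choice between sample and population variance cancels out in the ratio.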

Data collection methods

The study data were collected through two survey methods – online and paper-and-pencil.

The questionnaire covered four domains: knowledge of AI, the impact of AI on the medical profession, AI ethics, and the need to include AI in medical education.

Data analysis

Data were analysed using IBM SPSS Statistics (Statistical Package for the Social Sciences) version 29. Descriptive statistics were used to summarize participants' responses to survey items, and reliability analysis using Cronbach's alpha was carried out to assess internal consistency. Normality was tested with the Kolmogorov-Smirnov test. Non-parametric tests such as the Kruskal-Wallis and Wilcoxon signed-rank tests were used to analyse differences, and Spearman's rank correlation was used to assess associations. Linear regression analysis was also carried out to explore the strength of association and to investigate factors that may predict participants' perceptions of AI in medical education.

Results

Survey results are discussed in two sections: students and faculty.

Two hundred seventy-one medical students participated in the study, of whom 88.6% (n = 240) were female and 11.4% (n = 31) were male. The mean age of participants was 20.60 years (SD = 1.89).

All students were from the preclinical years: 29.5% (n = 80) were from MD1, 10.7% (n = 29) from MD2, 34.3% (n = 93) from MD3, and 25.5% (n = 69) from MD4. Cronbach's alpha for the survey questionnaire was 0.608, indicating moderate reliability. The Kolmogorov-Smirnov test indicated that the data were not normally distributed for any test item (p < 0.001), so non-parametric tests were used for further analysis. Participants' responses to the survey items are shown in Figure 1.

Figure 1. Student responses to survey items.
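The Kolmogorov-Smirnov decision reported above rests on a single statistic, D: the largest vertical gap between the sample's empirical cumulative distribution and a normal curve with the sample's own mean and standard deviation. The sketch below computes D for two hypothetical samples; the data and function names are assumptions for illustration. It deliberately stops short of a p-value: when the mean and SD are estimated from the same sample, the standard Kolmogorov-Smirnov table no longer applies and a Lilliefors-type correction is needed, which is not reproduced here.

```python
"""One-sample Kolmogorov-Smirnov distance from a fitted normal (hypothetical data)."""
import math
from statistics import mean, stdev
from typing import Sequence


def normal_cdf(x: float, mu: float, sigma: float) -> float:
    """Cumulative probability of x under Normal(mu, sigma)."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def ks_distance_from_normal(data: Sequence[float]) -> float:
    """Largest gap between the empirical CDF and a normal CDF whose mean and
    SD are estimated from the same sample (the Lilliefors setting)."""
    xs = sorted(data)
    n = len(xs)
    mu, sigma = mean(xs), stdev(xs)
    if sigma == 0:
        raise ValueError("all values are identical; normality is undefined")
    d = 0.0
    for i, x in enumerate(xs):
        f = normal_cdf(x, mu, sigma)
        # The empirical CDF steps from i/n to (i + 1)/n at x; check both sides.
        # With tied values, the outermost tied positions give the true gap.
        d = max(d, (i + 1) / n - f, f - i / n)
    return d


symmetric = ks_distance_from_normal([1, 2, 3, 4, 5])
# Ceiling-heavy Likert-type answers.
skewed = ks_distance_from_normal([4, 4, 5, 5, 5, 5, 5, 5, 5, 5])
```

The ceiling-bound responses give a much larger D (about 0.48) than the evenly spread ones (about 0.14), which is one reason Likert-type survey items often fail normality tests.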

Students reported unfamiliarity with the technical terms commonly associated with artificial intelligence, including machine learning (25.1%), neural network (45.7%), deep learning (35.4%), and algorithms (35.8%). Just over half of the participants (51.6%) felt that AI would reduce the number of jobs available to them; 56.8% felt this would apply especially to certain specialties, and 36.9% reported that AI had also affected their choice of specialization.

Students also believed that AI in medicine would raise new challenges in the ethical (75.7%), social (73.8%), and health equity (66.8%) domains. A majority of participants (65.7%) were unsure whether the current healthcare system was adequately prepared to deal with AI-related challenges. Participants also felt that AI competencies should be included in medical training (63.5%) and that all medical students should receive AI-related training (64.2%). A majority of participants (55.4%) felt that training in AI competencies should begin at the undergraduate level.

Participant responses were also analysed based on four domains: D1- Knowledge of AI, D2- Impact on the Medical Profession, D3- AI Ethics, and D4- AI and Medical Education. Correlations among domains are shown in Table 1.

Table 1. Correlations among study domains for students

Note: Spearman's correlation analysis was used to explore the association among study domains for students. ** p < 0.01.

Abbreviations: D1, Domain 1; D2, Domain 2; D3, Domain 3; D4, Domain 4; p, probability-value.

Significant correlations are seen. Knowledge of AI is strongly related to the preference for AI competencies to be included in medical education.

Understanding the various aspects of artificial intelligence, machine learning, and deep learning is strongly associated with participants' inclination towards AI training. Perceptions of the ethical implications of AI applications are also closely related to professional expectations and academic competencies.

Awareness of potential risks in the ethical, social, and healthcare domains is associated with greater openness among participants to the inclusion of AI-based competency training.

In addition, a preference for including AI competencies as part of undergraduate medical education is related to participants' knowledge of AI and perceived ethical implications in future medical practice.
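Spearman's correlation, used for Table 1, is the ordinary Pearson correlation applied to ranks, with tied scores (common in Likert-type data) sharing the average of the ranks they occupy. The sketch below shows that construction on hypothetical domain scores; the variable names and values are invented and do not correspond to the study's data, and it computes rho only, without the accompanying significance test.

```python
"""Spearman's rank correlation with average ranks for ties (hypothetical data)."""
from collections import Counter
from statistics import mean
from typing import Dict, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    counts = Counter(values)
    first_position: Dict[float, int] = {}
    for pos, v in enumerate(sorted(values)):
        first_position.setdefault(v, pos)
    # Ranks first+1 .. first+count average to first + (count + 1) / 2.
    return [first_position[v] + (counts[v] + 1) / 2 for v in values]


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation computed on the ranks of x and y."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical domain scores for five respondents (note the tie at 3 / 12).
knowledge = [2, 3, 3, 4, 5]
education_preference = [10, 12, 12, 15, 20]
rho = spearman_rho(knowledge, education_preference)
```

Because only rank order matters, any monotonic relationship, linear or not, yields rho = 1; for example, `spearman_rho([1, 2, 3, 4, 5], [1, 4, 9, 16, 25])` also returns 1.0.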

Regression analysis indicates that students' perceptions of AI in medical education are moderately predicted by their beliefs about the impact of AI on the medical profession (R² = 0.352; Figure 2), by the perceived ethical implications of AI for future medical practice (R² = 0.469; Figure 3), and by their preference for including AI in competency training (R² = 0.380; Figure 4).

These results highlight students' willingness to adapt to the changing landscape of healthcare delivery in this digital era and the need for preparedness regarding AI-based competency training.

Figure 2. Regression analysis: student beliefs about the impact of AI on the medical profession.

Figure 3. Regression analysis: student responses on the ethical implications of AI.

Figure 4. Regression analysis: student preferences for AI in medical training.
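R² is the proportion of variance in the outcome that the fitted linear model accounts for, so R² = 0.352 would mean that roughly 35% of the variance in students' perceptions is explained by the corresponding predictor. The text does not specify the model beyond "linear regression", so the sketch below assumes the simplest case, one predictor fitted by ordinary least squares, on hypothetical scores; the function name and data are illustrative assumptions.

```python
"""Ordinary least squares with one predictor, reporting R^2 (hypothetical data)."""
from statistics import mean
from typing import Sequence, Tuple


def fit_simple_ols(x: Sequence[float],
                   y: Sequence[float]) -> Tuple[float, float, float]:
    """Return (slope, intercept, r_squared) for y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("predictor has no variance")
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    # R^2 = 1 - (unexplained variation / total variation in y)
    ss_residual = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_total = sum((b - my) ** 2 for b in y)
    return slope, intercept, 1 - ss_residual / ss_total


# Hypothetical predictor scores (e.g. an ethics-domain score) and outcomes.
slope, intercept, r_squared = fit_simple_ols([1, 2, 3, 4], [1, 3, 2, 4])
```

For these hypothetical points the slope is 0.8, the intercept 0.5, and R² is 0.64, which equals the square of the Pearson correlation (r = 0.8); that identity holds only when there is a single predictor.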

The Kruskal-Wallis test indicated a significant difference among students in perceptions relating to D2- Impact on the Medical Profession (p = 0.007). Further analysis suggests that students in the earlier years of study (MD1 and MD2) perceived a greater impact of AI on the medical profession, including the availability of jobs and the choice of specialty, than students in the later years of medical training (MD3 and MD4).
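The Kruskal-Wallis test compares several groups (here, the four study years) using ranks of the pooled responses: H = 12 / (N(N + 1)) x sum over groups of (R_g^2 / n_g) - 3(N + 1), divided by the tie correction 1 - sum(t^3 - t) / (N^3 - N), where R_g is a group's rank sum, n_g its size, and t the size of each set of tied values. H is then referred to a chi-square distribution with (groups - 1) degrees of freedom. The sketch below implements that formula on hypothetical scores; it illustrates the statistic rather than reproducing the SPSS procedure, and the 7.815 cut-off in the code is the standard 5% chi-square critical value for 3 degrees of freedom.

```python
"""Kruskal-Wallis H statistic with the usual tie correction (hypothetical data)."""
from collections import Counter
from typing import Dict, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    counts = Counter(values)
    first_position: Dict[float, int] = {}
    for pos, v in enumerate(sorted(values)):
        first_position.setdefault(v, pos)
    return [first_position[v] + (counts[v] + 1) / 2 for v in values]


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Tie-corrected H = [12/(N(N+1)) * sum(R_g^2/n_g) - 3(N+1)] / correction."""
    pooled = [v for g in groups for v in g]
    n_total = len(pooled)
    ranks = average_ranks(pooled)
    weighted = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])  # ranks of this group
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n_total * (n_total + 1)) * weighted - 3 * (n_total + 1)
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - tie_term / (n_total ** 3 - n_total)
    if correction == 0:
        raise ValueError("all observations are tied")
    return h / correction


# 5% critical value of chi-square with 3 df (four groups, e.g. MD1-MD4).
CHI2_DF3_CRITICAL_05 = 7.815
h_separated = kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]])
h_interleaved = kruskal_wallis_h([[1, 5, 9], [2, 6, 10], [3, 7, 11], [4, 8, 12]])
```

Fully separated groups give H of about 10.38, above the 7.815 cut-off, whereas interleaved groups give about 1.15. A significant H indicates only that at least one group differs, so pairwise follow-up comparisons are needed to locate the difference.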

Twenty-two faculty members participated in the study, of whom 27.3% (n = 6) were female and 72.7% (n = 16) were male; 31.8% (n = 7) were from clinical specialties and 68.2% (n = 15) taught basic sciences.

The Kolmogorov-Smirnov test indicated that the data were not normally distributed (p < 0.001) for all test items, which necessitated the use of non-parametric tests. Participants' responses to the survey items are shown in Figure 5.

Figure 5. Faculty responses to survey items.

A majority of faculty members were familiar with the various terms associated with artificial intelligence, including machine learning (86%), neural network (59.1%), deep learning (59%), and algorithms (72.7%). Half of the faculty members (50%) believed that AI would not reduce the number of available jobs, although 63.6% felt that certain specialities might be affected. Faculty participants thought that AI in medicine would raise new challenges in the ethical (81.8%), social (90.9%), and health equity (81.8%) domains. In addition, 63.6% of faculty members felt that the present healthcare system is not fully equipped to cope with AI-related challenges. A majority of faculty members (81.8%) felt that medical training should include training on AI competencies and that this training should begin at the undergraduate level (77.3%). Participant responses were also analysed based on four domains: D1- Knowledge of AI, D2- Impact on the Medical Profession, D3- AI Ethics, and D4- AI and Medical Education. Correlations among domains are shown in Table 2.

Table 2. Correlations among study domains for faculty

Note: Spearman's correlation analysis was used to explore the association among study domains for faculty. No statistically significant correlations were found at p < 0.05 level.

Abbreviations: D1, Domain 1; D2, Domain 2; D3, Domain 3; D4, Domain 4; p, probability-value.

No significant correlations were found among the survey domains for faculty members, although a non-significant negative relationship was observed between knowledge of AI, perceived ethical implications, and the preference to include AI competencies in medical education.

The Kruskal-Wallis test indicated no significant difference in responses among faculty from different departments (p = 0.778).

The Wilcoxon Signed Ranks test indicated no significant differences between students and faculty members in responses on the survey domains or the total score (p = 0.249).
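For comparing two independent groups such as students and faculty, the rank-based test usually applied is the Wilcoxon rank-sum test, which is equivalent to the Mann-Whitney U test; the signed-rank variant is defined for paired observations, such as the same respondents measured twice. The sketch below computes U and a two-sided p-value from the tie-corrected normal approximation on hypothetical data. The function name and data are illustrative assumptions, and with small groups (the faculty sample here had 22 members) an exact or permutation p-value may be preferable.

```python
"""Mann-Whitney U (Wilcoxon rank-sum) with a normal approximation (hypothetical data)."""
import math
from collections import Counter
from typing import Sequence, Tuple


def mann_whitney_u(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Return (U for group a, two-sided p-value) using the tie-corrected
    normal approximation without continuity correction."""
    n1, n2 = len(a), len(b)
    # U counts the (a, b) pairs in which the a-value is larger; ties count half.
    u = sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)
    n = n1 + n2
    tie_term = sum(t ** 3 - t for t in Counter(list(a) + list(b)).values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:
        raise ValueError("all observations are tied")
    z = (u - n1 * n2 / 2) / math.sqrt(variance)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return u, p_two_sided


u_stat, p_value = mann_whitney_u([1, 2, 3], [4, 5, 6])
```

Two completely separated groups of three give U = 0 and p of about 0.05 under this approximation, while identical groups give U = n1 x n2 / 2 and p = 1.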

Discussion

AI has revolutionized the healthcare system, emerging as a transformative force that promises unparalleled advancements in patient care. By using algorithms modelled on human intelligence, AI enables various tasks to be completed accurately and efficiently [32]. ML, a subset of AI, typically relies on algorithms that work with pre-designed classifications and features, whereas deep learning is a more advanced approach that learns such features from the data and so eliminates the need to design them in advance [33]. This study analysed how well future clinicians and their training faculty at a medical college in Oman understand these terms. It also explored the perceived impact of AI on the medical system, particularly the ethical issues it raises, and the need to include AI training in medical education.

The results of this study are consistent with other surveys on AI conducted worldwide, which found that over half of student respondents were unfamiliar with the terminology of AI, ML, and deep learning [18, 29]. The correlations between domains also suggest that students' perceptions of AI may vary with their knowledge of the subject. Unlike students, most faculty members were familiar with the majority of AI-related terms. A UK study from 2020 reported that a third of its medical students had basic knowledge of AI [19]. A similar 2021 study in Ontario showed that respondents understood the term AI but were unfamiliar with machine learning and neural networks [31]. Likewise, a study in Germany found relatively low awareness of AI in medicine among medical students [12]. A study in London involving 98 health professionals from the National Health Service (NHS) concluded that two-fifths of participants were not familiar with ML and DL [34]. Understanding of AI among healthcare students is limited, highlighting an urgent need for education and exposure to these topics [35].

In Canada, research indicated that medical students in their early years of training held less favourable views of AI than their more advanced peers. This discrepancy may be attributed to the greater clinical exposure of later years, which can help dispel misconceptions about the clinical utility of AI technology [36, 37]. Our study aligns with these findings: students in their first (MD1) and second (MD2) years perceived a greater impact of AI on the medical profession than students in their third (MD3) and fourth (MD4) years. This difference may reflect limited exposure in the earlier years, together with a growing appreciation of AI's role as students progress through their training. A Nepalese study found that students' perceptions of AI improved as they progressed to higher years of study, possibly owing to exposure to social media and news [13]. A systematic review by Whyte and Hennessy stated that social media has become one of the most influential means of communication and education in today's world [38]. Nisar et al. report that both students and teachers use social media as a platform for communication, learning, and the expression of knowledge, which holds significant potential to improve how educational environments function [39]. A survey in the United States also found that 79.4% of medical students use social media for learning [40]. These studies suggest that, during their years in medical college, students are exposed to a wide range of information and acquire knowledge about current developments. This, in turn, may influence their readiness for technology-based learning.

Medical students expressed a mix of hope and concern regarding the role of AI in medicine, which may stem from their limited understanding of the technology. This sentiment has also been shared by students in the UK, as well as by medical professionals in France and the United States [32, 41]. A major concern about incorporating AI into healthcare is the potential replacement of health professionals [29]. Similar worries were reflected among our student participants, who were concerned about the impact of AI on their choice of specialization and future employment in healthcare. This is consistent with a 2021 Canadian study of 2167 students across 10 health professions at 18 universities [35]. According to a UK report, 35% of UK jobs may become automated over the next ten to twenty years [42]. It has been asserted that healthcare workers in fields such as radiology, pathology, and digital information may be at greater risk of job loss than professionals on the clinical side who interact directly with patients [43]. Postgraduate students may therefore be reluctant to choose careers in these branches because of the pervasive belief that these fields are at risk. In contrast, faculty members were less concerned about a potential reduction in future job opportunities, although they felt that AI would affect the choice of specialization.

Of our medical student participants, 65.7% were unsure whether the current healthcare system was adequately prepared to deal with AI-related challenges. Most student and faculty respondents expressed the need to include AI training in the medical curriculum, preferably at the undergraduate level. This is supported by a web-based survey in Germany, Austria, and Switzerland, in which 88% of medical students perceived the AI education in their current medical curriculum as insufficient [44]. A study in Pakistan in May 2021 found that most participants agreed with the inclusion of AI in medical schools [45]. Previous research on AI in higher education has focused mostly on undergraduate rather than postgraduate students [31].

In a study by Yang et al., the Waseda-Kyotokagaku Suture No. 2 Refined II (WKS-2RII) AI-assisted training system was used to enhance suturing and ligating skills; the group trained with both experts and AI achieved significantly better technical performance scores than the other groups [46]. In another study, AI-assisted training notably improved medical students' simulated surgical skills relative to their baseline performance: AI feedback led to significantly higher Expertise Scores and better overall performance than expert feedback or the control group, demonstrating AI's effectiveness in improving surgical skill acquisition [47]. Medical students at the Duke Institute for Health Innovation collaborate with data experts to create care-enhancing technologies for doctors. At the Stanford University Center for Artificial Intelligence in Medicine and Imaging, graduate and postgraduate students use machine learning to tackle healthcare problems. The radiology department at the University of Florida worked with a technology firm to develop computer-aided detection for mammography. Carle Illinois College of Medicine offers a course led by a scientist, a clinical scientist, and an engineer to teach new technologies, and the University of Virginia Center for Engineering in Medicine places medical students in engineering labs to innovate in healthcare [48, 49].

These findings and initiatives illustrate how AI-assisted education can improve students' knowledge and skill development through in-class sessions, workshops, student projects, and specialized training courses.

Fares et al. explain that healthcare systems in Arab countries, particularly within the Gulf Cooperation Council (GCC), are complex because they strive to balance the quality and cost of care, technological advancement, and the preservation of humanity [50]. Some GCC countries, including Oman, have identified the integration of AI into healthcare applications as a core objective of their national vision [51, 52]. These strategies position AI as a central element in each country's development and growth. The effective use of AI in healthcare depends on medical experts' awareness and understanding of its applications, further emphasizing the need to include AI in the medical curriculum [53].

Given that the vast majority of healthcare students anticipate that AI will be integrated into medicine within the next five to ten years, it is particularly concerning that AI is not currently part of healthcare training. The combination of student interest, perceived impact on the future, and their self-reported lack of understanding suggests a significant gap in the current medical curriculum [54].

This has been observed internationally in previous studies. Incorporating AI into healthcare education could dispel misconceptions and fears about the technology replacing medical professionals [35]. However, integrating AI into an already crowded medical curriculum will be a challenge [54]. The earlier study conducted in Oman concluded that most participants were eager to learn about and apply AI tools, provided that dedicated courses and workshops are offered and that AI is included in international guidelines and formal training. In line with the national vision, there is a pressing need to incorporate AI into healthcare training and to create dedicated courses and training programs for physicians and medical students. A regulatory, multidimensional framework outlining stakeholders' responsibilities, legislation, and logistics for future AI applications is also necessary [30].

Another challenge presented by these new technologies is the ethical issues associated with their use. The incorporation of AI in the healthcare system presents multifaceted ethical challenges that demand meticulous consideration.

The benefits of using AI in medicine are numerous; however, some factors can negatively affect the field, particularly if we remain unaware of them. The perceived inadequacy of current medical education extends to AI ethics as well: most of our participants, both students and faculty, expressed concern about the ethical, social, and health equity challenges associated with AI.

These results underscore the clear need to incorporate AI into medical education, addressing not only technical aspects but also the nuanced ethical considerations that students must navigate [55]. There has already been a push to increase the general ethical awareness of those developing AI technology; many prestigious universities and research centres now explicitly include ethics in their technical curricula to increase developers', programmers', and engineers' capacities for critical reasoning [56, 57]. The publication by Quinn et al has suggested some specific ideas for the instruction and incorporation of AI ethics into medical education [15]. Our research aimed to provide a foundational understanding of the inclusion of an AI curriculum and the associated awareness of ethical issues in Oman. This study may have some limitations. It included perspectives from the medical personnel of a single institution in Oman, and the findings may not be representative of the entire country's healthcare system. As only a portion of the original survey questionnaire was used in the current study, this may have contributed to the moderate reliability value of 0.06. Though global trends related to AI are increasing and young adults are greatly exposed to tech-rich environments, those who have an interest, knowledge, and prior exposure to AI may have been more inclined to participate in the study. To facilitate generalizability and to improve external validity, it is recommended to assess the attitude of medical faculty and students toward AI and robotics in other institutions and medical curricula.

Conclusion

In this study, we found that many medical students lacked detailed knowledge of AI and its applications in medicine. Yet, they showed a positive attitude and willingness to use it practically. More than half of the participants, including both students and faculty, believed that AI would have a significant impact on their choice of specialization, careers, and employment opportunities shortly. A large number of respondents emphasized the urgent need to include AI training in the medical curriculum to ensure that future doctors are adequately prepared to face both the challenges and opportunities that AI presents. This deficiency in AI education within medical curricula has also been noted in various international surveys.

Additionally, our participants expressed concerns regarding the ethical and social challenges associated with AI, highlighting the importance of incorporating hands-on AI training alongside education on its ethical implications. Therefore, careful and thorough curriculum changes are essential to provide students with a balanced understanding of AI's potential benefits, limitations, and ethical considerations. Both AI and AI ethics must be combined into medical education to equip students to use AI responsibly and competently in their future clinical practice.

Ethical considerations

The study was approved by the institutional Ethics and Biosafety Committee (NU/COMHS/EBS0032/2022).

Artificial intelligence utilization for article writing

No generative AI tools were used in the preparation, writing, or editing of this article

Acknowledgment

The authors would like to thank Dr. Nishila Mehta from the University of Toronto, Canada [30], for providing permission to use the questionnaire prepared by them for the study.

Conflict of interest statement

The authors declare no conflict of interest.

Author contributions

Conceptualization, MAS; methodology, MAS, MVH, NHA, SKR, LNS, DAM; formal analysis, MAS; writing (original draft preparation), MVH, MAS; writing (review and editing), MVH, MAS; project administration, MAS. All authors have critically reviewed and approved the final draft and are responsible for the content and similarity index of the manuscript.

Funding

This research received no external funding.

Data availability statement

All information is available in the article.

Article Type: Original Research

Subject:

Medical Education

Received: 2025/01/12 | Accepted: 2025/08/31 | Published: 2025/10/1

References

1. Amisha, Malik P, Pathania M, Rathaur VK. Overview of artificial intelligence in medicine. J Family Med Prim Care. 2019;8(7):2328-2331. [DOI:10.4103/jfmpc.jfmpc_440_19]

2. World Health Organization. Ethics and governance of artificial intelligence for health: WHO guidance. Geneva: World Health Organization; 2021.

3. Janiesch C, Zschech P, Heinrich K. Machine learning and deep learning. Electron Mark. 2021;31(4):685-695. [DOI:10.1007/s12525-021-00475-2]

4. Farhud DD, Zokaei S. Ethical issues of artificial intelligence in medicine and healthcare. Iran J Public Health. 2021;50(11):2109-2114. [DOI:10.18502/ijph.v50i11.7600]

5. Chilamkurthy S, Ghosh R, Tanamala S, Biviji M, Campeau NG, Venugopal VK, et al. Deep learning algorithms for detection of critical findings in head CT scans: a retrospective study. Lancet. 2018;392(10162):2388-2396. [DOI:10.1016/S0140-6736(18)31645-3]

6. Singh R, Kalra MK, Nitiwarangkul C, Patti JA, Homayounieh F, Padole A, et al. Deep learning in chest radiography: detection of findings and presence of change. PLoS One. 2018;13(7):e0204155. [DOI:10.1371/journal.pone.0204155]

7. Titano JJ, Badgeley M, Schefflein J, Pain M, Su A, Cai M, et al. Automated deep-neural-network surveillance of cranial images for acute neurologic events. Nat Med. 2018;24(10):1337-1341. [DOI:10.1038/s41591-018-0147-y]

8. Capper D, Jones DT, Sill M, Hovestadt V, Schrimpf D, Sturm D, et al. DNA methylation-based classification of central nervous system tumours. Nature. 2018;555(7697):469-474. [DOI:10.1038/nature26000]

9. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115-118. [DOI:10.1038/nature21056]

10. Rimmer L, Howard C, Picca L, Bashir M. The automaton as a surgeon: the future of artificial intelligence in emergency and general surgery. Eur J Trauma Emerg Surg. 2020;47(5):757-762. [DOI:10.1007/s00068-020-01444-8]

11. Zhang J, Gajjala S, Agrawal P, Tison GH, Hallock LA, Beussink-Nelson L, et al. Fully automated echocardiogram interpretation in clinical practice. Circulation. 2018;138(16):1623-1635. [DOI:10.1161/CIRCULATIONAHA.118.034338]

12. McLennan S, Meyer A, Schreyer K, Buyx A. German medical students' views regarding artificial intelligence in medicine: a cross-sectional survey. PLOS Digit Health. 2022;1(10):e0000114. [DOI:10.1371/journal.pdig.0000114]

13. Jha N, Shankar PR, Al-Betar MA, Mukhia R, Hada K, Palaian S. Undergraduate medical students' and interns' knowledge and perception of artificial intelligence in medicine. Adv Med Educ Pract. 2022;13:927-937. [DOI:10.2147/AMEP.S368519]

14. Singh NM, Harrod JB, Subramanian S, Robinson M, Chang K, Cetin-Karayumak S, et al. How machine learning is powering neuroimaging to improve brain health. Neuroinformatics. 2022;20(4):943-964. [DOI:10.1007/s12021-022-09572-9]

15. Quinn T, Coghlan S. Readying medical students for medical AI: the need to embed AI ethics education. arXiv. 2021 Sep 7.

16. Yu KH, Beam AL, Kohane IS. Artificial intelligence in healthcare. Nat Biomed Eng. 2018;2(10):719-731. [DOI:10.1038/s41551-018-0305-z]

17. Kolachalama VB, Garg PS. Machine learning and medical education. NPJ Digit Med. 2018;1:54. [DOI:10.1038/s41746-018-0061-1]

18. Pinto dos Santos D, Giese D, Brodehl S, Chon SH, Staab W, Kleinert R, et al. Medical students' attitude towards artificial intelligence: a multicentre survey. Eur Radiol. 2019;29(4):1640-1646. [DOI:10.1007/s00330-018-5601-1]

19. Sit C, Srinivasan R, Amlani A, Muthuswamy K, Azam A, Monzon L, et al. Attitudes and perceptions of UK medical students towards artificial intelligence and radiology: a multicentre survey. Insights Imaging. 2020;11(1):14. [DOI:10.1186/s13244-019-0830-7]

20. Brouillette M. AI added to the curriculum for doctors-to-be. Nat Med. 2019;25(12):1808-1809. [DOI:10.1038/s41591-019-0648-3]

21. Jobin A, Ienca M, Vayena E. The global landscape of AI ethics guidelines. Nat Mach Intell. 2019;1:389-399. [DOI:10.1038/s42256-019-0088-2]

22. European Commission. Ethics guidelines for trustworthy AI [Internet]. European Commission. Available from: [cited 2023 Nov 15].

23. Floridi L, Cowls J, Beltrametti M, Chatila R, Chazerand P, Dignum V, et al. AI4People-an ethical framework for a good AI society: opportunities, risks, principles, and recommendations. Minds Mach. 2018;28:689-707. [DOI:10.1007/s11023-018-9482-5]

24. Coeckelbergh M. Ethics of artificial intelligence: some ethical issues and regulatory challenges. Technol Reg. 2019:31-34. [DOI:10.71265/a9yxhg88]

25. Bostrom N, Yudkowsky E. The ethics of artificial intelligence. In: Frankish K, Ramsey WM, editors. The Cambridge handbook of artificial intelligence. Cambridge: Cambridge University Press; 2014. p. 316-334. [DOI:10.1017/CBO9781139046855.020]

26. Whittlestone J, Nyrup R, Alexandrova A, Dihal K, Cave S. Ethical and societal implications of algorithms, data, and artificial intelligence: a roadmap for research. London: Nuffield Foundation; 2019.

27. Jeyaraman M, Balaji S, Jeyaraman N, Yadav S. Unraveling the ethical enigma: artificial intelligence in healthcare. Cureus. 2023;15(8):e43262. [DOI:10.7759/cureus.43262]

29. Gong B, Nugent JP, Guest W, Parker W, Chang PJ, Khosa F, et al. Influence of artificial intelligence on Canadian medical students' preference for radiology specialty: a national survey study. Acad Radiol. 2019;26(4):566-577. [DOI:10.1016/j.acra.2018.10.007]

30. Al Zaabi A, Al Maskari S, Al Abdulsalam A. Are physicians and medical students ready for artificial intelligence applications in healthcare? Digit Health. 2023;9:20552076231152167. [DOI:10.1177/20552076231152167]

31. Mehta N, Harish V, Bilimoria K, Morgado F, Ginsburg S, Law M, et al. Knowledge and attitudes on artificial intelligence in healthcare: a provincial survey study of medical students. MedEdPublish. 2021;10:75. [DOI:10.15694/mep.2021.000075.1]

32. Laï MC, Brian M, Mamzer M. Perceptions of artificial intelligence in healthcare: findings from a qualitative survey study among actors in France. J Transl Med. 2020;18(1):14. [DOI:10.1186/s12967-019-02204-y]

33. Ooi SK, Makmur A, Soon AY, Fook-Chong S, Liew C, Sia SY, et al. Attitudes toward artificial intelligence in radiology with learner needs assessment within radiology residency programmes: a national multi-programme survey. Singapore Med J. 2021;62:126. [DOI:10.11622/smedj.2019141]

34. Castagno S, Khalifa M. Perceptions of artificial intelligence among healthcare staff: a qualitative survey study. Front Artif Intell. 2020;3:84. [DOI:10.3389/frai.2020.578983]

35. Teng M, Singla R, Yau O, Lamoureux D, Gupta A, Hu Z, et al. Health care students' perspectives on artificial intelligence: countrywide survey in Canada. JMIR Med Educ. 2022;8(1):e33390. [DOI:10.2196/33390]

36. Alsiö Å, Wennström B, Landström B, Silén C. Implementing clinical education of medical students in hospital communities: experiences of healthcare professionals. Int J Med Educ. 2019;10:54-61. [DOI:10.5116/ijme.5c83.cb08]

37. Dhillon J, Salimi A, ElHawary H. Impact of COVID-19 on Canadian medical education: pre-clerkship and clerkship students affected differently. J Med Educ Curric Dev. 2020;7:2382120520965247. [DOI:10.1177/2382120520965247]

38. Whyte W, Hennessy C. Social media use within medical education: a systematic review to develop a pilot questionnaire on how social media can be best used at BSMS. MedEdPublish. 2017;6. [DOI:10.15694/mep.2017.000083]

39. Nisar S, Alshanberi AM, Mousa AH, El Said M, Hassan F, Rehman A, et al. Trend of social media use by undergraduate medical students; a comparison between medical students and educators. Ann Med Surg. 2022;81:104420. [DOI:10.1016/j.amsu.2022.104420]

40. Fitzgerald RT, Radmanesh A, Hawkins CM. Social media in medical education. AJNR Am J Neuroradiol. 2015;36:1814-1815. [DOI:10.3174/ajnr.A4136]

41. Cho S, Han B, Hur K, Mun J. Perceptions and attitudes of medical students regarding artificial intelligence in dermatology. J Eur Acad Dermatol Venereol. 2021;35(1):72-73. [DOI:10.1111/jdv.16812]

42. UserTesting. Healthcare chatbot apps are on the rise but the overall customer experience (CX) falls short according to a UserTesting report [Internet]. UserTesting. Available from: [cited 2022 Mar 1].

43. Manyika J, Chui M, Miremadi M, Bughin J, George K, Willmott P, et al. A future that works: automation, employment, and productivity [Internet]. McKinsey Global Institute. Available from: [cited 2024 Aug 12].

44. Weidener L, Fischer M. Artificial intelligence in medicine: cross-sectional study among medical students on application, education, and ethical aspects. JMIR Med Educ. 2024;10:e51247. [DOI:10.2196/51247]

45. Ahmed Z, Bhinder KK, Tariq A, Tahir MJ, Mehmood Q, Tabassum MS, et al. Knowledge, attitude, and practice of artificial intelligence among doctors and medical students in Pakistan: a cross-sectional online survey. Ann Med Surg. 2022;76:103493. [DOI:10.1016/j.amsu.2022.103493]

46. Yang YY, Shulruf B. Expert-led and artificial intelligence (AI) system-assisted tutoring course increase confidence of Chinese medical interns on suturing and ligature skills: prospective pilot study. J Educ Eval Health Prof. 2019;16:7. [DOI:10.3352/jeehp.2019.16.7]

47. Fazlollahi AM, Bakhaidar M, Alsayegh A, Yilmaz R, Winkler-Schwartz A, Mirchi N, et al. Effect of artificial intelligence tutoring vs expert instruction on learning simulated surgical skills among medical students: a randomized clinical trial. JAMA Netw Open. 2022;5(2):e2149008. [DOI:10.1001/jamanetworkopen.2021.49008]

48. Mir MM, Mir GM, Raina NT, Mir SM, Mir SM, Miskeen E, et al. Application of artificial intelligence in medical education: current scenario and future perspectives. J Adv Med Educ Prof. 2023;11(3):133-140.

49. Paranjape K, Schinkel M, Panday RN, Car J, Nanayakkara P. Introducing artificial intelligence training in medical education. JMIR Med Educ. 2019;5(2):e16048. [DOI:10.2196/16048]

50. Fares J, Salhab HA, Fares MY, Khachfe HH, Hamadeh RS, Fares Y. Academic medicine and the development of future leaders in healthcare. In: Laher I, editor. Handbook of healthcare in the Arab world. Cham: Springer International Publishing; 2020. p. 1-18. [DOI:10.1007/978-3-319-74365-3_167-1]

51. KSA. Vision 2030 Kingdom of Saudi Arabia. Government of Saudi Arabia [Internet]. Riyadh: Government of Saudi Arabia; 2023. Available from: https://vision2030.gov.sa/download/file/fid/417 [cited 2023 Nov 28].

52. Government of Oman. Oman Vision 2040 [Internet]. Muscat: Government of Oman; 2020. Available from: [cited 2023 Dec 15].

53. Dabdoub F, Colangelo M, Aljumah M. Artificial intelligence in healthcare and biotechnology: a review of the Saudi experience. J Artif Intell Cloud Comput. 2022;1:2-6. [DOI:10.47363/JAICC/2022(1)107]

54. Stewart J, Lu J, Gahungu N, Goudie A, Fegan PG, Bennamoun M, et al. Western Australian medical students' attitudes towards artificial intelligence in healthcare. PLoS One. 2023;18(8):e0290642. [DOI:10.1371/journal.pone.0290642]

55. Bisdas S, Topriceanu CC, Zakrzewska Z, Irimia AV, Shakallis L, Subhash J, et al. Artificial intelligence in medicine: a multinational multi-center survey on the medical and dental students' perception. Front Public Health. 2021;9:795284. [DOI:10.3389/fpubh.2021.795284]

56. Grosz BJ, Grant DG, Vredenburgh K, Behrends J, Hu L, Simmons A, et al. Embedded ethics: integrating ethics broadly across computer science education. Commun ACM. 2018;62(3):54-61. [DOI:10.1145/3330794]

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.