Volume 18, Issue 2 (2025)

J Med Edu Dev 2025, 18(2): 129-143

Ethics code: 4000739

Salmani N, Bagheri I. A systematic review of methods for assessing clinical reasoning in nursing students. J Med Edu Dev 2025; 18 (2) :129-143

URL: http://edujournal.zums.ac.ir/article-1-2352-en.html

1- National Agency for Strategic Research in Medical Education, Tehran, Iran.

2- Faculty of Nursing and Midwifery, Research Center for Nursing and Midwifery Care, Non-communicable Diseases Research Institute, Shahid Sadoughi University of Medical Sciences, Yazd, Iran. imane.bagheri66@gmail.com

Keywords: nursing students, clinical reasoning, systematic review, clinical reasoning tools, nursing education


Abstract

Background & Objective: Nursing instructors can play a crucial role in enhancing students' clinical reasoning skills by evaluating them and offering timely, constructive feedback. Therefore, identifying effective clinical reasoning assessment tools is vital for accomplishing this objective. To this end, this study aimed to review the methods for assessing clinical reasoning in nursing students.

Materials & Methods: This systematic review was conducted in May 2024 using the keywords "Clinical Reasoning" and "Nursing Students." Eligible articles published in both English and Persian were systematically searched in various national and international online databases, including SID, Magiran, Scopus, Web of Science, PubMed, and ProQuest.

Results: A total of 2893 articles were retrieved in the initial search. After duplicates and articles not meeting the inclusion criteria were removed, a quality assessment was conducted using the Critical Appraisal Skills Programme (CASP), the Mixed Methods Appraisal Tool (MMAT), and the Joanna Briggs Institute (JBI) checklists. Ultimately, 20 articles on clinical reasoning assessment tools for nursing students were reviewed. The findings revealed that researchers use a range of tools to assess clinical reasoning, the most common being the Nurses Clinical Reasoning Scale (NCRS), Script Concordance Tests (SCTs), key feature tests, the Outcome-Present State Test (OPT), rubrics, and the triple jump exercise. However, the validity and reliability of the tools were often not reported, and their acceptability and cost-effectiveness have not been assessed in the literature.

Conclusion: The findings indicated that the NCRS is the most commonly used assessment tool. Therefore, conducting psychometric evaluations of this tool in Iran is recommended. Furthermore, longitudinal studies are needed to evaluate the impact of clinical reasoning assessment tools on nursing students and explore how these tools can be effectively integrated into nursing curricula.

Clinical reasoning helps nurses make the most accurate decisions in clinical settings and provide person-centered, high-quality, effective, and safe patient care [7-10]. In other words, nurses with adequate clinical reasoning skills positively impact patient care outcomes [11]. However, a literature review suggests nurses often have limited clinical reasoning skills and use various cognitive strategies [12, 13]. The most commonly used skill was checking for accuracy and reliability. The reasoning process of nurses encompasses the phases of assessment, analysis, diagnosis, planning/implementation, and evaluation [14].

Nurses with inadequate clinical reasoning skills find decision-making complex, with their ability to triage patients showing accuracy rates ranging from 22% to 89% [12, 13]. These nurses often cannot recognize situations where the patient's condition deteriorates and fails to save the patient [11]. Poor clinical reasoning skills can lead to poor diagnosis, failure to provide effective treatment, inappropriate management, and adverse patient outcomes [15]. In other words, the occurrence of hospital complications in patients is directly related to the quality of care and the clinical reasoning skills of caregivers [16]. Therefore, developing clinical reasoning skills before nurses enter the clinical field is necessary [7, 8].

Strengthening clinical reasoning for nursing students is a goal of nursing education [5] and is considered an important topic in nursing programs and learning outcomes [13]. Nursing instructors are responsible for assessing students' understanding of the logic of clinical actions, and one of the primary goals of clinical nursing educators is to develop clinical reasoning skills in students and bridge the gap between theoretical and practical education [8]. Students must learn to behave in critical situations and make wise decisions [17]. Thus, clinical reasoning is an important learning outcome that requires accurate assessment [3, 18]. Determining the clinical reasoning skills of nursing students can be a window to assessing their ability to make accurate clinical judgments and, thus, will help develop appropriate teaching and learning strategies that promote the clinical reasoning skills of nursing students [19]. Accordingly, assessing clinical reasoning and decision-making is essential to prepare for future professional tasks, and a test that aims to assess clinical competence should be able to measure, among other things, the student's ability to reason clinically [20].

However, there are difficulties in finding an effective method for assessing students' clinical reasoning processes for diagnosis and treatment [21]. This is because clinical reasoning is highly complex, and its assessment poses significant challenges.

Additionally, measuring internal mental processes is inherently difficult since they are not directly observable [22]. There is currently a wide range of clinical reasoning assessments, and the literature on these instruments is widely dispersed, making it challenging for instructors to select and carry out assessments aligned with their specific goals, needs, and resources. These assessments have often been designed in different contexts [23]. Hence, the large number and variety of clinical reasoning assessment methods present challenges in selecting assessments directed at a specific goal [24].

Furthermore, when developing assessments, principles such as assessment objectives, what should be assessed, how to assess, reliability and validity of tests, educational impact, cost-effectiveness, and acceptability should be considered [25-28]. Utilizing a standard guideline for objectively assessing clinical reasoning enhances evaluations' accuracy.

Effective measurement makes it possible to determine the extent to which a researcher's intervention has led to change [29]. Therefore, a combination of the existing evidence is necessary to advance the assessment of this basic competency, and the value of the findings of this study can be better understood through the lens of competency-based education [24]. To this end, the present study aimed to identify methods for assessing clinical reasoning in nursing students.

Materials & Methods

Design and setting(s)

This systematic review was conducted using the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) checklist.

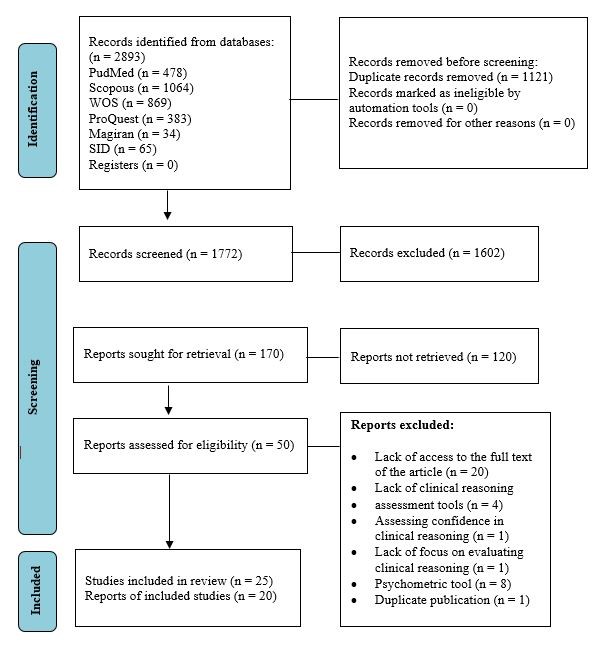

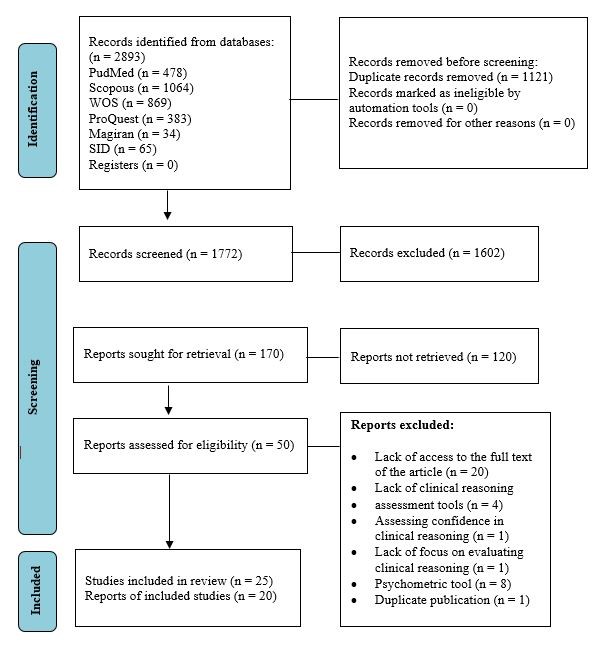

Data collection methods (Figure 1)

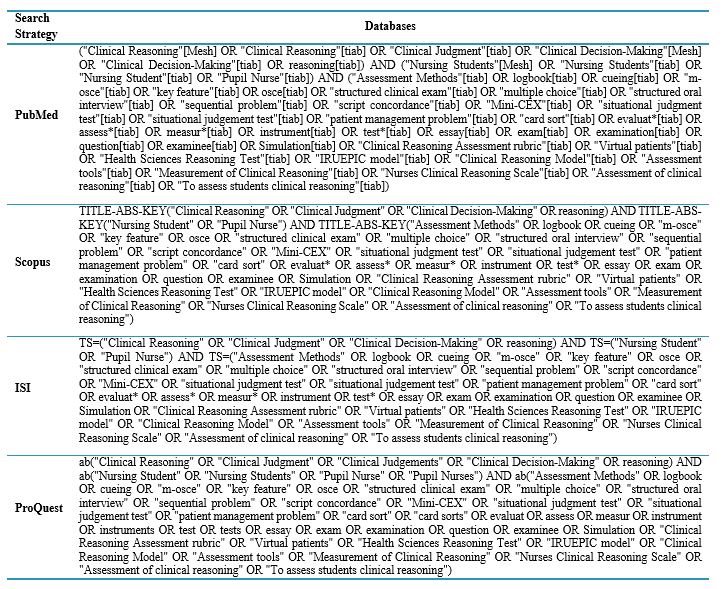

In this systematic review, the MESH, SNOMED, and EMBASE thesauruses and related literature were examined to select relevant keywords.

Figure 1. PRISMA 2020 flow diagram

Two thousand eight hundred ninety-three articles were retrieved from all databases and imported into EndNote-9 software. After removing duplicates, two researchers screened the titles and abstracts for relevance and adherence to the inclusion criteria. The articles were independently assessed by both researchers using the appropriate tools, and the results of these evaluations were compared and confirmed during a joint session. In cases of disagreement, a third researcher was consulted for further clarification. The inclusion criteria were original quantitative (Descriptive, analytical, and intervention studies), qualitative, and mixed-methods articles published in English or Persian. To maximize retrieval, no restrictions were applied based on the year of publication, and articles were searched from inception until May 2024. This study included and reviewed research articles that examined clinical reasoning as a variable or primary focus in nursing student populations. Articles that assessed confidence in clinical reasoning or articles that did not focus on clinical reasoning assessment and did not use clinical reasoning assessment tools were excluded from the study. Additionally, short communication articles, letters to the editor, book reviews, review articles, and articles for which the full text was not available were excluded from the study.

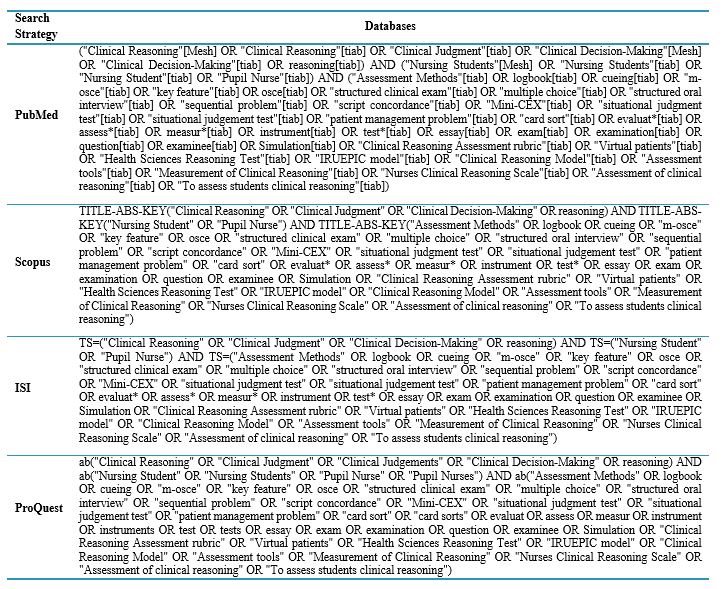

Table 1. Keywords and search strategies

Tools/Instruments

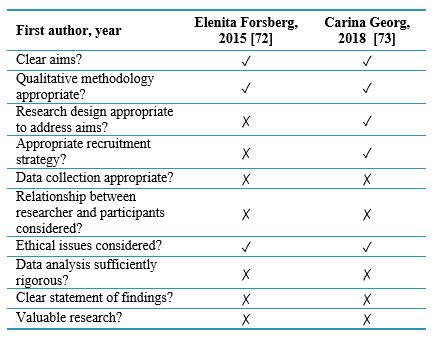

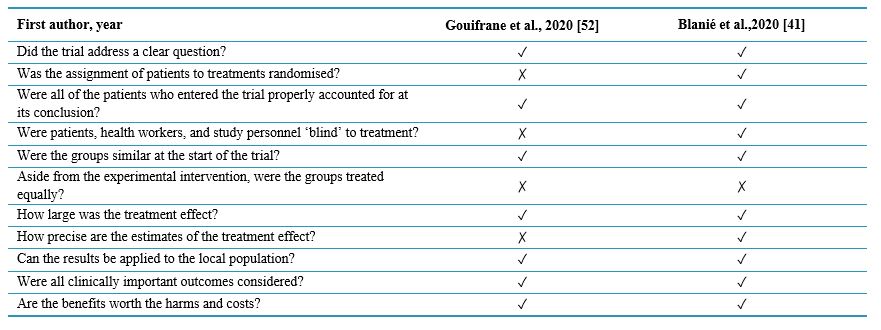

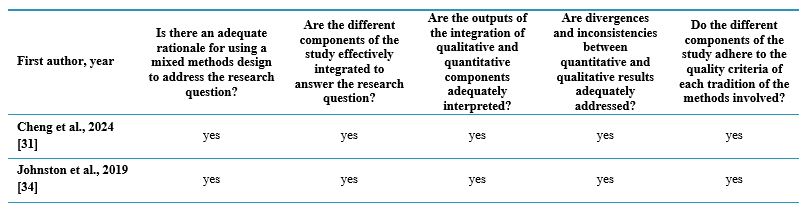

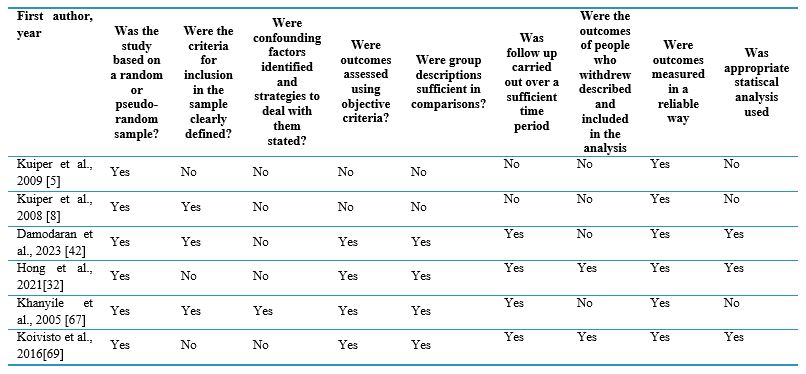

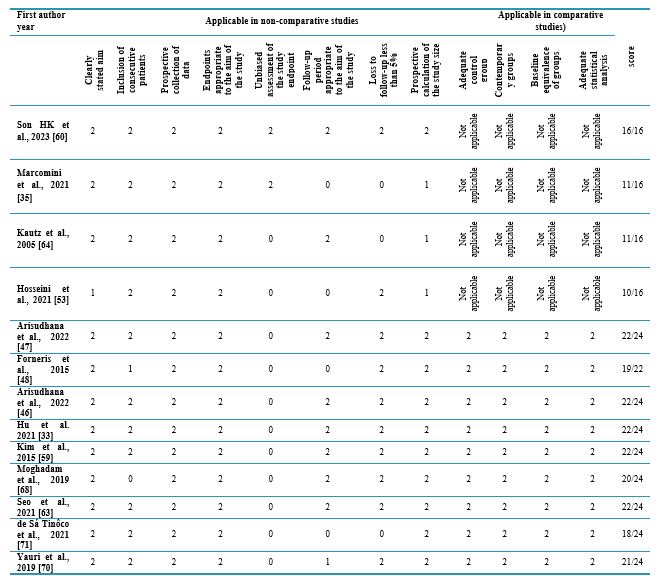

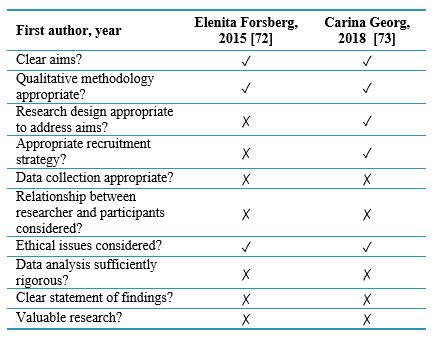

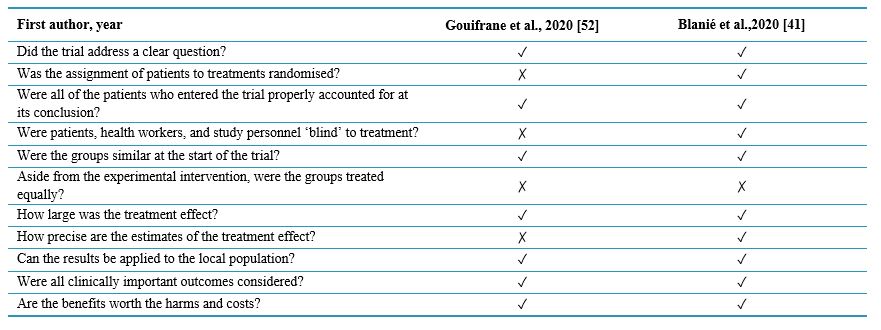

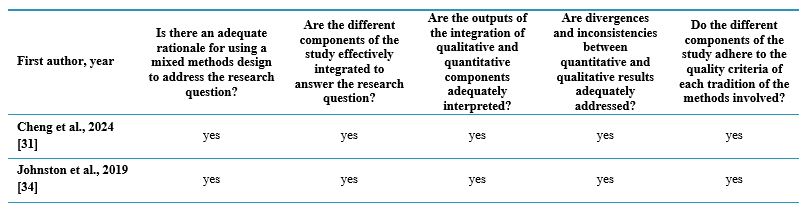

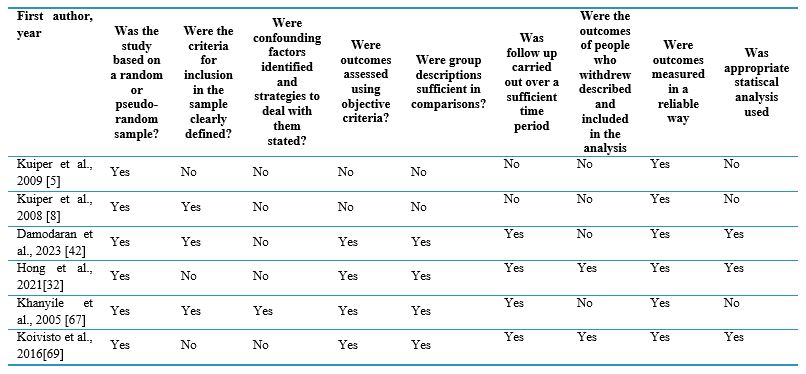

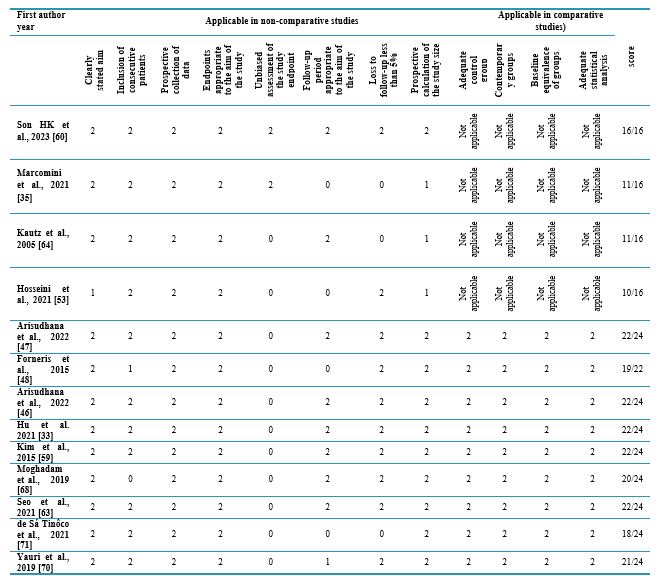

Finally, the quality of 25 articles was evaluated using assessment tools fitting the study design. The qualitative articles were assessed using the Critical Appraisal Skills Programme (CASP) tool for qualitative studies (10 items - Table 2). Interventional articles were evaluated using the CASP tool for clinical trial studies (11 items - Table 3), while mixed methods studies were assessed with the 5-item Mixed Methods Appraisal Tool (MMAT - Table 4). Descriptive articles were reviewed using the Joanna Briggs Institute (JBI) tool (8 items - Table 5), and non-clinical trials were assessed with the Minor tool (12 items - Table 6).

Table 2. Quality qualitative studies

Table 3. Quality clinical trial studies

Table 4. Quality mixed method study

Table 5. Quality cross-sectional studies

Table 6. Quality non randomized studies

Data analysis

Based on the research team members' decision, articles accepted for final evaluation needed to achieve a score of at least 50% of the total possible points. Two researchers reviewed and rated all articles, sharing the results and reaching an agreement. A third rater resolved any disagreement between the two raters.

Results

In the quality assessment, five articles did not meet the criteria for inclusion in the study, resulting in a final selection of 20 articles for analysis (Figure 1). The quality status of the methodology for these 20 approved studies is detailed in Tables 2 to 6.

Background & Objective: Nursing instructors can play a crucial role in enhancing students' clinical reasoning skills by evaluating them and offering timely, constructive feedback. Therefore, identifying effective clinical reasoning assessment tools is vital for accomplishing this objective. To this end, this study aimed to review the methods for assessing clinical reasoning in nursing students.

Materials & Methods: This systematic review was conducted in May 2024 using the keywords "Clinical Reasoning" and "Nursing Students." Eligible articles published in both English and Persian were systematically searched in various national and international online databases, including SID, Magiran, Scopus, Web of Science, PubMed, and ProQuest.

Results: A total of 2893 articles were retrieved from the initial search findings. After removing duplicates and irrelevant articles based on the inclusion criteria, a qualitative assessment was conducted using the Critical Appraisal Skills Programme (CASP), the Mixed Methods Appraisal Tool (MMAT), and the Joanna Briggs Institute (JBI) checklists. Ultimately, 20 articles on clinical reasoning assessment tools for nursing students were reviewed. The findings revealed that researchers utilize a range of tools to assess clinical reasoning, with the most common being the Nurses Clinical Reasoning Scale (NCRS), Script Concordance Tests (SCTs), key feature tests, Outcome-Present State Test (OPT), rubrics, and the triple jump exercise. However, the validity and reliability of the tools used and their acceptability and cost-effectiveness have not been assessed in the literature.

Conclusion: The findings indicated that the NCRS is the most commonly used assessment tool. Therefore, conducting psychometric evaluations of this tool in Iran is recommended. Furthermore, longitudinal studies are needed to evaluate the impact of clinical reasoning assessment tools on nursing students and explore how these tools can be effectively integrated into nursing curricula.

Introduction

In any educational system, moving learners from memorization to reasoning (an innovative way to solve problems) is a fundamental issue [1]. Reasoning is a thinking process that transforms an undesirable or problematic situation into a desirable one by processing the subject matter [2]. Clinical reasoning is one of the important and essential skills in nursing education and practice [3, 4]. Clinical reasoning in nursing is a cognitive process that nurses use to collect, synthesize, and understand information [3] and identify and diagnose patient problems [5] to guide decisions about patient care [3]. In other words, clinical reasoning is the ability of a nurse to look at a large volume of data and then accurately identify and apply effective nursing practices to address the problems identified during patient care [6]. Clinical reasoning is of great importance for learning and developing nursing care. The effective use of clinical reasoning in complex care situations is currently one of the healthcare requirements for rapid assessment of care needs and provision of high-quality care [3, 4].Clinical reasoning helps nurses make the most accurate decisions in clinical settings and provide person-centered, high-quality, effective, and safe patient care [7-10]. In other words, nurses with adequate clinical reasoning skills positively impact patient care outcomes [11]. However, a literature review suggests nurses often have limited clinical reasoning skills and use various cognitive strategies [12, 13]. The most commonly used skill was checking for accuracy and reliability. The reasoning process of nurses encompasses the phases of assessment, analysis, diagnosis, planning/implementation, and evaluation [14].

Nurses with inadequate clinical reasoning skills find decision-making complex, with their ability to triage patients showing accuracy rates ranging from 22% to 89% [12, 13]. These nurses often cannot recognize situations where the patient's condition deteriorates and fails to save the patient [11]. Poor clinical reasoning skills can lead to poor diagnosis, failure to provide effective treatment, inappropriate management, and adverse patient outcomes [15]. In other words, the occurrence of hospital complications in patients is directly related to the quality of care and the clinical reasoning skills of caregivers [16]. Therefore, developing clinical reasoning skills before nurses enter the clinical field is necessary [7, 8].

Strengthening clinical reasoning for nursing students is a goal of nursing education [5] and is considered an important topic in nursing programs and learning outcomes [13]. Nursing instructors are responsible for assessing students' understanding of the logic of clinical actions, and one of the primary goals of clinical nursing educators is to develop clinical reasoning skills in students and bridge the gap between theoretical and practical education [8]. Students must learn to behave in critical situations and make wise decisions [17]. Thus, clinical reasoning is an important learning outcome that requires accurate assessment [3, 18]. Determining the clinical reasoning skills of nursing students can be a window to assessing their ability to make accurate clinical judgments and, thus, will help develop appropriate teaching and learning strategies that promote the clinical reasoning skills of nursing students [19]. Accordingly, assessing clinical reasoning and decision-making is essential to prepare for future professional tasks, and a test that aims to assess clinical competence should be able to measure, among other things, the student's ability to reason clinically [20].

However, there are difficulties in finding an effective method for assessing students' clinical reasoning processes for diagnosis and treatment [21]. This is because clinical reasoning is highly complex, and its assessment poses significant challenges.

Additionally, measuring internal mental processes is inherently difficult since they are not directly observable [22]. There is currently a wide range of clinical reasoning assessments, and the literature on these instruments is widely dispersed, making it challenging for instructors to select and carry out assessments aligned with their specific goals, needs, and resources. These assessments have often been designed in different contexts [23]. Hence, the large number and variety of clinical reasoning assessment methods present challenges in selecting assessments directed at a specific goal [24].

Furthermore, when developing assessments, principles such as assessment objectives, what should be assessed, how to assess, reliability and validity of tests, educational impact, cost-effectiveness, and acceptability should be considered [25-28]. Utilizing a standard guideline for objectively assessing clinical reasoning enhances evaluations' accuracy.

Effective measurement makes it possible to determine the extent to which a researcher's intervention has led to change [29]. Therefore, a combination of the existing evidence is necessary to advance the assessment of this basic competency, and the value of the findings of this study can be better understood through the lens of competency-based education [24]. To this end, the present study aimed to identify methods for assessing clinical reasoning in nursing students.

Materials & Methods

Design and setting(s)

This systematic review was conducted using the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) checklist.

Data collection methods (Figure 1)

In this systematic review, the MESH, SNOMED, and EMBASE thesauruses and related literature were examined to select relevant keywords.

Figure 1. PRISMA 2020 flow diagram

Subsequently, a comprehensive search using the keywords "Clinical Reasoning" AND "Nursing Students" was conducted in the PubMed, Scopus, Web of Science, ProQuest, Magiran, and SID databases in May 2024 (Table 1).

It should be noted that gray literature was not reviewed in this study.

Participants and sampling It should be noted that gray literature was not reviewed in this study.

Two thousand eight hundred ninety-three articles were retrieved from all databases and imported into EndNote-9 software. After removing duplicates, two researchers screened the titles and abstracts for relevance and adherence to the inclusion criteria. The articles were independently assessed by both researchers using the appropriate tools, and the results of these evaluations were compared and confirmed during a joint session. In cases of disagreement, a third researcher was consulted for further clarification. The inclusion criteria were original quantitative (Descriptive, analytical, and intervention studies), qualitative, and mixed-methods articles published in English or Persian. To maximize retrieval, no restrictions were applied based on the year of publication, and articles were searched from inception until May 2024. This study included and reviewed research articles that examined clinical reasoning as a variable or primary focus in nursing student populations. Articles that assessed confidence in clinical reasoning or articles that did not focus on clinical reasoning assessment and did not use clinical reasoning assessment tools were excluded from the study. Additionally, short communication articles, letters to the editor, book reviews, review articles, and articles for which the full text was not available were excluded from the study.

Table 1. Keywords and search strategies

Tools/Instruments

Finally, the quality of 25 articles was evaluated using assessment tools fitting the study design. The qualitative articles were assessed using the Critical Appraisal Skills Programme (CASP) tool for qualitative studies (10 items - Table 2). Interventional articles were evaluated using the CASP tool for clinical trial studies (11 items - Table 3), while mixed methods studies were assessed with the 5-item Mixed Methods Appraisal Tool (MMAT - Table 4). Descriptive articles were reviewed using the Joanna Briggs Institute (JBI) tool (8 items - Table 5), and non-clinical trials were assessed with the Minor tool (12 items - Table 6).

Table 2. Quality qualitative studies

Table 3. Quality clinical trial studies

Table 4. Quality mixed method study

Table 5. Quality cross-sectional studies

Table 6. Quality non randomized studies

Data analysis

Based on the research team members' decision, articles accepted for final evaluation needed to achieve a score of at least 50% of the total possible points. Two researchers reviewed and rated all articles, sharing the results and reaching an agreement. A third rater resolved any disagreement between the two raters.
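Because the appraisal checklists differ in length (for example, 10 CASP qualitative items versus 5 MMAT items), the 50% rule is easiest to apply after normalizing each study's score by the maximum its checklist allows. The Python sketch below is illustrative only: the study identifiers and item scores are hypothetical, and it assumes 1 point per satisfied item except for MINORS-style items, which are assumed to be scored 0 to 2.

```python
from dataclasses import dataclass


@dataclass
class Appraisal:
    study_id: str          # hypothetical identifier
    checklist: str         # e.g. "CASP qualitative", "MMAT", "MINORS"
    item_scores: list[int] # points awarded for each checklist item
    max_per_item: int = 1  # assumption: 1 point per "yes"; MINORS items go up to 2

    @property
    def fraction(self) -> float:
        """Share of the total possible points this study achieved."""
        for s in self.item_scores:
            if not 0 <= s <= self.max_per_item:
                raise ValueError(f"{self.study_id}: item score {s} out of range")
        return sum(self.item_scores) / (self.max_per_item * len(self.item_scores))


def passes_threshold(appraisal: Appraisal, threshold: float = 0.5) -> bool:
    """Accept a study only if it reaches at least `threshold` of the possible points."""
    return appraisal.fraction >= threshold


# Hypothetical appraisals for three studies.
appraisals = [
    Appraisal("A", "CASP qualitative", [1, 1, 1, 0, 1, 1, 0, 1, 1, 1]),  # 8/10
    Appraisal("B", "MMAT", [1, 0, 0, 1, 0]),                              # 2/5
    Appraisal("C", "MINORS", [2, 1, 2, 0, 1, 2, 2, 1, 0, 1, 2, 2],
              max_per_item=2),                                          # 16/24
]
included = [a.study_id for a in appraisals if passes_threshold(a)]
print(included)  # ['A', 'C']
```

Under this reading of "at least 50%", a study that scores exactly half of the possible points is retained.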

Results

In the quality assessment, five articles did not meet the criteria for inclusion in the study, resulting in a final selection of 20 articles for analysis (Figure 1). The quality status of the methodology for these 20 approved studies is detailed in Tables 2 to 6.

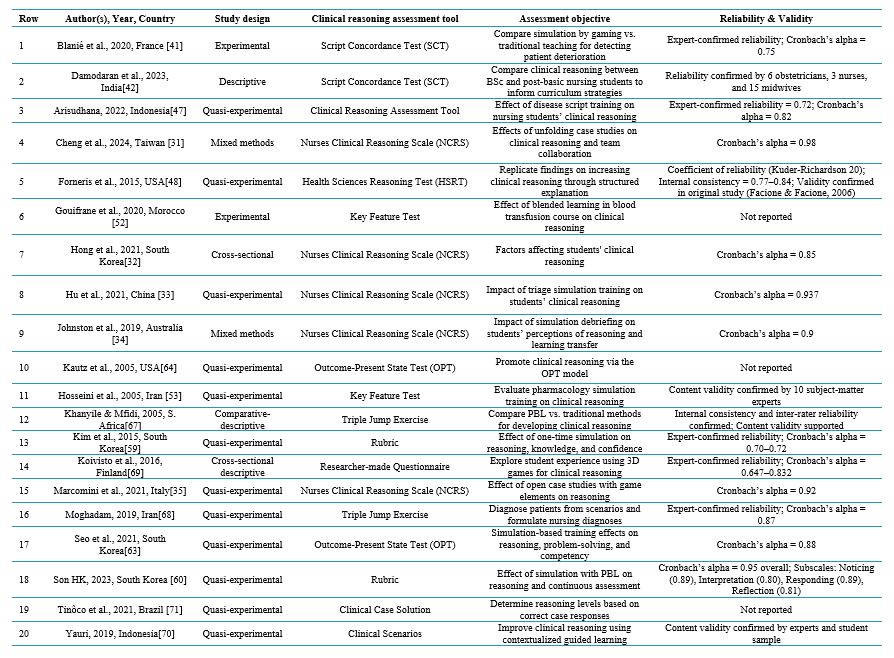

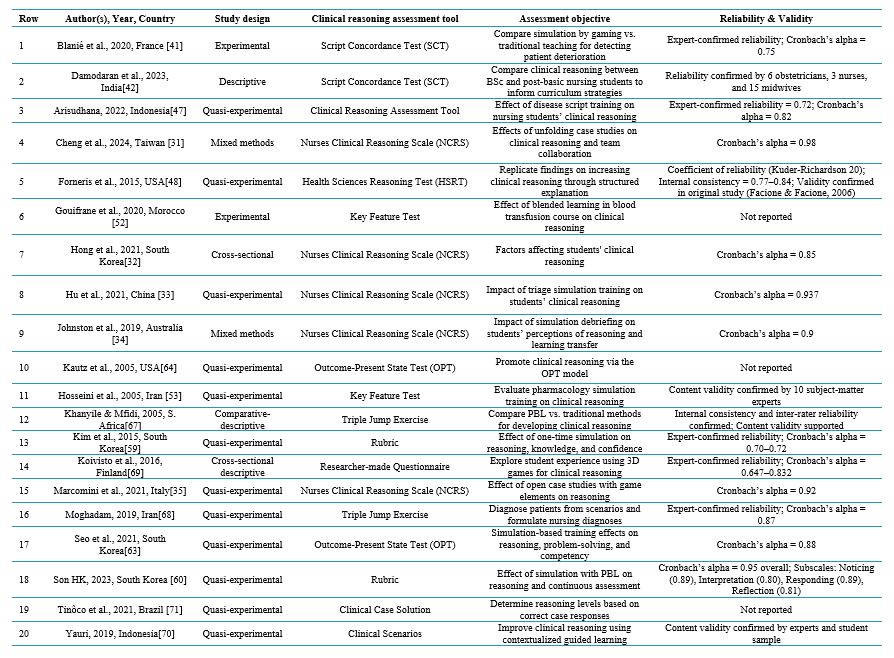

This review comprised 20 articles (Table 7): 12 interventional studies, two clinical trials, four descriptive cross-sectional studies, and two mixed-methods studies. The findings revealed that five articles used the Nurses Clinical Reasoning Scale (NCRS) (25%), two used Script Concordance Tests (SCTs) (10%), two applied the key feature test (10%), two used the Outcome-Present State Test (10%), two implemented rubrics (10%), two adopted the triple jump exercise (10%), one used the Health Sciences Reasoning Test (HSRT) (5%), one applied scenarios (5%), one employed the Clinical Reasoning Assessment Tool (5%), and one used a researcher-made questionnaire (5%). Additionally, ten articles (50%) did not report the validity of their instruments, and six did not report their reliability. Moreover, no study reported a qualitative assessment of nursing students' clinical reasoning. In addition, no study addressed the acceptability, costs, or cost-effectiveness of clinical reasoning assessment tools.
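The percentages reported above follow from dividing each tool's article count by the 20 included articles. A short Python sketch of that tally, using the counts stated in the text (tool labels are shortened for readability):

```python
from collections import Counter

# Articles per assessment tool, as listed in the Results.
tool_counts = Counter({
    "NCRS": 5,
    "Script Concordance Test": 2,
    "Key feature test": 2,
    "Outcome-Present State Test": 2,
    "Rubric": 2,
    "Triple jump exercise": 2,
    "HSRT": 1,
    "Scenarios": 1,
    "Clinical Reasoning Assessment Tool": 1,
    "Researcher-made questionnaire": 1,
})
N_ARTICLES = 20  # articles included in the review (the percentage denominator)


def share(count: int, total: int = N_ARTICLES) -> float:
    """Percentage of the included articles, rounded to one decimal place."""
    return round(100 * count / total, 1)


for tool, n in tool_counts.most_common():
    print(f"{tool}: {n} article(s), {share(n)}%")
```

The per-tool counts sum to 19, so the percentages are computed against the 20 included articles rather than against the sum of the counts, which matches the figures given in the text.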

Table 7. A review of the studies conducted on clinical reasoning assessment tools

Discussion

In this section, 10 identified tools for assessing clinical reasoning are presented separately:

The Nurses Clinical Reasoning Scale (NCRS)

The review data indicated that the most frequently used clinical reasoning assessment tool was the NCRS. This scale, developed by Liou et al., is available in Chinese and consists of 15 items scored on a 5-point Likert scale. The total score ranges from 15 to 75, with higher scores reflecting a higher level of clinical reasoning. The content validity of the tool was confirmed with a Content Validity Index (CVI) of 1, and its construct validity was examined through factor analysis, which confirmed the 15 items. The instrument's reliability was confirmed with a Cronbach's alpha coefficient of 0.7, an internal consistency coefficient of 0.7, and an Intraclass Correlation Coefficient (ICC) of 0.85. Notably, the interpretation of clinical reasoning with this instrument is limited because it measures self-perceived competencies rather than demonstrated practical competencies in clinical reasoning [30].

This instrument was used in five studies conducted in Taiwan [31], Korea [32], China [33], Australia [34], and Italy [35]. The studies from Taiwan, Korea, and China addressed only the psychometric properties of the original version of the instrument, focusing solely on its reliability; the reported reliability values were 0.98 [30], 0.85 [32], and 0.93 [33], respectively. The study conducted in Australia only mentioned permission to use the instrument and did not assess or report the validity and reliability of the scale [34]. The study conducted in Italy did not report the scale's validity but assessed and confirmed its reliability, with a Cronbach's alpha coefficient of 0.90 [35].

Because data collection is one of the most important stages of a research project, it requires reliable and valid instruments [1]; reliable results cannot be extracted from imprecisely collected data. For this reason, data should be collected with valid instruments that fit the cultural context of the research population. If no such instrument exists, a valid and reliable instrument should be translated and adapted to the cultural context of the community in question [36]. Studies by Cheng [31], Hu [33], and Johnston [34] have highlighted the limitations of this tool, particularly the self-reported nature of students' clinical reasoning scores, which makes it a subjective form of evaluation.
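The NCRS studies above report internal consistency as Cronbach's alpha. For readers comparing such values, here is a minimal Python sketch of the standard alpha formula; the response matrix is hypothetical and is not data from any reviewed study.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(responses[0])  # number of items
    if k < 2:
        raise ValueError("alpha requires at least two items")
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    item_var_sum = sum(pvariance(item) for item in items)
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical 1-5 Likert answers from five students on four items.
scores = [
    [4, 5, 4, 4],
    [2, 3, 2, 3],
    [3, 3, 4, 3],
    [5, 5, 5, 4],
    [1, 2, 2, 1],
]
print(round(cronbach_alpha(scores), 2))
```

Population and sample variances give the same alpha as long as the same one is used for items and totals, because the denominators cancel in the ratio.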

Script Concordance Tests (SCTs)

The second tool is the script concordance test (SCT), which is considered effective for assessing clinical reasoning. SCTs are grounded in script theory from cognitive psychology [37], which posits that healthcare providers organize, store, and access their knowledge through "disease scripts." These scripts allow individuals to use pattern recognition and previous clinical experience to make decisions during patient care [38]. SCTs evaluate students' clinical reasoning under conditions of uncertainty in complex situations [39]. Notably, SCTs do not analyze students' problem-solving approaches; instead, they assess whether students' conclusions align with expert opinion, which is where their unique value for measuring clinical reasoning lies. One strength of SCTs is that various media, including images, audio, and video, can be incorporated into the scenarios, allowing them to closely replicate real-life situations. However, it is difficult to develop scenarios that measure clinical reasoning rather than knowledge, and experts who can score the responses are not easy to access [40]. The review data showed that two studies on nursing students used SCTs to assess clinical reasoning. Blanié et al. developed an SCT based on the frameworks of Charlin et al. and Forneris et al.; its validity was confirmed, and its reliability was established with a Cronbach's alpha coefficient of 0.75 [41]. Regarding the limitations of the tool, Blanié notes that it requires prior training before use and that reliance on students' self-reporting may diminish data reliability. In a study by Damodaran et al., the developed scenarios were given to a panel of experts, who answered the items and confirmed the validity of the scenarios [42].

To enhance the accuracy of the test and ensure adequate reliability, the SCT reference panel should include 10 to 20 members, and a reliable test requires 25 scenarios with four items each [43]. Scholars believe that SCTs are well accepted as a tool for measuring clinical reasoning because they reflect real clinical situations. Furthermore, training students on this type of test and re-administering it can improve their understanding of SCTs [44]. Only a very limited number of studies have explored the use of SCTs in nursing, indicating that these assessments have not yet garnered significant attention in this field [45].
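SCTs judge whether a student's answer agrees with expert opinion. A widely described way to do this is the aggregate scoring method associated with Charlin and colleagues, in which each answer earns partial credit in proportion to how many panelists chose it. The reviewed studies do not state their scoring method, so the Python sketch below is an assumption-labeled illustration with a hypothetical panel and items.

```python
from collections import Counter


def item_credits(panel_answers: list[int]) -> dict[int, float]:
    """Aggregate scoring: credit = panelists choosing an answer / panelists choosing the modal answer."""
    votes = Counter(panel_answers)
    modal_votes = max(votes.values())
    return {answer: n / modal_votes for answer, n in votes.items()}


def score_sct(candidate: list[int], panel: list[list[int]]) -> float:
    """Candidate's SCT score as a percentage of the maximum (1 credit per item)."""
    if len(candidate) != len(panel):
        raise ValueError("expected one candidate answer per item")
    total = sum(item_credits(answers).get(choice, 0.0)
                for choice, answers in zip(candidate, panel))
    return 100 * total / len(panel)


# Hypothetical 10-member panel answering two items on a -2..+2 scale.
panel = [
    [1] * 6 + [0] * 3 + [2],  # item 1: modal answer +1 (6 votes)
    [-1] * 5 + [-2] * 5,      # item 2: two answers tied at 5 votes each
]
print(score_sct([0, -2], panel))  # 0.5 + 1.0 credits over 2 items -> 75.0
```

Answers chosen by no panelist earn zero, and tied modal answers both earn full credit, which is how the method tolerates legitimate expert disagreement.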

Clinical Reasoning Assessment Tool

The next tool is the Clinical Reasoning Assessment Tool, developed in Indonesian by Arisudana and Puspawait. This tool consists of 36 items scored on a 3-point Likert scale, with total scores ranging from 25 to 75. Its reliability has been confirmed with a Cronbach's alpha coefficient of 0.82, its specificity is reported at 65%, and its precision is estimated at 72%. Its validity has also been established [46]. However, it has been used only by its developers, Arisudana and Matini, in a study conducted in Indonesia. That study identified potential response bias from students as a limitation, given the self-reported nature of the assessment [47].

Health Sciences Reasoning Test (HSRT)

The fourth tool is the HSRT. This tool was used by Forneris et al. in Minnesota. The HSRT is a multiple-choice test consisting of 33 items, featuring a variety of scenarios in clinical and professional contexts with information presented in both text and graphic formats. The items on this test require test takers to apply their skills to interpret information, analyze data, draw valid inferences, identify claims and reasons, and evaluate the quality of arguments. The tool's reliability has been established with a coefficient of reproducibility of 20, while its internal consistency is supported by Cronbach's alpha coefficient, which ranges from 0.77 to 0.84. A limitation of this tool is that it is not specifically designed for nursing or nursing students; rather, it aims to assess health professionals, which may limit its effectiveness in evaluating clinical reasoning in nursing students [48].

Key Feature

The Key Feature Test is the fifth tool. It is a written or electronic test in which a short scenario containing key and non-key components is presented, and the candidate must make a clinical decision based on it. For example, the candidate might need to determine which key findings are necessary for diagnosis and which actions should be prioritized in the patient's clinical management. Responses can be either brief written answers or selections from a list [49]. To ensure that a key feature test has good face and content validity, the items should be developed from a blueprint that clearly outlines the main focuses [50, 51]. The review data indicated that this tool has been used in two studies. Gouifrane et al. designed 30 key feature items based on a clinical case of blood transfusion, enabling students to identify relevant clues by linking information and searching for missing details, to form hypotheses and clinical judgments, and ultimately to make effective clinical decisions with appropriate explanations. Gouifrane noted that the tool is not easy to apply in clinical settings [52]. Similarly, Hosseini et al. developed and used 15 key feature questions to assess nursing students' clinical reasoning in a clinical pharmacology course [53].
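Key feature items are usually scored by how many of an item's key features the candidate identifies. The reviewed studies do not describe their scoring rules, so the Python sketch below assumes a common scheme: partial credit per item for the proportion of key answers selected, zero credit when a candidate selects more options than allowed, and equal weighting of items within a case. The item prompts and option labels are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KeyFeatureItem:
    prompt: str
    key_answers: frozenset  # options that correspond to the item's key features
    max_selections: int     # how many options the candidate may choose


def score_item(item: KeyFeatureItem, selected: set) -> float:
    """Proportion of key answers selected; choosing too many options scores zero (assumed rule)."""
    if len(selected) > item.max_selections:
        return 0.0
    return len(item.key_answers & selected) / len(item.key_answers)


def score_case(items: list[KeyFeatureItem], answers: list[set]) -> float:
    """Mean item score for one case, so that each case carries equal weight."""
    if len(items) != len(answers):
        raise ValueError("expected one answer set per item")
    return sum(score_item(i, a) for i, a in zip(items, answers)) / len(items)


# Hypothetical case with two items; option labels are placeholders.
case = [
    KeyFeatureItem("Item 1: select up to three key findings",
                   frozenset({"A", "C"}), max_selections=3),
    KeyFeatureItem("Item 2: select the single priority action",
                   frozenset({"E"}), max_selections=1),
]
answers = [{"A", "B"}, {"E", "F"}]  # item 2 exceeds the selection limit
print(score_case(case, answers))    # (0.5 + 0.0) / 2 -> 0.25
```

The over-selection rule discourages guessing by listing every plausible option.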

Rubric

The rubric is the sixth tool. A rubric is a coherent set of specific criteria describing levels of performance quality [54]. It functions as a blueprint that outlines mastery of a skill [55] and is regarded as a valid and reliable tool for instructors [56], provided that the rubric itself has strong validity [51]. Its validity should be evaluated and confirmed by experts, including nursing education experts and clinical instructors, and by students as stakeholders [57]. Rubrics can facilitate the assessment of clinical reasoning in undergraduate students from their first year through their internship. They can also strengthen students' capacity for self-assessment, helping them develop effective thinking skills, and give instructors the opportunity to monitor students' improvement in clinical reasoning. Instructors can strengthen this skill by providing timely and effective feedback. Rubrics can be used in various educational contexts, including online courses, real-world settings, and clinical education [57, 58]. The data showed that two studies used rubrics. Based on a literature review and expert opinions, Kim et al. designed a rubric with four sections corresponding to the steps of the nursing process: data collection, diagnosis, problem prioritization, and planning. Each step included a description of the student's performance and a score range, and the total score ranged from 2 to 10, giving the instructor information about the student's clinical reasoning status. Kim et al. indicated that the validity of this method diminishes if only a single facilitator is used, which is a key limitation of the tool [59]. In their study, Son et al. used a rubric developed by Tanner.
This rubric consisted of four sections: noticing (3 items about understanding the patient's condition and relevant information), interpreting (2 items about interpreting the patient's condition and relevant information), responding (3 items about establishing rapport with the patient), and giving feedback (2 items about reflecting on nursing practice). The rubric had 10 items with a total score ranging from 10 to 40 that measured the student's clinical reasoning at four levels: initiating, developing, achieving, and commendable [60].
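The 10 to 40 range of the rubric used by Son et al. follows from rating each of its 10 items at one of four levels, if the levels are scored 1 to 4 (10 × 1 = 10 up to 10 × 4 = 40). The sketch below totals one student's ratings by section under that assumption; the numeric level values, the function name `rubric_total`, and the example ratings are illustrative assumptions rather than details reported in the reviewed study.

```python
# Assumed numeric values for the four performance levels named in the rubric.
LEVELS = {"initiating": 1, "developing": 2, "achieving": 3, "commendable": 4}

# Items per section as described for the rubric used by Son et al.
# ("reflecting" is the fourth section, on reflecting on nursing practice).
ITEMS_PER_PHASE = {"noticing": 3, "interpreting": 2, "responding": 3, "reflecting": 2}


def rubric_total(ratings: dict[str, list[str]]) -> tuple[dict[str, int], int]:
    """Convert level labels to points; return per-section subtotals and the total."""
    subtotals = {}
    for phase, n_items in ITEMS_PER_PHASE.items():
        labels = ratings[phase]
        if len(labels) != n_items:
            raise ValueError(f"{phase}: expected {n_items} ratings, got {len(labels)}")
        subtotals[phase] = sum(LEVELS[label] for label in labels)
    return subtotals, sum(subtotals.values())


# Hypothetical ratings for one student; totals can run from 10 to 40.
example = {
    "noticing": ["developing", "achieving", "achieving"],
    "interpreting": ["developing", "developing"],
    "responding": ["achieving", "commendable", "achieving"],
    "reflecting": ["initiating", "developing"],
}
subtotals, total = rubric_total(example)
print(subtotals, total)
```

Keeping section subtotals alongside the total lets an instructor see which phase of reasoning needs feedback, which is the monitoring role the rubric literature emphasizes.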

Outcome-Present State Test (OPT)

The seventh tool is the OPT. It provides a structure for assessing clinical reasoning that allows students to consider simultaneously the relationships among diagnoses, interventions, and outcomes, together with the evidence used to make judgments [61]. It also helps improve nurses' ability to solve the patient's problems in the present situation and strengthens thinking skills as students analyze nursing problems [62]. The findings showed that two studies used the OPT to assess nursing students' clinical reasoning. Seo and Eom used the version of the test presented by Kuiper, with total scores ranging from 0 to 78 and higher scores indicating better clinical reasoning; in their study, the tool's reliability was reported to be 0.88 [63]. Kautz et al. also used the OPT model. They reported that the model lets students consider many nursing care problems simultaneously, along with how these problems are interconnected and influence each other, and that such systems thinking helps identify which problem or issue has the greatest impact and is most important for care planning. The tool that supports the identification of this "key issue" is called the clinical reasoning web, which resembles a concept map of the relationships among nursing diagnoses or care needs arising from medical conditions. Once the "key issue" has been identified, students are challenged to identify the outcomes that result from the problems. As a limitation, Kautz et al. pointed out that the tool evaluates nursing students' clinical reasoning through analysis of their coursework, which reduces the internal validity of the findings [64].
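The clinical reasoning web described by Kautz et al. can be pictured as a small graph whose nodes are nursing diagnoses or care needs and whose links join problems judged to influence one another. The sketch below assumes a simple heuristic, treating the most connected problem as the candidate "key issue"; the function name `key_issue`, the tie-breaking rule, and the heart failure example are hypothetical, and in practice students and instructors judge the key issue clinically rather than by counting links alone.

```python
from collections import Counter


def key_issue(links: list[tuple[str, str]]) -> str:
    """Return the problem with the most connections in a clinical reasoning web.

    Each link is an undirected pair of problems judged to influence each other.
    Ties are broken alphabetically so the result is deterministic.
    """
    if not links:
        raise ValueError("the reasoning web has no links")
    degree = Counter()
    for a, b in links:
        degree[a] += 1
        degree[b] += 1
    # Highest degree first; among equals, the alphabetically first problem.
    return min(degree, key=lambda problem: (-degree[problem], problem))


# Hypothetical web for a patient with heart failure.
web = [
    ("decreased cardiac output", "activity intolerance"),
    ("decreased cardiac output", "excess fluid volume"),
    ("decreased cardiac output", "anxiety"),
    ("excess fluid volume", "impaired gas exchange"),
    ("impaired gas exchange", "anxiety"),
]
print(key_issue(web))
```

Once a key issue is chosen this way, the OPT step that follows (specifying outcomes for the problems) would still be carried out by the student.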

The Triple Jump Exercise

The triple jump exercise, the eighth tool, is an assessment method developed within the McMaster curriculum to evaluate students' clinical reasoning skills. The exercise begins with a written clinical scenario, from which the student formulates a hypothesis. A two-hour self-directed learning session on the new topics raised by the scenario follows, and the exercise concludes with a 30-minute debriefing and feedback session. The initial version of the exercise was designed as an oral test and was assessed subjectively with a pass-or-fail grading system [65]. The triple jump exercise offers a realistic approach to evaluating students' competence within a problem-based curriculum [65]. However, it has faced problems of validity and stability that hamper its standardization [66]. Two studies used triple jump exercises. Khanyile and Mfidi designed a triple jump exercise in which the first stage was problem definition, the second was information search, and the third was intervention. The exercise allowed students to examine the problem and evaluate ways of solving it while verifying their knowledge with the examiner [67]. In another study, Moghadam et al. used a triple jump exercise to assess the clinical reasoning of intern students in a respiratory course. Students were first presented with scenarios based on common respiratory diseases and given a maximum of 20 minutes to extract the required information from electronic sources. They then had 45 minutes to answer the questions posed at the end of the scenarios using the information obtained. The professors scored the answers on a 0-20 scale to assess the students' clinical reasoning skills [68].

Researcher-made Questionnaires

The ninth tool is the researcher-made questionnaire. Koivisto et al. used simulation games to strengthen students' clinical reasoning. To this end, they developed a researcher-made instrument based on the clinical reasoning process proposed by Levett-Jones et al. and on a review of a qualitative study of students' experiences of learning through simulation games. The questionnaire had 14 items scored on a 5-point Likert scale ranging from "very much" to "not at all." The items were first pilot-tested on five nursing students, after which content validity was checked and confirmed by two nursing instructors with doctoral degrees and one instructor experienced in simulation games. The authors acknowledged the tool's limitations and suggested further validity and reliability testing in future studies [69].
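The review does not state how the three experts' judgments of content validity were quantified in Koivisto et al.'s study. One widely used approach, shown here only as an assumption, is the item-level content validity index (I-CVI): each expert rates every item's relevance on a 4-point scale, and the I-CVI is the proportion of experts who rate the item 3 or 4; the scale-level index (S-CVI/Ave) is the mean of the item indices. The function names and ratings below are hypothetical.

```python
def item_cvi(ratings: list[int]) -> float:
    """Proportion of experts who rated the item relevant (3 or 4 on a 1-4 scale)."""
    if not ratings:
        raise ValueError("at least one expert rating is required")
    relevant = sum(1 for r in ratings if r >= 3)
    return relevant / len(ratings)


def scale_cvi_ave(items: list[list[int]]) -> float:
    """S-CVI/Ave: the average of the item-level CVIs across all items."""
    return sum(item_cvi(r) for r in items) / len(items)


# Hypothetical ratings: one list per item, one rating per expert (three experts).
ratings = [
    [4, 4, 3],
    [3, 4, 4],
    [2, 4, 3],
]
print([round(item_cvi(r), 2) for r in ratings])
print(round(scale_cvi_ave(ratings), 2))
```

With a panel of only three experts, a single rating below 3 drops an item's I-CVI to 0.67, which is why some methodologists expect small panels to agree unanimously before an item is retained.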

Scenarios/Clinical Cases

Scenarios and clinical cases represent the tenth tool. Yauri et al. developed a scenario focused on high-risk pregnancy, with content based on the nursing curriculum and reference materials for maternal health courses. Five questions about the scenario were posed to assess students' clinical reasoning. The responses were structured according to the Botti and Reeve system; however, no further details were provided about this system or the scoring method [70]. Similarly, Tinôco et al. used the case presentation method to evaluate clinical reasoning. Students' responses to the presented case were scored across stages of clinical reasoning: 1.2 points for the initial stages, 4.9 points for diagnostic inference, and 3 points for the prioritization of diagnoses [71].

Conclusion

Given that clinical reasoning skills are crucial for ensuring that nursing students are competent in patient care, nursing instructors should assess these skills throughout students' education and actively work to enhance students' clinical reasoning by providing timely and constructive feedback.

Students' clinical reasoning can be evaluated with various assessment tools. This review presented a list of such tools so that nursing instructors can familiarize themselves with the available options and incorporate them effectively into clinical settings. The review showed that a nursing-specific tool, the Nurses Clinical Reasoning Scale (NCRS), was used in five studies and had acceptable validity and reliability.

This tool can be a suitable choice for nursing instructors. However, the reviewed studies have not thoroughly evaluated the validity and reliability of clinical reasoning assessment instruments. This lack of examination raises concerns about the trustworthiness and credibility of the findings derived from assessing students' clinical reasoning. Consequently, such outcomes cannot be confidently relied upon to enhance the necessary competencies of nursing students.

Additionally, the absence of established validity and reliability may restrict a tool's applicability in future research and clinical practice. Therefore, instructors should assess the validity and reliability of any instrument before using it. Appropriate psychometric evaluations must be conducted to adapt clinical reasoning assessment tools to different educational and cultural contexts, or, at a minimum, validity and reliability should be established through standardized methods to ensure their usability. Among the ten identified tools, the NCRS has been used in five studies and is recognized as a valid and reliable assessment tool; it therefore warrants further evaluation in a dedicated psychometric study. If its validity and reliability are confirmed, the NCRS can serve as a trustworthy instrument for nursing educators to assess students' clinical reasoning abilities.
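Several of the reviewed studies report reliability as Cronbach's alpha, one of the standard internal-consistency checks a psychometric evaluation would include. For a scale of k items, alpha = k / (k - 1) × (1 - sum of the item variances / variance of the total scores). The sketch below computes it from a respondents-by-items score matrix using Python's standard `statistics.variance` (sample variance); the function name and the response data are hypothetical, and internal consistency alone does not establish an instrument's validity.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a matrix with one row per respondent, one column per item."""
    if len(scores) < 2 or len(scores[0]) < 2:
        raise ValueError("need at least two respondents and two items")
    n_items = len(scores[0])
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*scores)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in scores])
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical 5-point Likert responses: 5 students x 4 items.
responses = [
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 5, 5, 4],
    [2, 3, 2, 2],
    [4, 4, 3, 4],
]
print(round(cronbach_alpha(responses), 2))
```

Using the same (sample) variance for items and totals keeps the ratio consistent; switching both to population variance would give the same alpha.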

Ethical considerations

This study was conducted as part of a research project approved by the National Center for Strategic Research in Medical Education, Tehran (approval code 4000739). To prevent bias in article selection, two raters reviewed all articles and assessed their relevance; any article whose relevance was in doubt was reviewed by a third rater. The authors ensured the confidentiality of the data during the retrieval and review of articles, and all references used were cited meticulously.

Artificial intelligence utilization for article writing

No.

Acknowledgment

The authors thank the National Center for Strategic Research in Medical Education, Tehran, for funding and approving the research proposal (grant number 4000739).

Conflict of interest statement

The authors declare no conflicts of interest.

Author contributions

All authors contributed to every stage of the study and manuscript, including the study idea or design, data collection, data analysis and interpretation, manuscript preparation, review and editing, and final approval.

Funding

National Center for Strategic Research in Medical Education, Tehran.

Data availability statement

The datasets are available from the corresponding author upon request.

Table 7. A review of the studies conducted on clinical reasoning assessment tools


Article Type: Review

Subject:

Medical Education

Received: 2024/12/7 | Accepted: 2025/05/21 | Published: 2025/07/13


References

1. Connor DM, Durning SJ, Rencic JJ. Clinical reasoning as a core competency. Academic Medicine. 2020;95 (8):1166-71. [DOI]

2. Monajemi A, Adibi P, Arabshahi KS, et al. The battery for assessment of clinical reasoning in the Olympiad for medical sciences students. Iranian Journal of Medical Education. 2011;10 (5):1056-67.

3. Levett-Jones T, Hoffman K, Dempsey J, et al. The ‘five rights’ of clinical reasoning: an educational model to enhance nursing students’ ability to identify and manage clinically ‘at risk’patients. Nurse Education Today. 2010;30(6):515-20. [DOI]

4. Luo QQ, Petrini MA. A review of clinical reasoning in nursing education: based on high-fidelity simulation teaching method. Frontiers of Nursing. 2018;5(3):175-83 [DOI]

5. Kuiper R, Pesut D, Kautz D. Promoting the self-regulation of clinical reasoning skills in nursing students. The Open Nursing Journal. 2009;3:76–85. [DOI]

6. Jensen R. Clinical reasoning during simulation: Comparison of student and faculty ratings. Nurse Education in Practice. 2013;13(1):23-8. [DOI]

7. Gonzalez L. Teaching clinical reasoning piece by piece: A clinical reasoning concept-based learning method. Journal of Nursing Education. 2018;57(12):727-35 [DOI]

8. Kuiper R, Heinrich C, Matthias A, Graham MJ, Bell-Kotwall L. Debriefing with the OPT model of clinical reasoning during high fidelity patient simulation. International Journal of Nursing Education Scholarship. 2008;5 (1) [DOI]

9. Harmon MM, Thompson C. Clinical reasoning in pre-licensure nursing students. Teaching and Learning in Nursing. 2015;10(2):63-70. [DOI]

10. Hunter S, Arthur C. Clinical reasoning of nursing students on clinical placement: clinical educators' perceptions. Nurse Education in Practice. 2016;18:73-9 [DOI]

11. Aitken LM. Critical care nurses' use of decision‐making strategies. Journal of Clinical Nursing. 2003;12(4):476-83 [DOI]

12. Göransson KE, Ehnfors M, Fonteyn ME, Ehrenberg A. Thinking strategies used by registered nurses during emergency department triage. Journal of Advanced Nursing. 2008;61(2):163-72. [DOI]

13. Göransson KE, Ehrenberg A, Marklund B, Ehnfors M. Emergency department triage: is there a link between nurses’ personal characteristics and accuracy in triage decisions? Accident and Emergency Nursing. 2006;14(2):83-8. [DOI]

14. Lee J, Lee YJ, Bae J, Seo M. Registered nurses' clinical reasoning skills and reasoning process: a think-aloud study. Nurse Education Today. 2016;46:75-80 [DOI]

15. 15. NSW Ministry of Health. Patient Safety and Clinical Quality Program. [Online]. Available from: [Accessed: May 10, 2025]. [DOI]

16. Lapkin S, Levett-Jones T, Bellchambers H, Fernandez R. Effectiveness of patient simulation manikins in teaching clinical reasoning skills to undergraduate nursing students: a systematic review. Clinical Simulation in Nursing. 2010;6(6):207-22. [DOI]

17. Banning M. Clinical reasoning and its application to nursing: concepts and research studies. Nurse Education in Practice. 2008;8(3):177-83. [DOI]

18. Forsberg E, Georg C, Ziegert K, Fors U. Virtual patients for assessment of clinical reasoning in nursing—a pilot study. Nurse Education Today. 2011;31(8):757-62 [DOI]

19. Latha Damodaran SB, Jaideep Mahendra, Aruna S. Assessment of clinical reasoning in b sc nursing students. International Journal of Science and Research 2017;6(8):1792-4.

20. Forsberg E, Ziegert K, Hult H, Fors U. Clinical reasoning in nursing, a think-aloud study using virtual patients–a base for an innovative assessment. Nurse Education Today. 2014;34(4):538-42. [DOI]

21. Cook DA, Triola MM. Virtual patients: a critical literature review and proposed next steps. Medical Education. 2009;43(4):303-11. [DOI]

22. Jael SA. Use of the Outcome-Present State-Test model of clinical reasoning with Filipino nursing students. Loma Linda University Electronic Theses, Dissertations & Projects. 2016:387.

23. Ilgen JS, Humbert AJ, Kuhn G, et al. Assessing diagnostic reasoning: a consensus statement summarizing theory, practice, and future needs. Academic Emergency Medicine. 2012;19(12):1454-61. [DOI]

24. Daniel M, Rencic J, Durning SJ, et al. Clinical reasoning assessment methods: a scoping review and practical guidance. Academic Medicine. 2019;94(6):902-12. [DOI]

25. Al-Kadri HM, Al-Moamary MS, Roberts C, Van der Vleuten CP. Exploring assessment factors contributing to students' study strategies: literature review. Medical Teacher. 2012;34(1):42-50. [DOI]

26. Epstein RM. Assessment in medical education. New England Journal of Medicine. 2007;356(4):387-96. [DOI]

27. Van der Vleuten C, Schuwirth L, Scheele F, Driessen E, Hodges B. The assessment of professional competence: building blocks for theory development. Best Practice & Research Clinical Obstetrics & Gynecology. 2010;24(6):703-19. [DOI]

28. van der Vleuten CP, Schuwirth L, Driessen E, Dijkstra J, Tigelaar D, Baartman L, Van Tartwijk J. A model for programmatic assessment fit for purpose. Medical Teacher. 2012;34(3):205-14. [DOI]

29. Lee J, Park CG, Kim SH, Bae J. Psychometric properties of a clinical reasoning assessment rubric for nursing education. BMC Nursing. 2021;20(1):1-9. [DOI]

30. Liou SR, Liu HC, Tsai HM, et al. The development and psychometric testing of a theory‐based instrument to evaluate nurses’ perception of clinical reasoning competence. Journal of Advanced Nursing. 2016;72(3):707-17. [DOI]

31. Cheng CY, Hung CC, Chen YJ, Liou SR, Chu TP. Effects of an unfolding case study on clinical reasoning, self-directed learning, and team collaboration of undergraduate nursing students: a mixed methods study. Nurse Education Today. 2024;137:106168. [DOI]

32. Hong S, Lee J, Jang Y, Lee Y. A cross-sectional study: what contributes to nursing students’ clinical reasoning competence? International Journal of Environmental Research and Public Health. 2021;18(13):6833. [DOI]

33. Hu F, Yang J, Yang BX, et al. The impact of simulation-based triage education on nursing students' self-reported clinical reasoning ability: a quasi-experimental study. Nurse Education in Practice. 2021;50:102949. [DOI]

34. Johnston S, Nash R, Coyer F. An evaluation of simulation debriefings on student nurses’ perceptions of clinical reasoning and learning transfer: a mixed methods study. International Journal of Nursing Education Scholarship. 2019;16(1):20180045. [DOI]

35. Marcomini I, Terzoni S, Destrebecq A. Fostering nursing students’ clinical reasoning: a QSEN-based teaching strategy. Teaching and Learning in Nursing. 2021;16(4):338-41. [DOI]

36. Taghizadeh Z, Ebadi A, Montazeri A, Shahvari Z, Tavousi M, Bagherzadeh R. Psychometric properties of health-related measures. Part 1: translation, development, and content and face validity. Payesh (Health Monitor). 2017;16(3):343-57.

37. Deschênes M-F, Goudreau J. Addressing the development of both knowledge and clinical reasoning in nursing through the perspective of script concordance: an integrative literature review. Journal of Nursing Education and Practice. 2017;7(12):28-38. [DOI]

38. Thampy H, Willert E, Ramani S. Assessing clinical reasoning: targeting the higher levels of the pyramid. Journal of General Internal Medicine. 2019;34:1631-6. [DOI]

39. Belhomme N, Jego P, Pottier P. Gestion de l’incertitude et compétence médicale: une réflexion clinique et pédagogique [Managing uncertainty and medical competence: a clinical and pedagogical reflection]. La Revue de Médecine Interne. 2019;40(6):361-7. [DOI]

40. Nouh T, Boutros M, Gagnon R, et al. The script concordance test as a measure of clinical reasoning: a national validation study. The American Journal of Surgery. 2012;203(4):530-4. [DOI]

41. Blanié A, Amorim M-A, Benhamou D. Comparative value of a simulation by gaming and a traditional teaching method to improve clinical reasoning skills necessary to detect patient deterioration: a randomized study in nursing students. BMC Medical Education. 2020;20:1-11. [DOI]

42. Damodaran L, Mahendra J, Aruna S, Dave PH, Little M. Exploration of clinical reasoning skills in undergraduate nursing students. NeuroQuantology. 2023;21(5):776. [DOI]

43. Dory V, Gagnon R, Vanpee D, Charlin B. How to construct and implement script concordance tests: insights from a systematic review. Medical Education. 2012;46(6):552-63. [DOI]

44. Leclerc A-A, Nguyen LH, Charlin B, Lubarsky S, Ayad T. Assessing the acceptability of script concordance testing: a nationwide study in otolaryngology. Canadian Journal of Surgery. 2021;64(3):317. [DOI]

45. Hekmatipour N, Jooybari L, Sanagoo A. Can we use the “script concordance” test as a valid alternative method to evaluate clinical reasoning skills in nursing students? Iranian Journal of Medical Education. 2017;17:381-3.

46. Arisudhana GB, Anggayani AN, Kadiwanu AO, Cahyanti NE. Kemampuan penalaran klinis mahasiswa perawat tahun keempat pada masalah keperawatan medikal bedah [Clinical reasoning ability of fourth-year nursing students on medical-surgical nursing problems]. Journal Center of Research Publication in Midwifery and Nursing. 2019;3(1):58-62. [DOI]

47. Arisudhana GA, Martini NM. Implementation of the blended learning method to enhance clinical reasoning among nursing students. Jurnal Keperawatan Soedirman. 2022;17(2):57-62. [DOI]

48. Forneris SG, Neal DO, Tiffany J, et al. Enhancing clinical reasoning through simulation debriefing: a multisite study. Nursing Education Perspectives. 2015;36(5):304-10. [DOI]

49. Hrynchak P, Glover Takahashi S, Nayer M. Key‐feature questions for assessment of clinical reasoning: a literature review. Medical Education. 2014;48(9):870-83. [DOI]

50. Hinchy J, Farmer E. Assessing general practice clinical decision-making skills: the key features approach. Australian Family Physician. 2005;34(12):1059-61.

51. D'Souza P, Renjith V, George A, Renu G. Rubrics in nursing education. International Journal of Advanced Research. 2015;3(5):423-8.

52. Gouifrane R, Lajane H, Belaaouad S, Benmokhtar S, Lotfi S, Dehbi F, Radid M. Effects of a blood transfusion course using a blended learning approach on the acquisition of clinical reasoning skills among nursing students in Morocco. International Journal of Emerging Technologies in Learning. 2020;15(18):260-9. [DOI]

53. Hosseini A, Keshmiri F, Rooddehghan Z, Mokhtari Z, Gaznag ES, Bahramnezhad F. Design, implementation and evaluation of clinical pharmacology simulation training method for nursing students of Tehran school of nursing and midwifery. Journal of Medical Education and Development. 2021;16(3):151-162. [DOI]

54. Brookhart SM. How to create and use rubrics for formative assessment and grading. ASCD; 2013.

55. Stanley T. Using rubrics for performance-based assessment: a practical guide to evaluating student work. Routledge; 2021: 162.

56. Stevens DD, Levi AJ. Introduction to rubrics: an assessment tool to save grading time, convey effective feedback, and promote student learning. Routledge; 2023. [DOI]

57. Ramazanzadeh N, Ghahramanian A, Zamanzadeh V, Valizadeh L, Ghaffarifar S. Development and psychometric testing of a clinical reasoning rubric based on the nursing process. BMC Medical Education. 2023;23(1):98. [DOI]

58. Ramazanzadeh N, Ghahramanian A, Zamanzadeh V, Valizadeh L, Ghaffarifar S. Development and psychometric testing of a clinical reasoning rubric based on the nursing process. BMC Medical Education. 2023;23(1):98. [DOI]

59. Kim JY, Kim EJ. Effects of simulation on nursing students' knowledge, clinical reasoning, and self-confidence: a quasi-experimental study. Korean Journal of Adult Nursing. 2015;27(5):604-11. [DOI]

60. Son HK. Effects of simulation with problem-based learning (S-PBL) on nursing students’ clinical reasoning ability: based on Tanner’s clinical judgment model. BMC Medical Education. 2023;23(1):601. [DOI]

61. Kuiper RA, Pesut DJ. Promoting cognitive and metacognitive reflective reasoning skills in nursing practice: self‐regulated learning theory. Journal of Advanced Nursing. 2004;45(4):381-91. [DOI]

62. Kautz D, Kuiper R, Bartlett R, Buck R, Williams R, Knight-Brown P. Building evidence for the development of clinical reasoning using a rating tool with the outcome-present state-test (OPT) model. Southern Online Journal of Nursing Research. 2009;9(1):1-8.

63. Seo YH, Eom MR. The effect of simulation nursing education using the outcome-present state-test model on clinical reasoning, the problem-solving process, self-efficacy, and clinical competency in Korean nursing students. Healthcare. 2021;9(3):243. [DOI]

64. Kautz DD, Kuiper R, Pesut DJ, Knight-Brown P, Daneker D. Promoting clinical reasoning in undergraduate nursing students: application and evaluation of the outcome present state test (OPT) model of clinical reasoning. International Journal of Nursing Education Scholarship. 2005;2(1). [DOI]

65. Van Der Vleuten CP. The assessment of professional competence: developments, research, and practical implications. Advances in Health Sciences Education. 1996;1(1):41-67. [DOI]

66. Smith RM. The triple-jump examination as an assessment tool in the problem-based medical curriculum at the University of Hawaii. Academic Medicine. 1993;68(5):366-72. [DOI]

67. Khanyile T, Mfidi F. The effect of curricula approaches to the development of the student’s clinical reasoning ability. Curationis. 2005;28(2):70-6. [DOI]

68. Moghadam MP. The effect of triple-jump examination (TJE)-based assessment on clinical reasoning of nursing interns in Zabol during 2017-2018. Education. 2012;2010. [DOI]

69. Koivisto J-M, Multisilta J, Niemi H, Katajisto J, Eriksson E. Learning by playing: a cross-sectional descriptive study of nursing students' experiences of learning clinical reasoning. Nurse Education Today. 2016;45:22-8. [DOI]

70. Yauri I. Improving student nurses’ clinical-reasoning skills: implementation of a contextualized, guided learning experience. JKP: Jurnal Keperawatan Padjadjaran. 2019;7(2):152-63.

71. de Sá Tinôco JD, Cossi MS, Fernandes MI, Paiva AC, de Oliveira Lopes MV, de Carvalho Lira AL. Effect of educational intervention on clinical reasoning skills in nursing: a quasi-experimental study. Nurse Education Today. 2021;105:105027. [DOI]

72. Forsberg E, Ziegert K, Hult H, Fors U. Assessing progression of clinical reasoning through virtual patients: an exploratory study. Nurse Education in Practice. 2016;16(1):97-103. [DOI]

73. Georg C, Karlgren K, Ulfvarson J, Jirwe M, Welin E. A rubric to assess students' clinical reasoning when encountering virtual patients. Journal of Nursing Education. 2018;57(7):408-15. [DOI]

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.