

Volume 18, Issue 3 (2025)

J Med Edu Dev 2025, 18(3): 90-98


Ethics code: IR.ZAUMS.REC.1402.235


Arab Borzu Z, Hegazi I, Amiri Moghadam M H, Bakhtiyari M, Hosseini Koukamari P. Translation and psychometric evaluation of a self-regulated learning questionnaire for blended learning among Iranian students. J Med Edu Dev 2025; 18 (3) :90-98

URL: http://edujournal.zums.ac.ir/article-1-2315-en.html


Zahra Arab Borzu1, Iman Hegazi2, Mohammad Hassan Amiri Moghadam3, Mahdieh Bakhtiyari3, Parisa Hosseini Koukamari *4

1- Department of Epidemiology & Biostatistics, Zahedan University of Medical Sciences, Zahedan, Iran

2- Medical Education Unit, School of Medicine, Western Sydney University, Campbelltown, Australia

3- Student Research Committee, Zahedan University of Medical Sciences, Zahedan, Iran

4- Social Determinants of Health Research Center, Saveh University of Medical Sciences, Saveh, Iran



Abstract

Background & Objective: The rapid advancement of the Internet and technology has enabled the widespread adoption of blended learning in medical education. However, there is no validated Persian scale to measure self-regulated learning in blended learning among Iranian students. This study aims to fill this gap by translating and validating an existing tool for assessing self-regulated learning in a blended learning environment among Iranian students.

Materials & Methods: The forward-backward method was used to translate the original English questionnaire into Persian. After face and content validity were assessed, the psychometric properties of the Persian version were evaluated among 330 students from Zahedan University of Medical Sciences, Iran. Construct validity was analyzed using Exploratory Factor Analysis (EFA) and Confirmatory Factor Analysis (CFA). To assess reliability, we calculated the Average Inter-Item Correlation (AIC), Cronbach's alpha, and McDonald's omega. Convergent and discriminant validity were examined using Average Variance Extracted (AVE), Maximum Shared Variance (MSV), and the Fornell and Larcker criterion.

Results: The findings revealed that the Persian version of the Blended Learning Questionnaire (BLQ) consists of four distinct factors: Accessibility and Guidance (4 items), Social and Contextual (4 items), Delivery of Content (6 items), and Intrinsic and Extrinsic (2 items). Together, these factors accounted for 52.43% of the total variance in the BLQ. The results from the CFA indicated that all goodness-of-fit metrics supported the adequacy of the model. Additionally, the Cronbach's alpha, McDonald's omega, and Composite Reliability (CR) scores were all greater than 0.7, demonstrating strong internal consistency. Moreover, the indices showed acceptable levels of both convergent and discriminant validity for the Persian version of the BLQ.

Conclusion: The study's findings indicated that the Persian version of the BLQ demonstrated acceptable validity and reliability among Iranian students, making it suitable for academic and research purposes in Persian-speaking countries.

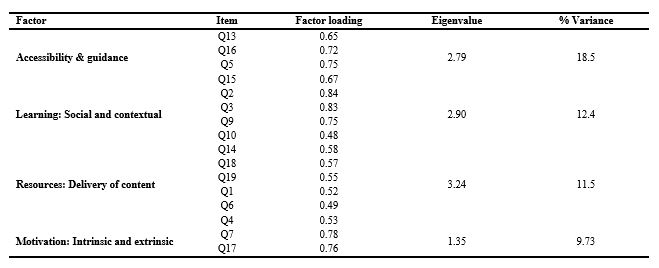

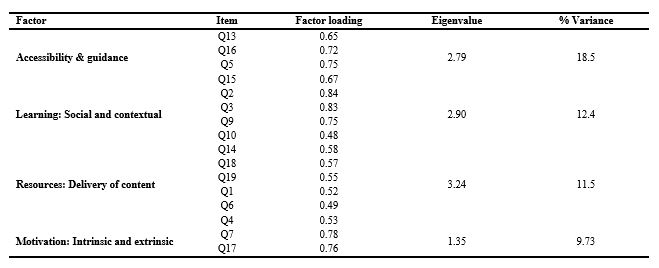

Table 1. Exploratory factor analysis results of the Blended Learning Questionnaire-Persian (BLQ-P)

Note: Exploratory factor analysis with principal component analysis and varimax rotation was performed on 120 participants to examine the factor structure of the BLQ-P.

Abbreviations: n, number of participants; BLQ-P, blended learning questionnaire-Persian; Q, questionnaire item.

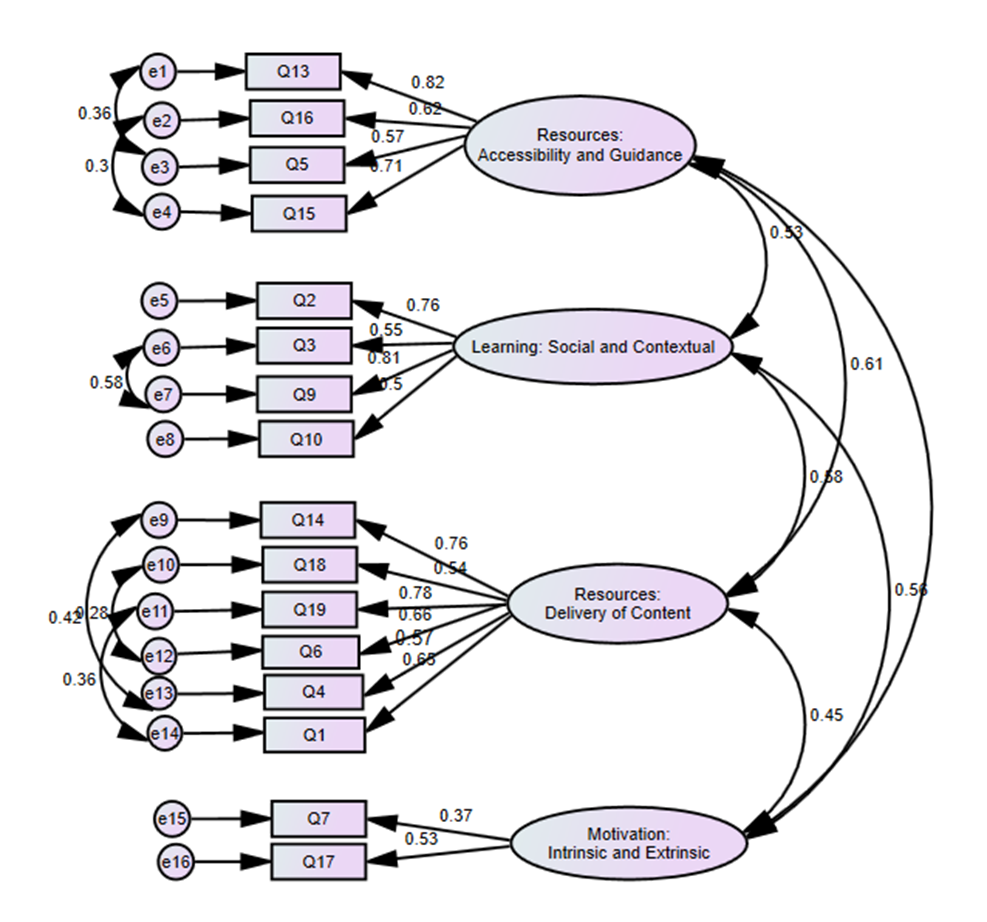

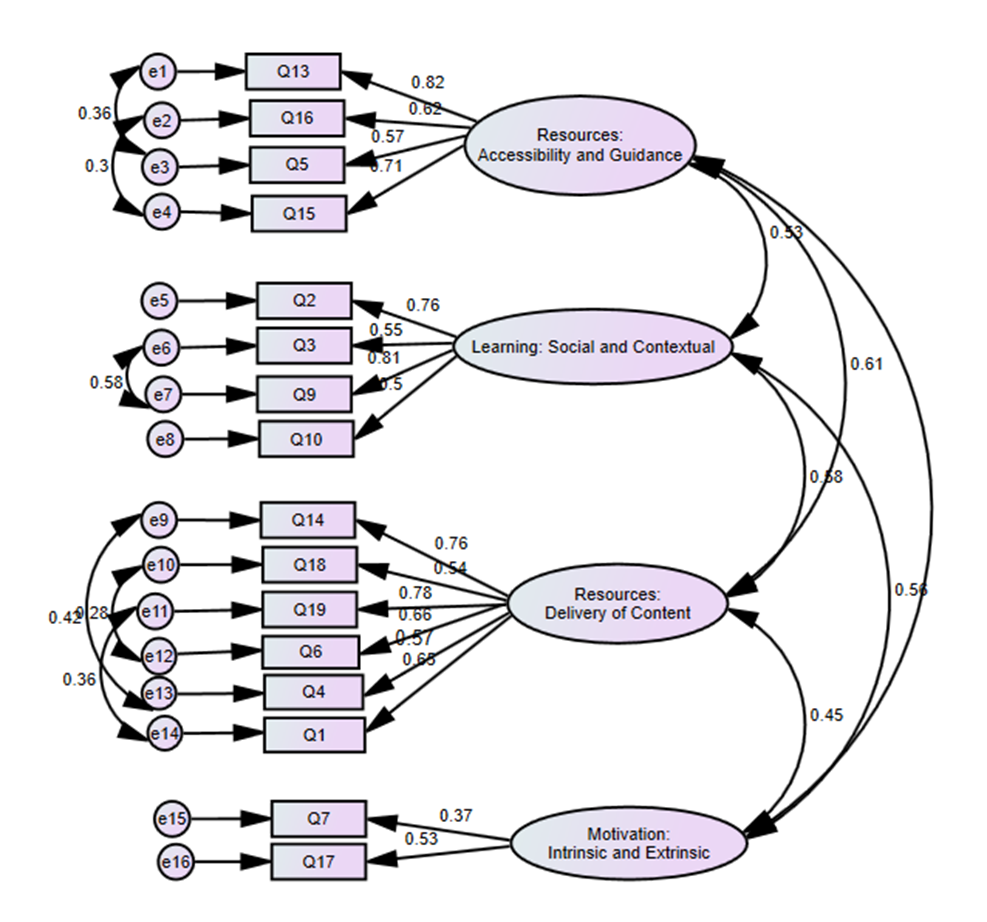

Figure 1. Confirmatory factor analysis path diagram of the four-factor Blended Learning Questionnaire-Persian (BLQ-P) model

Table 2. Convergent validity, discriminant validity, and reliability indices of the Blended Learning Questionnaire-Persian (BLQ-P)

Discussion

The objective of this research was to investigate the psychometric properties of the BLQ among Iranian students. The Persian adaptation of the BLQ comprises 16 items organized into four subscales: Accessibility and Guidance (4 items), Social and Contextual (4 items), Delivery of Content (6 items), and Intrinsic and Extrinsic (2 items). Compared with the original questionnaire, three items were removed: two from the motivation (Intrinsic and Extrinsic) subscale and one from the Social and Contextual subscale. To the best of our knowledge, apart from the current study, the only other validation of the blended learning questionnaire [15] is a Greek version [29]. That study also reported good psychometric properties, similar to those of the original version. In the original study [15], content validity was evaluated by students who participated in focus group discussions, to ensure that the items in the identified domains reflected what had previously been discussed. The content validity of the Greek version was assessed by administering the questionnaire to 11 students who were not part of the main study sample, in order to identify potential difficulties and ambiguities for the target audience; the researchers found no ambiguity in the items. Similarly, in the current study, face and content validity were examined, and the participating students reported no difficulties in understanding the items. In the original study, exploratory factor analysis showed that of the 19 questions, 10 related to resources, 5 to learning, and 4 to motivation; together, these factors explained 51.72% of the total variance. In the current study, four components accounted for 52.43% of the total variance, after one question from the Social and Contextual component and two questions from the Motivation component were removed during this phase of the analysis.
In the exploratory factor analysis of the Greek version, three of the 19 questions were removed at this stage. The percentage of variance explained was higher in the Greek version than in the current study: across the three factors, the resource component comprised seven items, the learning component three items, and the motivation component five items, together explaining 86.63% of the variance. The researchers attributed the removal of one item and the resulting three-factor structure to differences in cultural background and to the inherent properties of the questions themselves.

In the Greek version, the resource items were integrated into one factor, and the authors suggested that adding more questions related to these areas could improve Cronbach's alpha. In the current study, the confirmatory factor analysis indices showed that the four-dimensional structure of the questionnaire fits the data well, indicating that the questionnaire can be used in future research in Iran. Neither the original nor the Greek version was examined with confirmatory factor analysis.

In the original study, Spearman's correlation was used to measure the association between the items of the newly designed questionnaire and those of the Motivated Strategies for Learning Questionnaire [30]. Both questionnaires were categorized into motivational beliefs, cognitive and metacognitive strategies, and resource management strategies, and the correlations between the items of the two questionnaires ranged from 0.3 to 0.5. Convergent validity was not assessed in the Greek version. In the current study, internal consistency and convergent validity were assessed using composite reliability and AVE. The composite reliability of each construct was greater than 0.7 and the AVE was higher than 0.5, indicating good convergent validity.

The Cronbach's alpha coefficients of this tool were acceptable, indicating that the items within each subscale consistently measure the same underlying dimension. In the original and Greek versions, respectively, the reported values were 0.77 and 0.79 for the resource dimension (access and guidance), 0.72 and 0.76 for the learning dimension, and 0.55 and 0.71 for the motivation dimension. In the Persian version, the reliability was 0.82 for the resource dimension (access and guidance), 0.74 for the learning dimension, 0.72 for the access to content dimension, and 0.70 for the motivation dimension. The overall reliability of the tool was 0.786. All reliability indices for the subscales, including Cronbach's alpha, CR, and AIC, were acceptable.

The first factor in this questionnaire, resources for accessibility and guidance, was supported by the confirmatory factor analysis. Studies have indicated that students perceive online resources and educational materials positively as supports for independent learning in a blended learning environment [31, 32]. Technological and socio-psychological support is recommended to reduce the complexity of blended learning designs, and such support has increased students' motivation and active participation in learning activities [33, 34]. The second factor examines the social and contextual aspects of student learning, including teacher-student and peer-to-peer interactions as well as the learning environment and culture. These factors present both opportunities and potential challenges for students' learning experiences and identity formation [35, 36].

The third factor focuses on the resources used in content delivery: identifying learning needs, and creating and testing resources that support academic performance.

Research has demonstrated that a well-designed blended learning framework, incorporating thoughtfully developed or collated resources, can significantly enhance student learning, often surpassing the outcomes of traditional face-to-face instruction [36]. The fourth factor centers on student motivation within the learning environment. A blended learning setting fosters self-regulation skills, which can enhance student participation, motivation, and initiative in learning [37, 38]. Using self-regulation strategies can also increase students' likelihood of academic progression and success [39] and enhance their performance, learning, and satisfaction [40]. The ability to self-regulate one's learning has emerged as a key educational goal [41]. This skill is essential for lifelong learning and has a significant impact on the practical and skill-based education of students, particularly those in medical fields.

Blended learning requires students to develop self-regulation skills and technological competencies so that they can manage their learning at their own pace with less instructor facilitation. At the same time, educators should be competent in using and effectively integrating online resources and various teaching methods to design competency-based courses that increase student interaction and performance. The BLQ-P enables educators and researchers to identify specific challenges that Iranian students face in adapting to blended learning formats, such as limited access to digital resources, variability in instructors' digital teaching skills, and students' readiness for self-directed learning.

Conclusion

The translated version can serve as a valuable tool in research and educational settings within the Persian context. The BLQ was initially developed for medical students; however, we applied it to nursing, medicine, and health sciences students, following the precedent of questionnaires such as DREEM, which were initially administered to medical students and later pilot-tested in other medical sciences professions, including nursing and health sciences. Applying the BLQ in this way may provide a more accurate evaluation of how effectively the learning environment promotes self-regulated learning. By using the BLQ-P, stakeholders can systematically evaluate blended learning environments and create targeted interventions to improve student engagement, motivation, and academic outcomes within the Iranian context. Both the strengths and the limitations of the current study should be recognized. In addition to exploratory factor analysis, the present study conducted a confirmatory factor analysis, which was not performed in the original research. However, further research involving larger and more diverse samples is needed. Because the survey was conducted among students at only one university, we recommend using the questionnaire in cross-sectional and comparative studies at other universities to investigate the impact of the blended learning environment on students' performance in classroom and clinical settings. To enhance students' self-regulation skills, instructors should cultivate a student-centered learning environment that encourages students to actively seek appropriate educational materials and resources, thereby strengthening their skills in searching for online resources and materials.

Ethical considerations

Ethical approval for this study was obtained from the Ethics Committee of Zahedan University of Medical Sciences (Code: IR.ZAUMS.REC.1402.235).

The study adhered to all ethical guidelines, which included informing participants in detail about the research objectives, methods, and rationale, ensuring confidentiality of collected data, providing participants the option to withdraw from the study at any stage, and obtaining informed written consent.

Artificial intelligence utilization for article writing

None.

Acknowledgment

The authors would like to express their gratitude to the students who participated in the study.

Conflict of interest statement

There are no conflicts of interest.

Author contributions

ZAB and PHK designed the study and applied for ethics approval. MM and MB collected the data and entered it into the statistical software. ZAB analyzed the data and created the tables and figures. PHK and ZAB wrote the manuscript.

The second draft of the manuscript was organized by IH, who also refined the language and corrected any errors. All authors reviewed and confirmed the final version.

Supporting resources

Not applicable.

Data availability statement

The datasets generated and/or analyzed during this study are not publicly available due to ethical considerations regarding student data and the need to ensure anonymity. However, they can be obtained from the corresponding author upon a reasonable request.


Introduction

Blended Learning (BL) is a pedagogical approach that integrates traditional face-to-face teaching with online educational resources [1, 2]. BL is widely recognized as one of the most effective and popular instructional methods in educational institutions because it offers flexibility, timeliness, and continuous learning [3]. By combining classroom instruction with online components, BL offers unique opportunities for flexible and personalized educational experiences [4]. It not only improves accessibility but also promotes active involvement and teamwork among students [5]. Moreover, teacher-student interaction in blended environments has been shown to significantly influence learners’ self-regulatory behaviors, such as goal-setting, help-seeking, and effort regulation [6].

Self-Regulated Learning (SRL) is a crucial component of academic success. Defined as the process by which learners autonomously manage their educational activities, SRL includes critical skills such as goal setting, self-monitoring, and adaptive learning strategies [7]. These skills enable students to take control of their learning, leading to improved outcomes across diverse educational settings [8]. Research highlights the significance of SRL: students who excel in self-regulation are more likely to overcome academic challenges and achieve higher performance levels [9].

Self-regulation becomes even more vital in blended learning environments, where flexibility is key. Because students often have to manage their learning independently, strong self-regulation skills are crucial for successfully handling the challenges of blended education [10]. Empirical studies suggest that effective self-regulation in these contexts is associated with enhanced academic performance and greater learner satisfaction [8, 11, 12].

The Motivated Strategies for Learning Questionnaire, a validated tool established by Dent and Pintrich [13, 14], has been used to assess SRL since 1991. It is frequently employed to explore the relationship between learners and educators with respect to SRL-related learning techniques and strategies. The Blended Learning Questionnaire (BLQ) is a newer instrument, developed by Ballouk et al. [15], that evaluates medical students’ SRL in the BL environment. The primary aim of this study is to translate and validate this questionnaire on self-regulated learning in a blended learning environment among students of Zahedan University of Medical Sciences (nursing, medicine, and health sciences). The research objectives include assessing the validity and reliability of the translated instrument and examining its applicability within the Iranian educational context. By addressing these aims, the study seeks to improve understanding of how blended learning environments can be optimized to foster self-regulation and improve students' overall educational experiences.

Materials & Methods

Design and setting(s)

This study employed a cross-sectional methodological approach with students from Zahedan University of Medical Sciences. Data collection took place from May 1, 2023, to August 30, 2023.

Participants and sampling

According to Cattell's (1988) guidelines, the recommended number of participants for Exploratory Factor Analysis (EFA) is between 3 and 10 respondents per item [16, 17]. The construct validity of the Persian version of the Blended Learning Questionnaire was assessed through both exploratory and confirmatory factor analyses. A total of 330 students were recruited through convenience sampling: 120 for the EFA and 210 for the Confirmatory Factor Analysis (CFA). The inclusion criterion was enrollment as a nursing, medicine, or health sciences student at Zahedan University of Medical Sciences.
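The respondents-per-item rule above is simple arithmetic. The following is a minimal sketch, assuming the rule is applied to the 19 items of the original BLQ; the function name `recommended_sample_range` is hypothetical and not part of the study.

```python
def recommended_sample_range(n_items: int, low: int = 3, high: int = 10) -> tuple[int, int]:
    """Return the (minimum, maximum) sample size implied by a respondents-per-item rule."""
    return n_items * low, n_items * high

# Original BLQ: 19 items -> 3 to 10 respondents per item
min_n, max_n = recommended_sample_range(19)
efa_sample = 120  # EFA subsample reported in this study
print(min_n, max_n, min_n <= efa_sample <= max_n)  # 57 190 True
```

Under this assumption, the 120 respondents allocated to the EFA fall inside the 57 to 190 range.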

Tools/Instruments

The original version of the Blended Learning Questionnaire comprises 19 items across four subscales: Accessibility and Guidance (4 items), Social and Contextual (5 items), Delivery of Content (6 items), and Intrinsic and Extrinsic (4 items). Each item was assessed using a 7-point Likert scale, ranging from 1 (not true for me at all) to 7 (very true of me) [15].

Translation process

After obtaining the author's permission, the English version of the BLQ was translated into Persian following Beaton's guidelines [18]. Two experts, one with a background in medical education and the other proficient in Persian and English, independently translated the questionnaire. The translators and the primary researcher then reviewed and reconciled the two translations, resolving any discrepancies to create a unified Persian version. Next, a bilingual English-Persian speaker, who was not given the original English version and was instructed not to seek it out, back-translated the questionnaire into English. An expert committee comprising all the translators, the principal researcher, a health education and promotion specialist, and a biostatistician then reviewed all inconsistencies and endorsed the final version. A pilot study to test the feasibility of the questionnaire was conducted among 40 students (35% male and 65% female), who reported that the translated items were clear.

Face validity

The translated version was administered to 37 students. In the qualitative assessment, participants were given copies of the questionnaire and asked to evaluate its suitability, clarity, relevance, and comprehensiveness; this stage assessed how accurately the students interpreted and understood the questions. In the quantitative assessment, an impact score was calculated for each item using the formula: Impact score = Frequency (%) × Importance. Items with an impact score above 1.5 were considered suitable. Combining the qualitative and quantitative approaches allowed a comprehensive evaluation of face validity.
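The impact score is commonly computed as the proportion of respondents who rate an item 4 or 5 on a 5-point importance scale (frequency), multiplied by the item's mean importance rating (importance). The text does not state the rating scale, so the 5-point scale and the 4-or-5 cut-off below are assumptions, and the ratings are hypothetical. Frequency is expressed here as a proportion, which is what makes the 1.5 threshold workable.

```python
from statistics import mean

def impact_score(ratings: list[int], high_cutoff: int = 4) -> float:
    """Impact score = frequency x importance (assumes a 5-point importance scale).

    frequency  : proportion (0-1) of respondents rating the item >= high_cutoff
    importance : mean importance rating across all respondents
    """
    frequency = sum(r >= high_cutoff for r in ratings) / len(ratings)
    importance = mean(ratings)
    return frequency * importance

# Hypothetical importance ratings from 10 students for a single item
item_ratings = [5, 4, 4, 5, 3, 4, 5, 2, 4, 5]
score = impact_score(item_ratings)
print(round(score, 2), score > 1.5)  # 3.28 True
```

In this example, 8 of 10 students rate the item 4 or 5 (frequency 0.8) and the mean rating is 4.1, so the item clears the 1.5 threshold comfortably.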

Content validity

Qualitative content validity was assessed by a panel of seven experts in health education and promotion, medical education, e-learning, health information technology, nursing, and psychometrics. These experts provided feedback on the wording, the appropriateness of terms, the importance of the questions, and the placement of the items within their proper context. For quantitative content validity, the Content Validity Ratio (CVR) was calculated to determine the necessity of each item, using a 3-point rating scale: 1 = "not essential," 2 = "useful but not essential," and 3 = "essential" [19, 20]. With a panel of seven experts, the minimum acceptable CVR was 0.99, based on Lawshe's model [21].
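Lawshe's ratio is CVR = (n_e − N/2) / (N/2), where n_e is the number of experts rating an item "essential" and N is the panel size. The sketch below applies it to the 3-point scale described above; the function name and the rating vectors are hypothetical.

```python
def content_validity_ratio(ratings: list[int], essential: int = 3) -> float:
    """Lawshe's CVR = (n_e - N/2) / (N/2), with n_e = number of 'essential' ratings."""
    n = len(ratings)
    n_essential = sum(r == essential for r in ratings)
    return (n_essential - n / 2) / (n / 2)

# Seven experts; 1 = not essential, 2 = useful but not essential, 3 = essential
all_essential = [3, 3, 3, 3, 3, 3, 3]
one_dissent = [3, 3, 3, 3, 3, 3, 2]
for ratings in (all_essential, one_dissent):
    cvr = content_validity_ratio(ratings)
    print(round(cvr, 3), cvr >= 0.99)  # 1.0 True, then 0.714 False
```

With seven experts, the 0.99 cut-off effectively requires all seven to rate an item "essential": a single dissenting rating lowers the CVR to about 0.71.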

The item-level Content Validity Index (CVI) was evaluated using a 4-point rating scale: 1 = "not relevant," 2 = "somewhat relevant," 3 = "quite relevant," and 4 = "very relevant." A CVI of 0.79 or higher was considered satisfactory for each statement, and items with a CVI from 0.70 to below 0.79 were retained after minor revisions.
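The item-level CVI is usually computed as the proportion of experts who rate an item 3 or 4 on the 4-point relevance scale. A minimal sketch follows; the function names are hypothetical, and treating a CVI below 0.70 as "reject" is an assumption, since the text only defines the satisfactory and revision ranges.

```python
def item_cvi(ratings: list[int]) -> float:
    """Item-level CVI: proportion of experts rating 3 ('quite relevant') or 4 ('very relevant')."""
    return sum(r >= 3 for r in ratings) / len(ratings)

def cvi_decision(cvi: float) -> str:
    """Classify an item by the cut-offs in the text; '< 0.70 -> reject' is an assumption."""
    if cvi >= 0.79:
        return "retain"
    if cvi >= 0.70:
        return "revise"
    return "reject"

# Seven experts: vary how many rate the item as relevant (4) versus not (2)
for n_relevant in (7, 6, 5, 4):
    ratings = [4] * n_relevant + [2] * (7 - n_relevant)
    cvi = item_cvi(ratings)
    print(n_relevant, round(cvi, 3), cvi_decision(cvi))
```

With a seven-member panel, one dissenting expert still leaves the item satisfactory (6/7 ≈ 0.86), while two dissenters place it in the revision range (5/7 ≈ 0.71).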

Construct validity

The construct validity of the Blended Learning Questionnaire-Persian (BLQ-P) was evaluated using both exploratory and confirmatory factor analyses, which examine the relationships between observed variables and their underlying latent constructs. In the EFA, we aimed to uncover the factor structure by grouping related items according to the original version of the questionnaire [22]. The EFA used Maximum Likelihood estimation with Promax rotation. Items with a factor loading below 0.4 were removed, and those with a loading of 0.4 or above were retained [23, 24]. Sampling adequacy was evaluated with the Kaiser-Meyer-Olkin (KMO) measure, and Bartlett's test of sphericity (p < 0.05) was used to confirm that the data were suitable for factor analysis [25]. The CFA was conducted using the maximum likelihood method and standard goodness-of-fit indices to evaluate the factor structure. Model fit was assessed using the chi-square statistic (χ2), the relative chi-square (CMIN/DF), the Goodness of Fit Index (GFI), Incremental Fit Index (IFI), Tucker-Lewis Index (TLI), Normed Fit Index (NFI), Comparative Fit Index (CFI), and Root Mean Square Error of Approximation (RMSEA). Values above 0.90 for CFI, GFI, NFI, TLI, and IFI, an RMSEA of 0.08 or less, and a CMIN/DF below 3 indicate a good model fit [26].
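The fit criteria above can be checked mechanically. The sketch below uses the common point estimate RMSEA = √(max(χ² − df, 0) / (df · (N − 1))); software packages differ on whether N or N − 1 appears in the denominator, and N − 1 is assumed here. The function names and the example index values are hypothetical and are not the results of this study.

```python
from math import sqrt

def rmsea(chi_square: float, df: int, n: int) -> float:
    """Point estimate of RMSEA = sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))

def meets_fit_criteria(indices: dict[str, float], chi_square: float, df: int, n: int) -> dict[str, bool]:
    """Compare fit indices with the cut-offs stated in the text."""
    # Incremental/absolute indices must exceed 0.90
    checks = {name: indices[name] > 0.90 for name in ("CFI", "GFI", "NFI", "TLI", "IFI")}
    checks["RMSEA"] = rmsea(chi_square, df, n) <= 0.08
    checks["CMIN/DF"] = chi_square / df < 3
    return checks

# Hypothetical values for illustration only
example = {"CFI": 0.96, "GFI": 0.94, "NFI": 0.93, "TLI": 0.95, "IFI": 0.96}
result = meets_fit_criteria(example, chi_square=150.0, df=98, n=210)
print(result, all(result.values()))
```

Reporting each criterion separately, rather than a single pass/fail flag, makes it easy to see which index drives a poor fit.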

Reliability

The scale's reliability was assessed through internal consistency and Composite Reliability (CR), using Cronbach's alpha, McDonald's omega, and the Average Inter-Item Correlation (AIC). A Cronbach's alpha above 0.7 indicates good internal consistency [24]. A CR of 0.70 or higher indicates strong internal reliability and provides evidence of convergent validity [25]. According to Clark and Watson (1995), AICs between 0.15 and 0.50 are acceptable [27].
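Cronbach's alpha and the AIC can both be computed from raw item responses. The following is a minimal, dependency-free sketch; the function names and the six-respondent data are hypothetical, and it does not reproduce how SPSS handles missing data.

```python
from itertools import combinations
from statistics import mean, variance

def cronbach_alpha(items: list[list[float]]) -> float:
    """alpha = k/(k-1) * (1 - sum of item variances / variance of total scores).

    `items` holds one list of responses per item, with respondents in the same order.
    """
    k = len(items)
    totals = [sum(responses) for responses in zip(*items)]  # each respondent's total score
    sum_item_var = sum(variance(responses) for responses in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))

def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two items (assumes neither item is constant)."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)

def average_inter_item_correlation(items: list[list[float]]) -> float:
    """Mean Pearson correlation over all distinct item pairs."""
    return mean(pearson_r(a, b) for a, b in combinations(items, 2))

# Hypothetical 7-point responses of six students to a four-item subscale
subscale = [
    [6, 5, 7, 4, 6, 5],
    [5, 5, 6, 4, 7, 4],
    [6, 4, 7, 3, 6, 5],
    [7, 5, 6, 4, 6, 4],
]
alpha = cronbach_alpha(subscale)
aic = average_inter_item_correlation(subscale)
print(f"alpha = {alpha:.2f} (> 0.7?), AIC = {aic:.2f} (0.15-0.50?)")
```

Alpha rises with both the number of items and their inter-correlation, which is why the AIC is reported alongside it: a long scale can reach a high alpha even when individual items correlate only modestly.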

Convergent and discriminant validity

Convergent validity was assessed using the Average Variance Extracted (AVE) and CR: the AVE should exceed 0.5 and the CR should exceed 0.7. Discriminant validity was assessed using the Maximum Shared Variance (MSV) and Average Shared Variance (ASV); for each construct, the AVE should exceed the corresponding MSV and ASV values [28]. In addition, we applied the criterion introduced by Fornell and Larcker (1981), under which the square root of each construct's AVE should exceed its correlations with the other constructs, to assess discriminant validity.
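These criteria can be computed from standardized CFA loadings and inter-factor correlations. The sketch below assumes standardized loadings, so each item's error variance is 1 − λ². For a one-factor model with standardized loadings, McDonald's omega takes the same form as CR. The function names, the abbreviations (AG, SC, DC, IE), the loadings, and the correlations are hypothetical and are not the values in Table 2.

```python
from math import sqrt
from statistics import mean

def ave(loadings: list[float]) -> float:
    """Average Variance Extracted: mean of the squared standardized loadings."""
    return mean(l ** 2 for l in loadings)

def composite_reliability(loadings: list[float]) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances 1 - loading^2)."""
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error)

def validity_summary(loadings_by_factor: dict, correlations: dict) -> dict:
    """Convergent and discriminant checks per factor; `correlations` maps factor pairs to r."""
    summary = {}
    for factor, loadings in loadings_by_factor.items():
        a, cr = ave(loadings), composite_reliability(loadings)
        rs = [r for pair, r in correlations.items() if factor in pair]
        shared = [r ** 2 for r in rs]  # shared variance with each other factor
        msv, asv = max(shared), mean(shared)
        summary[factor] = {
            "AVE": a, "CR": cr, "MSV": msv, "ASV": asv,
            "convergent": a > 0.5 and cr > 0.7,
            "discriminant": a > msv and a > asv,
            # Fornell-Larcker: sqrt(AVE) must exceed every correlation with other factors
            "fornell_larcker": sqrt(a) > max(abs(r) for r in rs),
        }
    return summary

# Hypothetical data: AG/SC/DC/IE = the four BLQ-P subscales
loadings = {
    "AG": [0.78, 0.74, 0.71, 0.69],
    "SC": [0.72, 0.70, 0.75, 0.68],
    "DC": [0.74, 0.70, 0.76, 0.72, 0.71, 0.73],
    "IE": [0.81, 0.77],
}
corr = {("AG", "SC"): 0.42, ("AG", "DC"): 0.38, ("AG", "IE"): 0.25,
        ("SC", "DC"): 0.45, ("SC", "IE"): 0.30, ("DC", "IE"): 0.28}
for name, row in validity_summary(loadings, corr).items():
    print(name, round(row["AVE"], 2), round(row["CR"], 2),
          row["convergent"], row["discriminant"], row["fornell_larcker"])
```

Because the ASV is an average of the same squared correlations whose maximum is the MSV, a factor that passes the AVE > MSV check also passes AVE > ASV; both are kept here to mirror the criteria stated in the text.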

Multivariate normality and outliers

The data were screened for missing values, outliers, and univariate and multivariate normality. Univariate normality was assessed using skewness (within ± 3) and kurtosis (within ± 7). The Mardia coefficient of multivariate kurtosis (< 8) was used to evaluate multivariate normality, and the Mahalanobis D-squared statistic (p < 0.001) was used to detect multivariate outliers [25]. Missing data were handled by imputation: two missing values were replaced with the mean response of the participants. The analyses were performed using SPSS version 21 and Amos version 18.
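The univariate screening and mean imputation steps can be sketched as follows. This assumes the kurtosis limit refers to excess kurtosis and uses simple moment-based estimators (g1, g2); SPSS reports bias-adjusted versions, so its values will differ slightly. The function names and data are hypothetical, and the Mardia and Mahalanobis checks are not covered.

```python
from statistics import mean
from typing import Optional

def impute_mean(responses: list[Optional[float]]) -> list[float]:
    """Replace missing responses (None) with the mean of the observed responses."""
    observed = [r for r in responses if r is not None]
    item_mean = mean(observed)
    return [item_mean if r is None else r for r in responses]

def skewness_kurtosis(values: list[float]) -> tuple[float, float]:
    """Moment-based sample skewness (g1) and excess kurtosis (g2)."""
    n = len(values)
    m = mean(values)
    m2 = sum((v - m) ** 2 for v in values) / n
    m3 = sum((v - m) ** 3 for v in values) / n
    m4 = sum((v - m) ** 4 for v in values) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3

def within_normality_limits(values: list[float], skew_limit: float = 3.0,
                            kurt_limit: float = 7.0) -> bool:
    """True when |skewness| <= 3 and |excess kurtosis| <= 7 (cut-offs from the text)."""
    g1, g2 = skewness_kurtosis(values)
    return abs(g1) <= skew_limit and abs(g2) <= kurt_limit

# Hypothetical responses to one item, with one missing value
item = [5, 6, None, 4, 7, 5, 6, 3]
complete = impute_mean(item)
print(complete, skewness_kurtosis(complete), within_normality_limits(complete))
```

Mean imputation is adequate for a handful of missing values, as here, but it shrinks item variances, so it is less suitable when missingness is substantial.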

Results

A total of 330 students participated in this study: 132 (40%) male and 198 (60%) female. The mean (± SD) age of the participants was 24 ± 2.26 years. The EFA subsample included 120 participants with a mean age of 23 ± 2.01 years, and the CFA subsample included 210 participants with a mean age of 24 ± 1.90 years.

All items had an impact score exceeding 1.5, and all CVR and CVI values were at least 0.99 and 0.79, respectively, indicating acceptable face and content validity. The Kaiser-Meyer-Olkin (KMO) measure confirmed the adequacy of the sampling (KMO = 0.74), and Bartlett's test of sphericity was significant (χ² = 731.1, p = 0.001), supporting the extraction of factors in the EFA. Three items with factor loadings below 0.4 were excluded from the analysis. Based on eigenvalues, the BLQ-P yielded four components (with restrictions). The factor loadings, eigenvalues, and percentage of variance explained by the four factors are presented in Table 1; together, these factors accounted for 52.43% of the variance in the BLQ-P for this sample.

A CFA was conducted on the 16 items of the Persian BLQ to assess the fit of the model obtained from the EFA; Figure 1 presents the path diagram of the resulting Structural Equation Model (SEM). The first step in testing an SEM is to verify whether the sample data fit the measurement model. The chi-square goodness-of-fit test was significant (χ2 = 107, df = 25, p = 0.01, i.e., below the 0.05 threshold). The relative chi-square (χ2/df) was 1.236, and the RMSEA of the model was 0.41. All comparative indices of the model, including CFI, GFI, IFI, TLI, and NFI, exceeded 0.90 (0.97, 0.97, 0.96, 0.95, and 0.96, respectively), and all goodness-of-fit indices confirmed the model fit.

Adequate convergent validity is indicated when AVE values exceed 0.5 and CR values exceed 0.7; the factors met both criteria, indicating strong convergent validity. Discriminant validity was also supported, since the AVE of each factor was greater than the corresponding ASV and MSV values. Furthermore, the correlation coefficients among the factors were smaller than the square root of the AVE, indicating acceptable discriminant validity (Table 2).

Furthermore, Table 2 reports Cronbach's alpha, McDonald's Omega, and CR. All values are greater than 0.70, indicating good reliability of the items within each construct.

Self-Regulated Learning (SRL) emerges as a crucial component of academic success. Defined as the process by which learners autonomously manage their educational activities, SRL includes critical skills such as goal setting, self-monitoring, and adaptive learning strategies [7]. These skills enable students to take control of their learning journeys, leading to improved outcomes across diverse educational settings [8]. The research highlights the significance of SRL, indicating that students who excel in self-regulation are more likely to overcome academic challenges and achieve higher performance levels [9].

This educational approach not only enhances accessibility but also fosters active participation and collaboration among students [5]. Self-regulation becomes even more vital in blended learning environments, where flexibility is key. As students often have to manage their learning, having strong self-regulation skills is crucial for successfully handling the challenges of blended education [10]. Empirical studies suggest that effective self-regulation in these contexts is associated with enhanced academic performance and greater learner satisfaction [8, 11, 12].

The Motivated Strategies for Learning Questionnaire is a validated tool that has been used to assess SRL since 1991, as established by Dent and Pintrich [13, 14]. It is frequently employed to explore the relationship between learners and educators concerning their learning techniques and strategies related to SRL. The Blended Learning Questionnaire (BLQ) is a new questionnaire, developed by Ballouk et al. [15], which evaluated medical students’ SRL in the BL environment. The primary aim of this study is to translate and validate a questionnaire for a blended learning environment, focusing on self-regulated learning among students of the University of Medical Sciences (nursing, medicine, and health sciences). The research objectives include assessing the validity and reliability of this new instrument and examining its applicability within the Iranian educational context. By addressing these aims, the study seeks to enhance understanding of how blended learning environments can be optimized to foster self-regulation and improve overall educational experiences for students.

Materials & Methods

Design and setting(s)

This study employed a cross-sectional methodological approach with students from Zahedan University of Medical Sciences. Data collection took place from May 1, 2023, to August 30, 2023.

Participants and sampling

According to Cattell's (1988) guidelines, the ideal sample for Exploratory Factor Analysis (EFA) is 3 to 10 respondents per item [16, 17]. The construct validity of the Persian version of the Blended Learning Questionnaire was assessed through both exploratory and confirmatory factor analyses. A total of 330 students were recruited through convenience sampling: 120 for the EFA and 210 for the Confirmatory Factor Analysis (CFA). The inclusion criterion was enrollment as a nursing, medicine, or health-related student at Zahedan University of Medical Sciences.
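The 3 to 10 respondents-per-item guideline reduces to simple arithmetic. The Python sketch below (helper names are hypothetical) checks the EFA subsample against it, using the 19 items of the original BLQ and the 120 EFA participants reported above.

```python
def cattell_range(n_items: int, low: int = 3, high: int = 10) -> tuple[int, int]:
    """Minimum and maximum sample size under a respondents-per-item rule."""
    return low * n_items, high * n_items


def respondents_per_item(n_participants: int, n_items: int) -> float:
    """Observed ratio of participants to questionnaire items."""
    if n_items <= 0:
        raise ValueError("n_items must be positive")
    return n_participants / n_items


if __name__ == "__main__":
    n_items = 19  # items in the original BLQ
    efa_n = 120   # participants allocated to the EFA
    low, high = cattell_range(n_items)
    print(f"Guideline range for {n_items} items: {low} to {high} respondents")
    print(f"EFA subsample: {respondents_per_item(efa_n, n_items):.2f} respondents per item")
```

For 19 items the guideline spans 57 to 190 respondents; the EFA subsample of 120 corresponds to about 6.3 respondents per item, inside that range.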

Tools/Instruments

The original version of the Blended Learning Questionnaire comprises 19 items across four subscales: Accessibility and Guidance (4 items), Social and Contextual (5 items), Delivery of Content (6 items), and Intrinsic and Extrinsic (4 items). Each item was assessed using a 7-point Likert scale, ranging from 1 (not true for me at all) to 7 (very true of me) [15].

Translation process

After obtaining permission from the developers of the original instrument, the English version of the BLQ was translated into Persian following Beaton's guidelines [18]. Two translators, one with a background in medical education and the other proficient in Persian and English, independently translated the questionnaire. The translators and the principal researcher then reviewed and reconciled the two translations, resolving any discrepancies to produce a single Persian version. Next, a bilingual (English-Persian) translator back-translated this version into English; this translator was not given the original English version and was instructed not to seek it out. An expert committee, comprising all the translators, the principal researcher, a health education and promotion specialist, and a biostatistician, then reviewed all inconsistencies and approved the final version of the questionnaire. A pilot study among 40 students (35% male, 65% female) tested the feasibility of the questionnaire, and participants found the translated items clear.

Face validity

The translated version was administered to 37 students, who were asked to evaluate the suitability, clarity, relevance, and comprehensiveness of the items. This qualitative stage assessed how accurately students interpreted and understood the questions. In the quantitative assessment, an impact score was calculated for each item using the formula: Impact score = frequency (%) × importance. Items with an impact score above 1.5 were considered suitable. Combining these qualitative and quantitative assessments provided a comprehensive evaluation of face validity.
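The impact-score rule can be sketched as follows. The text does not define frequency or importance; this sketch assumes a common convention in which importance is rated on a 5-point scale, frequency is the proportion of respondents rating the item 4 or 5 (expressed as a proportion rather than a percentage), and importance is the mean rating. The function names and example ratings are hypothetical.

```python
from statistics import mean


def impact_score(ratings: list[int], high_cutoff: int = 4) -> float:
    """Impact score = frequency x importance for one item.

    Assumed definitions (not given in the source text):
    frequency  = proportion of respondents rating the item >= high_cutoff
    importance = mean importance rating on a 1-5 scale
    """
    if not ratings:
        raise ValueError("ratings must not be empty")
    frequency = sum(r >= high_cutoff for r in ratings) / len(ratings)
    importance = mean(ratings)
    return frequency * importance


def is_suitable(ratings: list[int], threshold: float = 1.5) -> bool:
    """Apply the cut-off from the text: keep items whose impact score exceeds 1.5."""
    return impact_score(ratings) > threshold


if __name__ == "__main__":
    # Hypothetical importance ratings from ten students for one item.
    item_ratings = [5, 4, 4, 3, 5, 2, 4, 5, 4, 3]
    print(round(impact_score(item_ratings), 2), is_suitable(item_ratings))
```

Under these assumptions the example item scores 0.7 × 3.9 = 2.73 and passes the 1.5 cut-off.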

Content validity

Qualitative content validity was assessed by a panel of seven experts in health education and promotion, medical education, e-learning, health information technology, nursing, and psychometrics. The experts commented on the wording, the appropriateness of terms, the importance of the questions, and the placement of items within their proper context. For quantitative content validity, the Content Validity Ratio (CVR) was calculated to determine the necessity of each item, using a 3-point rating scale: 1 = "not essential," 2 = "useful but not essential," and 3 = "essential" [19, 20]. For a panel of seven experts, the minimum acceptable CVR according to Lawshe's model was 0.99 [21].
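Lawshe's CVR compares the number of experts rating an item "essential" (n_e) with half the panel size N: CVR = (n_e - N/2) / (N/2). A minimal Python sketch (function names are hypothetical) shows why a cut-off of 0.99 with seven experts effectively requires unanimity.

```python
def content_validity_ratio(n_essential: int, n_experts: int) -> float:
    """Lawshe's CVR = (n_e - N/2) / (N/2), ranging from -1 to +1."""
    if n_experts <= 0 or not 0 <= n_essential <= n_experts:
        raise ValueError("need n_experts > 0 and 0 <= n_essential <= n_experts")
    half = n_experts / 2
    return (n_essential - half) / half


def cvr_from_ratings(ratings: list[int]) -> float:
    """CVR from 3-point ratings, counting only 3 ('essential') as essential."""
    return content_validity_ratio(sum(r == 3 for r in ratings), len(ratings))


if __name__ == "__main__":
    # With seven experts, print the CVR for four to seven "essential" ratings.
    for n_e in range(4, 8):
        print(n_e, round(content_validity_ratio(n_e, 7), 3))
```

For N = 7 the loop prints 0.143, 0.429, 0.714, and 1.0, so only an item rated essential by all seven experts reaches 0.99.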

The Content Validity Index (CVI) was evaluated using a 4-point rating scale: 1 = "not relevant," 2 = "somewhat relevant," 3 = "quite relevant," and 4 = "very relevant." Items with a CVI above 0.79 were considered satisfactory, and items with a CVI of 0.70 were retained after minor revisions.
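The item-level CVI is usually computed as the proportion of experts rating an item 3 or 4. The sketch below assumes that definition, which the text does not spell out, and applies the 0.79 and 0.70 cut-offs; the "review" label for values below 0.70 is a hypothetical placeholder, since the text does not say how such items were handled.

```python
def item_cvi(ratings: list[int]) -> float:
    """I-CVI: share of experts rating the item 3 or 4 on the 4-point relevance scale."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    if any(r not in (1, 2, 3, 4) for r in ratings):
        raise ValueError("ratings must be on the 1-4 scale")
    return sum(r >= 3 for r in ratings) / len(ratings)


def cvi_decision(cvi: float, accept: float = 0.79, revise: float = 0.70) -> str:
    """Map an I-CVI to an action; cut-offs follow the text, labels are illustrative."""
    if cvi >= accept:
        return "retain"
    if cvi >= revise:
        return "revise"
    return "review"  # hypothetical: handling below 0.70 is not stated in the text


if __name__ == "__main__":
    # Hypothetical relevance ratings from a seven-member panel.
    for ratings in ([4, 4, 3, 4, 3, 4, 2], [4, 3, 2, 4, 3, 2, 4]):
        cvi = item_cvi(ratings)
        print(round(cvi, 3), cvi_decision(cvi))
```

With seven experts, six relevant ratings give 0.857 (retain) and five give 0.714 (revise).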

Construct validity

Construct validity of the Blended Learning Questionnaire-Persian (BLQ-P) was evaluated using both EFA and CFA to investigate the relationships between the observed variables and their underlying latent constructs. The EFA aimed to uncover the underlying factor structure by grouping related items according to the original version of the questionnaire [22], using maximum likelihood estimation with Promax rotation. Items with factor loadings below 0.4 were removed, and those with loadings of 0.4 or higher were retained [23, 24]. Sampling adequacy was evaluated with the Kaiser-Meyer-Olkin (KMO) measure, and Bartlett's test of sphericity (p < 0.05) was used to confirm that the correlation matrix was suitable for factor analysis [25]. The CFA was conducted using maximum likelihood estimation to evaluate the factor structure, and model fit was assessed with the Goodness of Fit Index (GFI), chi-square (χ2), Incremental Fit Index (IFI), Tucker-Lewis Index (TLI), Normed Fit Index (NFI), Comparative Fit Index (CFI), and Root Mean Square Error of Approximation (RMSEA). Values above 0.90 for CFI, GFI, NFI, TLI, and IFI, an RMSEA of 0.08 or less, and a CMIN/DF (χ2/df) below 3 indicate good model fit [26].
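Several of the CFA indices named above can be recomputed from the model and baseline (independence model) chi-square values that Amos reports. The sketch below uses textbook formulas for CMIN/DF, RMSEA, CFI, TLI, NFI, and Bollen's IFI; it omits GFI, which requires the sample and model-implied covariance matrices. RMSEA here uses N - 1 in the denominator, and some programs use N instead, so small differences are possible. All numbers in the example are hypothetical.

```python
import math


def relative_chi_square(chi2: float, df: int) -> float:
    """CMIN/DF: model chi-square divided by its degrees of freedom."""
    return chi2 / df


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of RMSEA with N - 1 in the denominator."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Comparative Fit Index of the model (m) against the independence baseline (b)."""
    d_m = max(chi2_m - df_m, 0.0)
    d_b = max(chi2_b - df_b, d_m)
    return 1.0 if d_b == 0 else 1.0 - d_m / d_b


def tli(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Tucker-Lewis Index (non-normed fit index)."""
    ratio_b = chi2_b / df_b
    return (ratio_b - chi2_m / df_m) / (ratio_b - 1.0)


def nfi(chi2_m: float, chi2_b: float) -> float:
    """Normed Fit Index."""
    return (chi2_b - chi2_m) / chi2_b


def ifi(chi2_m: float, df_m: int, chi2_b: float) -> float:
    """Bollen's Incremental Fit Index."""
    return (chi2_b - chi2_m) / (chi2_b - df_m)


def meets_thresholds(chi2_m: float, df_m: int, chi2_b: float, df_b: int, n: int) -> dict[str, bool]:
    """Apply the cut-offs stated in the text to each computable index."""
    return {
        "CMIN/DF < 3": relative_chi_square(chi2_m, df_m) < 3,
        "RMSEA <= 0.08": rmsea(chi2_m, df_m, n) <= 0.08,
        "CFI > 0.90": cfi(chi2_m, df_m, chi2_b, df_b) > 0.90,
        "TLI > 0.90": tli(chi2_m, df_m, chi2_b, df_b) > 0.90,
        "NFI > 0.90": nfi(chi2_m, chi2_b) > 0.90,
        "IFI > 0.90": ifi(chi2_m, df_m, chi2_b) > 0.90,
    }


if __name__ == "__main__":
    # Hypothetical values for a 16-item, four-factor model (baseline df = 16*15/2 = 120).
    for name, ok in meets_thresholds(120.0, 98, 1500.0, 120, 210).items():
        print(f"{name}: {ok}")
```

Because the chi-square test grows with sample size, the relative and comparative indices are normally read alongside it rather than in place of it.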

Reliability

The scale's reliability was assessed through internal consistency and Construct Reliability (CR), using Cronbach's alpha, McDonald's omega, and the Average Inter-Item Correlation (AIC). A Cronbach's alpha above 0.7 indicates good internal consistency [24]. A CR of 0.70 or higher indicates strong internal reliability and provides evidence of convergent validity [25]. According to Clark and Watson (1995), AIC values between 0.15 and 0.50 are acceptable [27].
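Cronbach's alpha and the average inter-item correlation (AIC) follow directly from the item scores of one subscale. This pure-Python sketch uses sample variances and Pearson correlations and assumes complete data; the function names and the small response matrix are hypothetical.

```python
from itertools import combinations
from statistics import mean, variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; `items` holds one score list per item (same respondents, same order)."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's subscale total
    sum_item_var = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equally long score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def average_inter_item_correlation(items: list[list[float]]) -> float:
    """Mean of all pairwise item correlations within one subscale."""
    return mean(pearson(a, b) for a, b in combinations(items, 2))


if __name__ == "__main__":
    # Hypothetical 7-point responses of six students to a three-item subscale.
    subscale = [
        [5, 6, 4, 7, 3, 6],
        [4, 6, 5, 7, 3, 5],
        [5, 7, 4, 6, 2, 6],
    ]
    print(f"alpha = {cronbach_alpha(subscale):.2f}")
    print(f"AIC   = {average_inter_item_correlation(subscale):.2f}")
```

An AIC within the 0.15 to 0.50 band cited above suggests items that hang together without being near-duplicates of one another.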

Convergent and discriminant validity

Convergent validity was assessed by examining the Average Variance Extracted (AVE) and CR; an AVE above 0.5 and a CR above 0.7 were required. Discriminant validity was assessed through the Maximum Shared Variance (MSV) and Average Shared Variance (ASV): each construct's AVE should exceed its corresponding MSV and ASV [28]. In addition, the Fornell and Larcker (1981) criterion was applied, which requires the square root of each factor's AVE to exceed that factor's correlations with the other factors [28].
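CR, AVE, MSV, ASV, and the Fornell-Larcker check can all be computed from a CFA's standardized loadings and inter-factor correlations. The sketch below assumes standardized loadings with uncorrelated item errors (error variance 1 - λ²); under that assumption the CR formula is also the usual single-factor form of McDonald's omega. The factor labels, loadings, and correlation are hypothetical.

```python
from math import sqrt
from statistics import mean


def composite_reliability(loadings: list[float]) -> float:
    """CR from standardized loadings; each item's error variance is taken as 1 - loading**2."""
    total = sum(loadings)
    error = sum(1 - lam ** 2 for lam in loadings)
    return total ** 2 / (total ** 2 + error)


def average_variance_extracted(loadings: list[float]) -> float:
    """AVE: mean squared standardized loading."""
    return mean(lam ** 2 for lam in loadings)


def validity_table(loadings: dict[str, list[float]],
                   correlations: dict[tuple[str, str], float]) -> dict[str, dict]:
    """CR, AVE, MSV, ASV and the Fornell-Larcker check for every factor."""
    table = {}
    for factor, lams in loadings.items():
        r_with_others = [r for pair, r in correlations.items() if factor in pair]
        shared = [r ** 2 for r in r_with_others]  # squared inter-factor correlations
        ave = average_variance_extracted(lams)
        table[factor] = {
            "CR": composite_reliability(lams),
            "AVE": ave,
            "MSV": max(shared),
            "ASV": mean(shared),
            # sqrt(AVE) must exceed every correlation with another factor
            "fornell_larcker": sqrt(ave) > max(abs(r) for r in r_with_others),
        }
    return table


if __name__ == "__main__":
    loadings = {"A": [0.80, 0.75, 0.70], "B": [0.70, 0.72, 0.68]}  # hypothetical
    correlations = {("A", "B"): 0.40}                              # hypothetical
    for factor, row in validity_table(loadings, correlations).items():
        print(factor, {k: round(v, 3) if isinstance(v, float) else v for k, v in row.items()})
```

Discriminant validity in this sense fails as soon as two factors correlate more strongly than either factor relates to its own items, which the second test case below illustrates.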

Multivariate normality and outliers

Univariate normality was assessed using skewness (acceptable within ± 3) and kurtosis (acceptable within ± 7), and the data were also screened for outliers and missing values. Multivariate normality was evaluated with Mardia's coefficient of multivariate kurtosis (acceptable below 8), and multivariate outliers were detected with the Mahalanobis D-squared statistic (p < 0.001) [25]. Missing data were handled by imputation; specifically, the two missing values were replaced with the participants' mean response. Analyses were performed in SPSS version 21 and Amos version 18.
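The screening steps can be sketched without external libraries. The code below assumes moment-based (population) skewness and excess kurtosis, which differ slightly from the bias-corrected values SPSS reports; Mahalanobis D² computed with the maximum-likelihood covariance matrix; and Mardia's coefficient expressed as b2,p - p(p + 2), which we assume is the form compared with the < 8 cut-off. For outlier screening, the caller supplies the chi-square critical value with df equal to the number of variables (about 39.25 for 16 variables at p = 0.001). All function names and data are hypothetical.

```python
from statistics import mean


def skewness_kurtosis(x: list[float]) -> tuple[float, float]:
    """Moment-based skewness and excess kurtosis (population formulas)."""
    n, m = len(x), mean(x)
    m2 = sum((v - m) ** 2 for v in x) / n
    m3 = sum((v - m) ** 3 for v in x) / n
    m4 = sum((v - m) ** 4 for v in x) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3


def covariance_matrix(data: list[list[float]]):
    """Means and maximum-likelihood (divide-by-n) covariance; rows are respondents."""
    n, p = len(data), len(data[0])
    means = [mean(row[j] for row in data) for j in range(p)]
    cov = [[0.0] * p for _ in range(p)]
    for row in data:
        d = [row[j] - means[j] for j in range(p)]
        for i in range(p):
            for j in range(p):
                cov[i][j] += d[i] * d[j] / n
    return means, cov


def invert(matrix: list[list[float]]) -> list[list[float]]:
    """Gauss-Jordan inverse with partial pivoting."""
    p = len(matrix)
    a = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(p)]
         for i, row in enumerate(matrix)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        a[col], a[pivot] = a[pivot], a[col]
        scale = a[col][col]
        a[col] = [v / scale for v in a[col]]
        for r in range(p):
            if r != col:
                factor = a[r][col]
                a[r] = [v - factor * w for v, w in zip(a[r], a[col])]
    return [row[p:] for row in a]


def mahalanobis_d2(data: list[list[float]]) -> list[float]:
    """Squared Mahalanobis distance of each respondent from the centroid."""
    means, cov = covariance_matrix(data)
    inv, p = invert(cov), len(means)
    out = []
    for row in data:
        d = [row[j] - means[j] for j in range(p)]
        out.append(sum(d[i] * inv[i][j] * d[j] for i in range(p) for j in range(p)))
    return out


def mardia_excess_kurtosis(data: list[list[float]]) -> float:
    """Mardia's b2,p minus its expected value p(p + 2) under multivariate normality."""
    p = len(data[0])
    return mean(v * v for v in mahalanobis_d2(data)) - p * (p + 2)


def flag_outliers(data: list[list[float]], critical_value: float) -> list[int]:
    """Row indices whose D^2 exceeds the supplied chi-square critical value."""
    return [i for i, v in enumerate(mahalanobis_d2(data)) if v > critical_value]


if __name__ == "__main__":
    # Hypothetical scores of six respondents on two items.
    data = [[1, 2], [2, 1], [3, 5], [4, 3], [5, 6], [6, 4]]
    print("item 1 skewness, kurtosis:", skewness_kurtosis([row[0] for row in data]))
    print("D^2:", [round(v, 2) for v in mahalanobis_d2(data)])
    print("Mardia (excess):", round(mardia_excess_kurtosis(data), 2))
    # 13.82 is the chi-square critical value for df = 2 at p = 0.001
    print("outliers:", flag_outliers(data, critical_value=13.82))
```

A useful sanity check is that, with the maximum-likelihood covariance, the D² values always sum to n × p.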

Results

A total of 330 students participated in this study: 132 (40%) were male and 198 (60%) were female. The mean age of participants was 24 ± 2.26 years. The EFA subsample included 120 participants (mean age 23 ± 2.01 years), and the CFA subsample included 210 participants (mean age 24 ± 1.90 years).

All items had impact scores above 1.5, CVR values above 0.99, and CVI values above 0.79, indicating acceptable face and content validity. The Kaiser-Meyer-Olkin (KMO) measure confirmed sampling adequacy (KMO = 0.74), and Bartlett's test of sphericity was significant (χ² = 731.1, p = 0.001); four factors were extracted in the EFA. Three items with factor loadings below 0.4 were excluded from the analysis. Based on eigenvalues, the BLQ-P yielded four components (with restrictions). Table 1 presents the factor loadings, eigenvalues, and percentage of variance explained by the four factors, which together accounted for 52.43% of the variance in the BLQ-P for this sample.

The CFA was conducted on the 16 items of the BLQ-P to assess the fit of the model derived from the EFA; Figure 1 presents the path diagram of the resulting Structural Equation Model (SEM). The first step in testing the SEM is to verify whether the sample data fit the measurement model. The chi-square goodness-of-fit test was significant (χ2 = 107, df = 25, p = 0.01, below the 0.05 threshold). Because this test is sensitive to sample size, the other indices were also examined: the relative chi-square (χ2/df) was 1.236, and the RMSEA for the model was 0.41. All comparative indices, including CFI, GFI, IFI, TLI, and NFI, exceeded 0.90 (0.97, 0.97, 0.96, 0.95, and 0.96, respectively), and all goodness-of-fit indices confirmed the model fit.

Adequate convergent validity requires AVE values above 0.5 and CR values above 0.7; all factors met these criteria, indicating strong convergent validity. Discriminant validity was also supported, because the AVE of each factor exceeded its corresponding ASV and MSV. Furthermore, the correlation coefficients among factors were lower than the square root of the AVE, indicating acceptable discriminant validity (Table 2).

Furthermore, Table 2 reports Cronbach's alpha, McDonald's Omega, and CR. All values are greater than 0.70, indicating good reliability of the items within each construct.

Table 1. Exploratory factor analysis results of the Blended Learning Questionnaire-Persian (BLQ-P)

Note: Exploratory factor analysis with principal component analysis and varimax rotation was performed on 120 participants to examine the factor structure of the BLQ-P.

Abbreviations: n, number of participants; BLQ-P, blended learning questionnaire-Persian; Q, questionnaire item.

Figure 1. Confirmatory factor analysis path diagram of the four-factor Blended Learning Questionnaire-Persian (BLQ-P) model

Table 2. Convergent validity, discriminant validity, and reliability of the Blended Learning Questionnaire-Persian (BLQ-P)

Note: Convergent validity was assessed using Average Variance Extracted (AVE > 0.50) and Composite Reliability (CR > 0.70). Discriminant validity was evaluated using the Fornell-Larcker criterion where the square root of AVE (diagonal values) should exceed inter-factor correlations.

Abbreviations: MSV, maximum shared squared variance; CR, composite reliability; AVE, average variance extracted; ASV, average shared variance; α, Cronbach's alpha; RAG, resources: accessibility & guidance; LSC, learning: social and contextual; LDC, resources: delivery of content; MIE, motivation: intrinsic and extrinsic; BLQ-P, blended learning questionnaire-Persian.

Discussion

The objective of this research was to investigate the psychometric properties of the BLQ among Iranian students. The Persian adaptation of the BLQ comprises 16 items organized into four subscales: Accessibility and Guidance (4 items), Social and Contextual (4 items), Delivery of Content (6 items), and Intrinsic and Extrinsic (2 items). Compared with the original questionnaire, three items were removed: two from the motivation subscale and one from the Social and Contextual subscale. To the best of our knowledge, apart from the current study, the BLQ [15] has been validated only in a Greek version [29], which also showed good psychometric properties similar to those of the original. In the original study [15], content validity was evaluated by students who participated in focus group discussions, to ensure that the items in the identified domains reflected what had been discussed. The content validity of the Greek version was assessed by administering the questionnaire to 11 students who were not part of the main study sample, in order to identify potential difficulties and ambiguities for the target audience; the researchers found no ambiguity in the items. Similarly, in the current study, face validity was examined with participating students and content validity with an expert panel, and the students reported no difficulty understanding the items. In the original study, exploratory factor analysis showed that of the 19 questions, 10 related to resources, 5 to learning, and 4 to motivation; together, these factors explained 51.72% of the total variance. In the current study, four components accounted for 52.43% of the total variance, and one question from the Social and Contextual component and two from the Motivation component were removed at this stage of the analysis.
In the exploratory factor analysis of the Greek version, three of the 19 questions were removed at this stage. The percentage of variance explained in the Greek version was higher than in the current study: of its three factors, resources comprised seven items, learning three items, and motivation five items, together explaining 86.63% of the variance in blended learning. The researchers attributed the removal of one item and the three-factor structure to differences in cultural background and to the inherent properties of the questions themselves.

In the Greek version, the resource subscales were combined into a single factor, and the authors suggested that adding questions in these areas could improve Cronbach's alpha. Neither the original nor the Greek version was examined with confirmatory factor analysis. In the current study, the confirmatory factor analysis indices showed that the four-dimensional structure of the questionnaire fits the data well, indicating that the questionnaire can be used in future research in Iran.

In the original study, Spearman correlations were used to examine the relationship between the items of the new questionnaire and those of the Motivated Strategies for Learning Questionnaire [30]. Both questionnaires were categorized by motivational beliefs, cognitive and metacognitive strategies, and resource management strategies, and the correlations between items of the two questionnaires ranged from 0.3 to 0.5. Convergent validity was not assessed in the Greek version. In the current study, convergent validity was assessed using composite reliability and AVE: composite reliability exceeded 0.7 and AVE exceeded 0.5 for all constructs, indicating good convergence.

The Cronbach's alpha coefficients of this tool were acceptable, indicating that the items within each subscale consistently measure the same underlying dimension. In the original and Greek versions, respectively, alpha was 0.77 and 0.79 for the resource dimension (access and guidance), 0.72 and 0.76 for the learning dimension, and 0.55 and 0.71 for the motivation dimension. In the Persian version, reliability was 0.82 for resources (access and guidance), 0.74 for learning, 0.72 for delivery of content, and 0.70 for motivation, and the overall reliability of the tool was 0.786. All reliability indices for the subscales, including Cronbach's alpha, CR, and AIC, were acceptable.

The first factor in this questionnaire, resources for accessibility and guidance, was supported by the confirmatory factor analysis. Studies have indicated that students perceive online resources and educational materials as supporting their independent learning in a blended learning environment [31, 32]. Both technological and socio-psychological support are recommended to reduce the complexity of blended learning designs, and such support has been found to increase students' motivation and active participation in learning activities [33, 34]. The second factor examines the social and contextual aspects of student learning, including teacher-student and peer-to-peer interactions, as well as the learning environment and culture. These aspects present both opportunities and potential challenges for students' learning experiences and identity formation [35, 36].

Factor 3 focuses on the role of resources used in content delivery, including identifying learning needs and developing and testing resources that support academic performance.

Research has demonstrated that a well-designed blended learning framework, incorporating thoughtfully developed and/or collated resources, can significantly enhance student learning, often surpassing the outcomes of traditional face-to-face instruction [36]. The fourth factor centers on student motivation within the learning environment. A blended learning setting fosters self-regulation skills, which can enhance student participation, motivation, and initiative in learning [37, 38]. Additionally, using self-regulation strategies can increase students' likelihood of academic progression and success [39] and enhance their performance, learning, and satisfaction [40]. Today, the ability to self-regulate learning has emerged as a key educational goal [41]. This skill is essential for lifelong learning and has a significant impact on the practical and skill-based education of students, particularly those in medical fields.

Blended learning requires students to equip themselves with self-regulation skills and technological competencies to manage their learning at their own pace with less instructor facilitation. At the same time, educators should be competent in using and effectively integrating online resources and various teaching methods to design competency-based courses that increase student interaction and performance. The BLQ-P enables educators and researchers to identify specific challenges faced by Iranian students in adapting to blended learning formats, such as limited access to digital resources, variability in instructors' digital teaching skills, and students' readiness for self-directed learning.

Conclusion

The translated version can serve as a valuable tool in research and educational settings within the Persian context. The BLQ was initially developed for medical students. However, we applied it to nursing, medicine, and health sciences students, as other questionnaires, such as DREEM, were initially administered to medical students and later pilot-tested in other medical sciences professions, including nursing and health sciences. Applied in this broader population, the BLQ-P may provide a more accurate evaluation of how effectively the learning environment promotes self-regulated learning. By using the BLQ-P, stakeholders can systematically evaluate blended learning environments and create targeted interventions to improve student engagement, motivation, and academic outcomes within the Iranian context. Both the strengths and the limitations of the current study should be recognized. The present study conducted both exploratory and confirmatory factor analyses, whereas the original research did not include a confirmatory factor analysis. However, further research involving larger and more diverse samples is needed. Because the survey was conducted at only one university, we recommend that the questionnaire be used in cross-sectional and comparative studies at other universities to investigate the impact of the blended learning environment on students' performance in classroom and clinical settings. To enhance students' self-regulation skills, instructors should cultivate a student-centered learning environment that encourages students to actively seek appropriate educational materials and resources, thereby strengthening their skills in searching for online resources and materials.

Ethical considerations

Ethical approval for this study was obtained from the Ethics Committee of Zahedan University of Medical Sciences (Code: IR.ZAUMS.REC.1402.235).

The study adhered to all ethical guidelines, which included informing participants in detail about the research objectives, methods, and rationale, ensuring confidentiality of collected data, providing participants the option to withdraw from the study at any stage, and obtaining informed written consent.

Artificial intelligence utilization for article writing

None.

Acknowledgment

The authors would like to express their gratitude to the students who participated in the study.

Conflict of interest statement

There are no conflicts of interest.

Author contributions

ZAB and PHK designed the study and applied for ethics approval. MM and MB collected the data and entered it into the statistical software. ZAB analyzed the data and created the tables and figures. PHK and ZAB wrote the manuscript.

The second draft of the manuscript was organized by IH, who also refined the language and corrected any errors. All authors reviewed and confirmed the final version.

Supporting resources

Not applicable.

Data availability statement

The datasets generated and/or analyzed during this study are not publicly available due to ethical considerations regarding student data and the need to ensure anonymity. However, they can be obtained from the corresponding author upon a reasonable request.

Article Type: Original Research

Subject:

Medical Education

Received: 2024/10/26 | Accepted: 2025/07/14 | Published: 2025/10/1

References

1. Allen IE, Seaman J. Changing course: ten years of tracking online education in the United States [Internet]. Sloan Consortium; 2013. Available from: [cited 2025 Jun 10].

2. Van Houten‐Schat MA, Berkhout JJ, Van Dijk N, Endedijk MD, Jaarsma AD, Diemers AD. Self‐regulated learning in the clinical context: a systematic review. Med Educ. 2018;52(10):1008-15. [DOI:10.1111/medu.13615]

3. Porter WW, Graham CR, Spring KA, Welch KR. Blended learning in higher education: institutional adoption and implementation. Comput Educ. 2014;75:185-95. [DOI:10.1016/j.compedu.2014.02.011]

4. Graham CR. Emerging practice and research in blended learning. In: Moore JL, Dickson-Deane C, Galyen K, editors. Handbook of distance education. 3rd ed. New York: Routledge; 2013. p. 333-50.

5. Garrison DR, Vaughan ND. Blended learning in higher education: framework, principles, and guidelines. Hoboken: John Wiley & Sons; 2008. [DOI:10.1002/9781118269558]

6. Bernard RM, Abrami PC, Borokhovski E, Wade CA, Tamim RM, Surkes MA, et al. A meta-analysis of three types of interaction treatments in distance education. Rev Educ Res. 2009;79(3):1243-89. [DOI:10.3102/0034654309333844]

7. Zimmerman BJ. Becoming a self-regulated learner: an overview. Theory Pract. 2002;41(2):64-70. [DOI:10.1207/s15430421tip4102_2]

8. Schunk DH, Zimmerman BJ. Motivation and self-regulated learning: theory, research, and applications. New York: Routledge; 2012. [DOI:10.4324/9780203831076]

9. Pintrich PR. A conceptual framework for assessing motivation and self-regulated learning in college students. Educ Psychol Rev. 2004;16:385-407. [DOI:10.1007/s10648-004-0006-x]

10. Zhang D, Zhao JL, Zhou L, Nunamaker JF. Can e-learning replace classroom learning? Commun ACM. 2004;47(5):75-9. [DOI:10.1145/986213.986216]

11. Artino Jr AR, Stephens JM. Academic motivation and self-regulation: a comparative analysis of undergraduate and graduate students learning online. Internet High Educ. 2009;12(3-4):146-51. [DOI:10.1016/j.iheduc.2009.02.001]

12. Broadbent J, Poon WL. Self-regulated learning strategies & academic achievement in online higher education learning environments: a systematic review. Internet High Educ. 2015;27:1-13. [DOI:10.1016/j.iheduc.2015.04.007]

13. Dent AL, Koenka AC. The relation between self-regulated learning and academic achievement across childhood and adolescence: a meta-analysis. Educ Psychol Rev. 2016;28:425-74. [DOI:10.1007/s10648-015-9320-8]

14. Pintrich PR, Smith DA, Garcia T, McKeachie WJ. Reliability and predictive validity of the motivated strategies for learning questionnaire (MSLQ). Educ Psychol Meas. 1993;53(3):801-13. [DOI:10.1177/0013164493053003024]

15. Ballouk R, Mansour V, Dalziel B, Hegazi I. The development and validation of a questionnaire to explore medical students' learning in a blended learning environment. BMC Med Educ. 2022;22:1-9. [DOI:10.1186/s12909-021-03045-4]

16. Cattell R. The scientific use of factor analysis in behavioral and life sciences. New York: Springer Science & Business Media; 2012.

17. Nunnally JC. An overview of psychological measurement. In: Wetzler S, Katz MM, editors. Clinical diagnosis of mental disorders: a handbook. New York: Springer; 1978. p. 97-146. [DOI:10.1007/978-1-4684-2490-4_4]

18. Beaton DE, Bombardier C, Guillemin F, Ferraz MB. Guidelines for the process of cross-cultural adaptation of self-report measures. Spine. 2000;25(24):3186-91. [DOI:10.1097/00007632-200012150-00014]

19. Costello AB, Osborne J. Best practices in exploratory factor analysis: four recommendations for getting the most from your analysis. Pract Assess Res Eval. 2005;10(1).

20. Field A. Discovering statistics using SPSS: introducing statistical method. 3rd ed. Thousand Oaks: Sage Publications; 2009.

21. Winne PH. A perspective on state-of-the-art research on self-regulated learning. Instr Sci. 2005;33(5-6):559-65. [DOI:10.1007/s11251-005-1280-9]

22. Society of Multivariate Experimental Psychology. Multivariate behavioral research monographs [Internet]. Fort Worth: Society of Multivariate Experimental Psychology; 1967. Available from:[cited 2025 Jun 10].

23. Stevens JP. Exploratory and confirmatory factor analysis. In: Stevens JP, editor. Applied multivariate statistics for the social sciences. 5th ed. New York: Routledge; 2012. p. 337-406. [DOI:10.4324/9780203843130-15]

24. Tavakol M, Dennick R. Making sense of Cronbach's alpha. Int J Med Educ. 2011;2:53. [DOI:10.5116/ijme.4dfb.8dfd]

25. Vinzi VE, Chin WW, Henseler J, Wang H. Perspectives on partial least squares. In: Vinzi VE, Chin WW, Henseler J, Wang H, editors. Handbook of partial least squares: concepts, methods and applications. Berlin: Springer; 2009. p. 1-20. [DOI:10.1007/978-3-540-32827-8_1]

26. Borzu ZA, Karimy M, Leitão M, Pimenta F, Albergaria R, Khoshnazar Z, et al. Validation of the menopause representation questionnaire (MenoSentations-Q) among Iranian women and cross-cultural comparison with Portuguese women. BMC Womens Health. 2025;25(1):87. [DOI:10.1186/s12905-025-03606-5]

27. Clark LA, Watson D. Constructing validity: basic issues in objective scale development. In: Zumbo BD, Chan EK, editors. Validity and validation in social, behavioral, and health sciences. Cham: Springer; 2014. p. 187-203. [DOI:10.1037/14805-012]

28. Fornell C, Larcker DF. Evaluating structural equation models with unobservable variables and measurement error. J Mark Res. 1981;18(1):39-50. [DOI:10.1177/002224378101800104]

29. Nikolopoulou K, Zacharis G. Blended learning in a higher education context: exploring university students' learning behavior. Educ Sci. 2023;13(5):514. [DOI:10.3390/educsci13050514]

30. Duncan T, Pintrich P, Smith D, McKeachie W. Motivated strategies for learning questionnaire (MSLQ) manual. Ann Arbor: University of Michigan; 2015.

31. Van der Westhuizen CP, Maphalala MC, Bailey R, editors. Blended learning environments to foster self-directed learning. Cape Town: AOSIS; 2022.

32. Dahmash NB. I couldn't join the session': benefits and challenges of blended learning amid covid-19 from EFL students. Int J Engl Linguist. 2020;10(5):221-30. [DOI:10.5539/ijel.v10n5p221]

33. Derntl M, Motschnig-Pitrik R. The role of structure, patterns, and people in blended learning. Internet High Educ. 2005;8(2):111-30. [DOI:10.1016/j.iheduc.2005.03.002]

34. De Brito Lima F, Lautert SL, Gomes AS. Learner behaviors associated with uses of resources and learning pathways in blended learning scenarios. Comput Educ. 2022;191:104625. [DOI:10.1016/j.compedu.2022.104625]

35. Wong R. Basic psychological needs of students in blended learning. Interact Learn Environ. 2022;30(6):984-98. [DOI:10.1080/10494820.2019.1703010]

36. Chiu TK. Applying the self-determination theory (SDT) to explain student engagement in online learning during the covid-19 pandemic. J Res Technol Educ. 2022;54(sup1):S14-S30. [DOI:10.1080/15391523.2021.1891998]

37. Green RA, Whitburn LY, Zacharias A, Byrne G, Hughes DL. The relationship between student engagement with online content and achievement in a blended learning anatomy course. Anat Sci Educ. 2018;11(5):471-7. [DOI:10.1002/ase.1761]

38. Foerst NM, Klug J, Jöstl G, Spiel C, Schober B. Knowledge vs. action: discrepancies in university students' knowledge about and self-reported use of self-regulated learning strategies. Front Psychol. 2017;8:1288. [DOI:10.3389/fpsyg.2017.01288]

39. Papinczak T. Are deep strategic learners better suited to PBL? A preliminary study. Adv Health Sci Educ. 2009;14:337-53. [DOI:10.1007/s10459-008-9115-5]

40. Hosseini Ravesh R, Rezaiee R, Mosalanejad L. Validation of the Persian version of the short self-regulated learning questionnaire for medical students: a descriptive study. J Med Educ Dev. 2022;15(47):1-10. [DOI:10.52547/edcj.15.47.1]

41. Luo Y, Lin J, Yang Y. Students' motivation and continued intention with online self-regulated learning: a self-determination theory perspective. Z Erziehwiss. 2021;24(6):1379-99. [DOI:10.1007/s11618-021-01042-3]

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.