Volume 18, Issue 2 (2025)

J Med Edu Dev 2025, 18(2): 144-152

Ethics code: The study follows the code of ethics based on Helsinki Declaration. The study is passed through the

Ismail F, Habib S, Singh Y, Ahmed I, Moin S. Students' insights on the objective structured practical examination as an assessment tool for undergraduates. J Med Edu Dev 2025; 18 (2) :144-152

URL: http://edujournal.zums.ac.ir/article-1-2287-en.html

1- Department of Biochemistry, J.N. Medical College, Faculty of Medicine, Aligarh Muslim University, Aligarh, India. , faizaismail22@gmail.com

2- Department of Biochemistry, J.N. Medical College, Faculty of Medicine, Aligarh Muslim University, Aligarh, India.

Abstract

Background & Objective: The Objective Structured Practical Examination (OSPE) is mandatory for undergraduate and postgraduate medical examinations. This is an important component of formative and summative examinations proposed by the National Medical Council, India. OSPE helps evaluate the practical skills of the students in real-time settings. This approach is an innovative method for evaluating and delivering medical education. Moreover, it helps to bring objectivity to the evaluation process. Most studies identify the OSPE as a tailored technique for assessing performance in a realistic educational setting. However, understanding students' perspectives is now a critical need. OSPE relies on a predetermined checklist, which effectively reduces examiner bias. To fully utilize its potential, it is necessary to bridge the current knowledge gap regarding students' perspectives toward the conduct of OSPE. The study aims to gain insights into students' viewpoints, which could help refine and enhance OSPE, making it a more effective assessment tool.

Materials & Methods: A cross-sectional study was conducted to identify the characteristics of the respondents. A structured restricted Google questionnaire was given to collect feedback from MBBS phase I students in the 2023 batch at Jawaharlal Nehru Medical College, Aligarh Muslim University, Aligarh, Uttar Pradesh, on various components of the OSPE. Out of 150 students, 132 provided responses. Data analysis was performed using Microsoft Excel Professional Plus 2016 and R Version 4.3.3. The data obtained was analyzed using descriptive statistics, and the frequency distribution was presented in pie charts and percentages. A chi-square test was done, and data was considered significant at p-value ≤ 0.001.

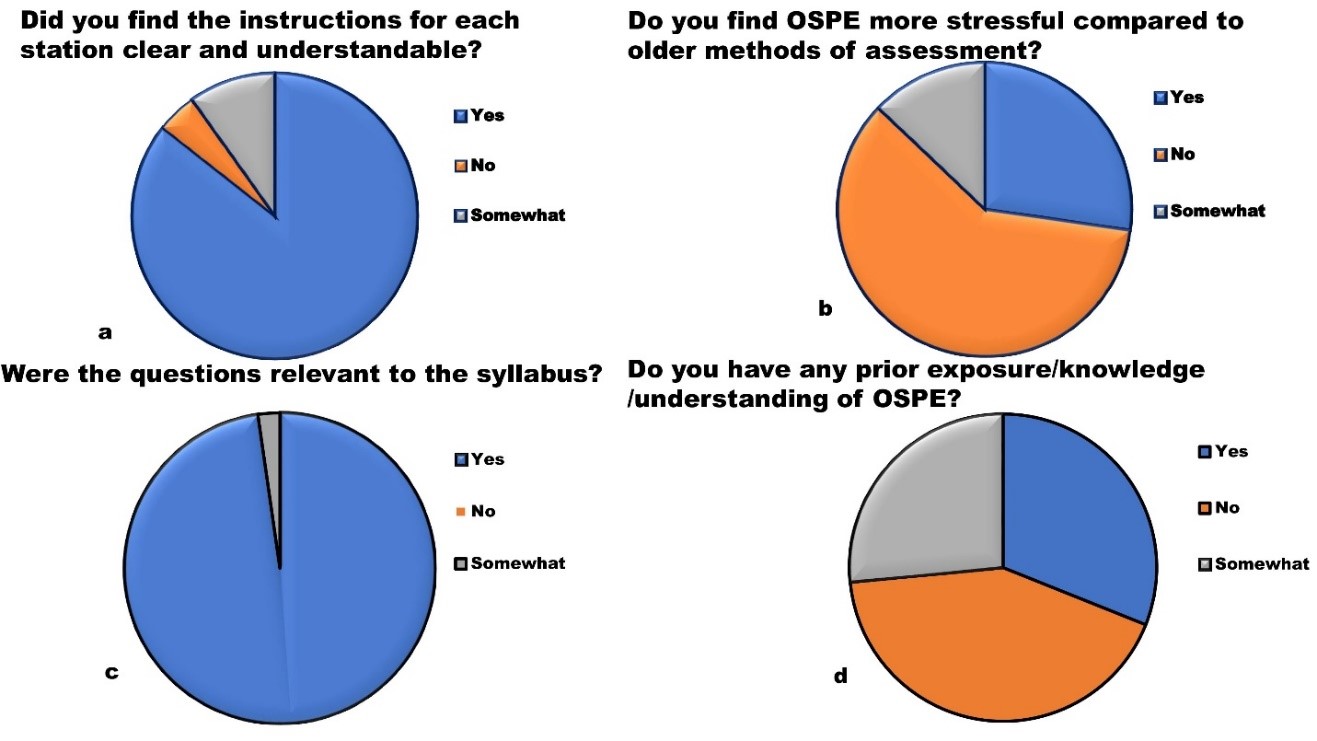

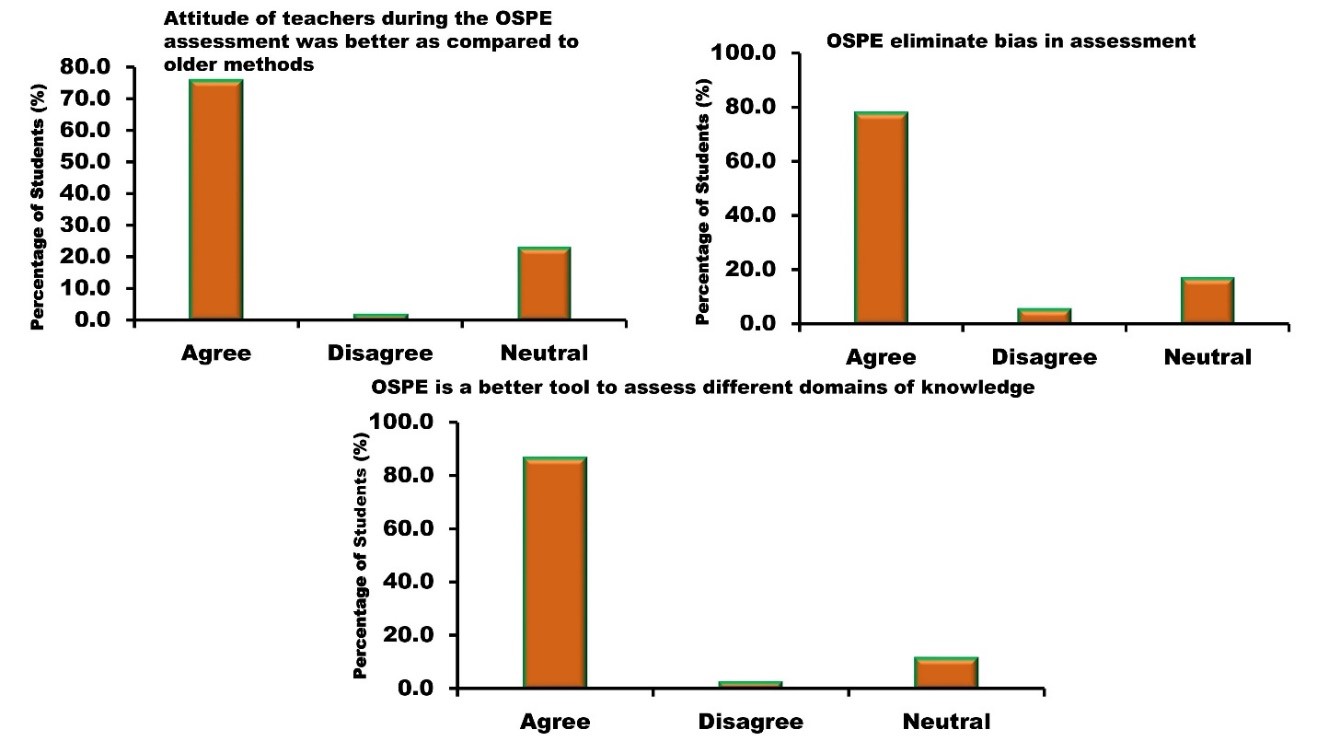

Results: Out of the 132 students, approximately 31.1% were aware of OSPE beforehand. More than 80% reported that the instructions provided before the OSPE were clear and easy to understand. Around 96% felt that the time allocated for each station was sufficient. Additionally, 76% noted that the instructors' attitudes were more positive during the OSPE than traditional exams. Furthermore, 77.7% believed introducing OSPE would eliminate bias in assessment, and 89.4% felt that OSPE encouraged them to focus more on the practical examination.

Conclusion: Students found OSPE a straightforward, bias-free, and aptitude-based mode of examination. OSPE appears to be a fine tool for assessment. It enhances students' laboratory competence and provides objective scores for the evaluation, making it an extremely viable assessment method.

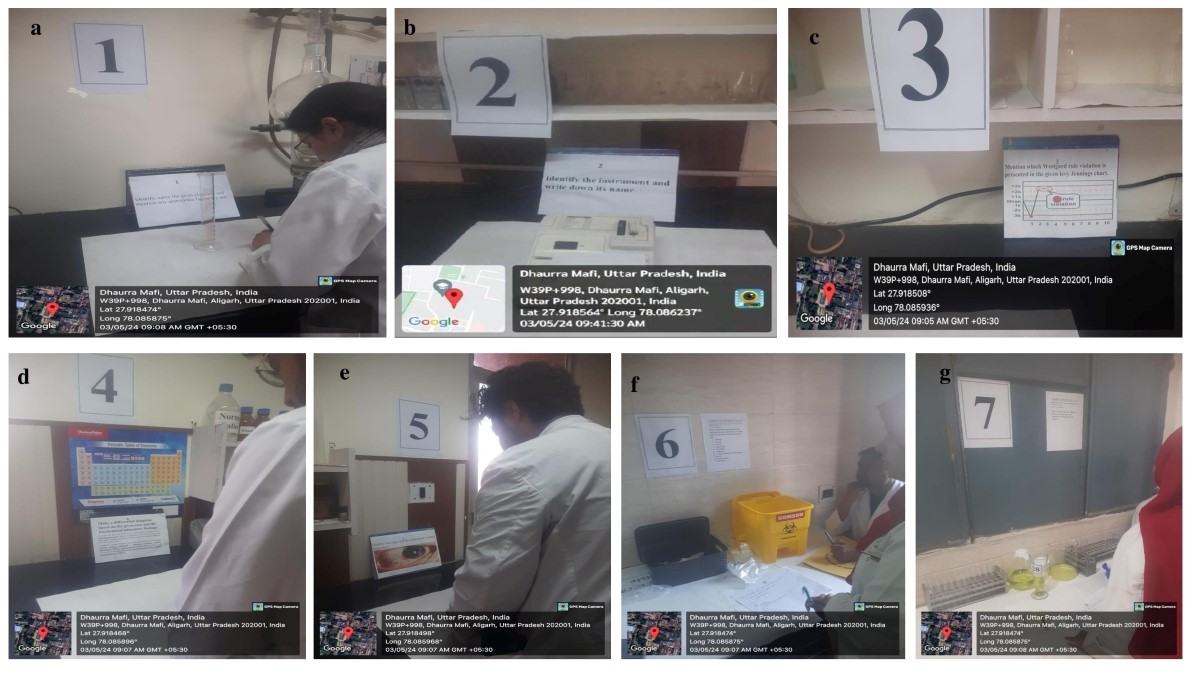

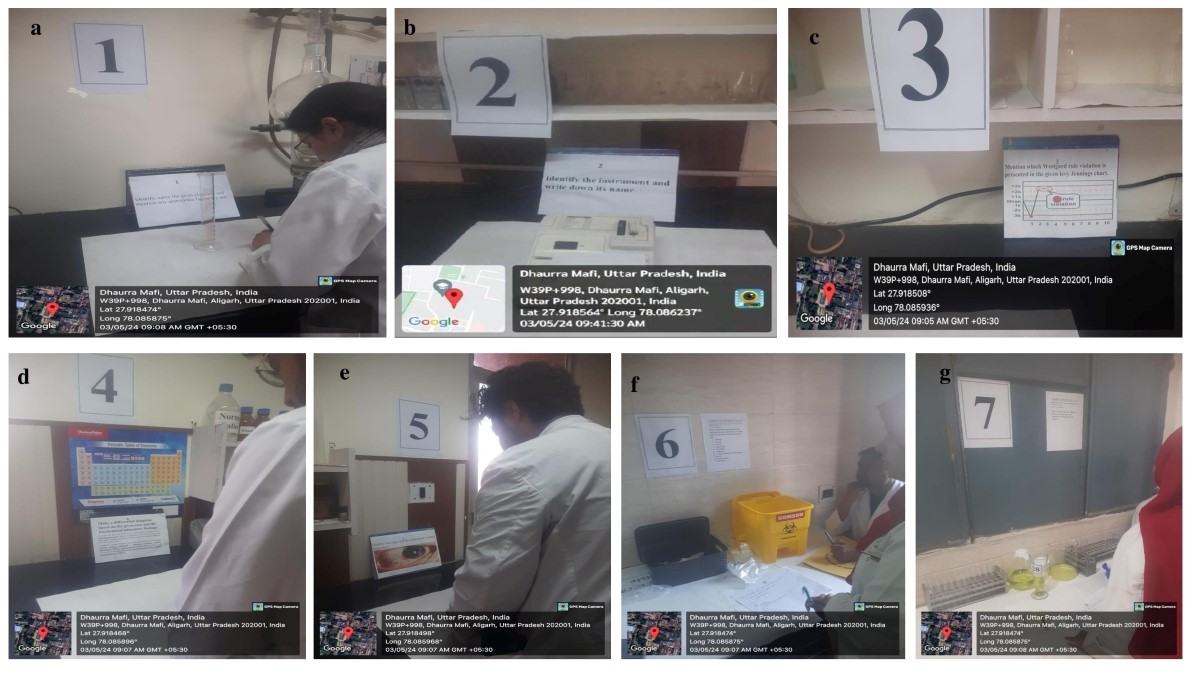

Figure 1. Set-up of the seven stations designed for OSPE

Students and examiners were oriented before the start of the OSPE to familiarize them with the OSPE performance and evaluation process. Several steps are involved in designing and setting up OSPE stations. Students were instructed to rotate clockwise to ensure they attended all stations. Strict measures were implemented to prevent communication among students, ensuring no interaction between those who had completed the OSPE and those awaiting their turn. Each OSPE station included structured and objective questions, with faculty members observing and evaluating students at every station using a provided checklist. The assessment for procedure stations was based on this checklist, while structured questions and corresponding key answers were created for each station. The effectiveness was assessed through seven short assignments designed by the subject expert. Each assignment was framed according to Bloom's taxonomy. This ensured that the assessment covered higher cognitive skills for deeper learning and skills transfer to a wide spectrum of applied biochemistry [12]. The procedural stations assessed practical laboratory skills, such as the qualitative estimation of analytes (for example, Hay's sulfur test for bile salts). In contrast, the response stations focused on higher cognitive skills, evaluating the application of knowledge in clinical scenarios. These included tasks such as interpreting lab reports based on case scenarios or identifying and applying laboratory instruments, all aligned with the curriculum's learning objectives.

Participants and sampling

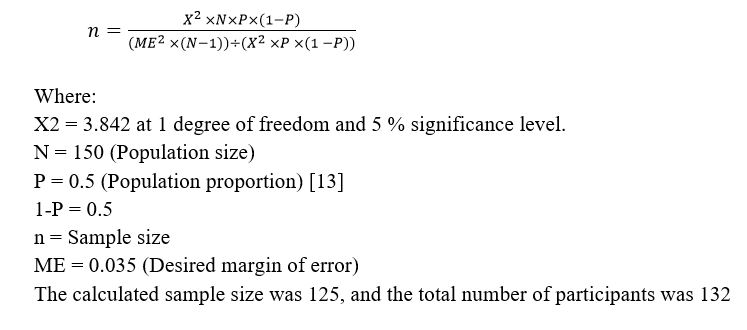

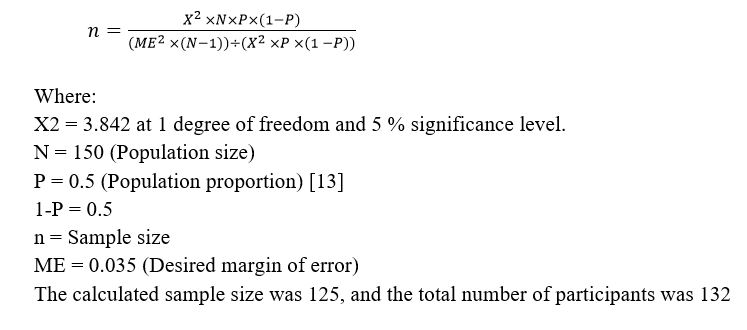

One hundred fifty students were assessed over three days, with a maximum of 50 students evaluated each day. Phase I MBBS students of JNMC, AMU, who were willing to participate from the admitted batch of 2023, were included in the study. Students from other faculties, different professional years, other courses, and those who opted not to participate were excluded. The sample size was calculated using a 95% confidence level, a 5% significance level, and a 3.5% margin of error, following the formula provided below [13].
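The sample size formula itself is not reproduced in the text, so the following is only an illustrative sketch of one common approach: Cochran's formula with a finite population correction. The choice of formula, the expected proportion `p = 0.5`, and the function name `cochran_sample_size` are assumptions made for illustration, not details confirmed by the study. The inputs taken from the text are the population of 150 students, the 95% confidence level (z of about 1.96), and the 3.5% margin of error.

```python
import math


def cochran_sample_size(population, z=1.96, p=0.5, margin=0.035):
    """Sample size via Cochran's formula with finite population correction.

    Assumption: this is a commonly used formula, not necessarily the one
    cited in the study. p = 0.5 is the conservative (maximum variance) choice.
    """
    # Estimate for an effectively infinite population
    n0 = (z ** 2) * p * (1 - p) / margin ** 2
    # Shrink the estimate for a small, finite population
    n = n0 / (1 + (n0 - 1) / population)
    # Round up, since a fractional respondent is not possible
    return math.ceil(n)


if __name__ == "__main__":
    print(cochran_sample_size(population=150))
```

Under these assumptions, a larger margin of error or a smaller population would lower the required number of respondents, which is why the finite population correction matters for a single batch of 150 students.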

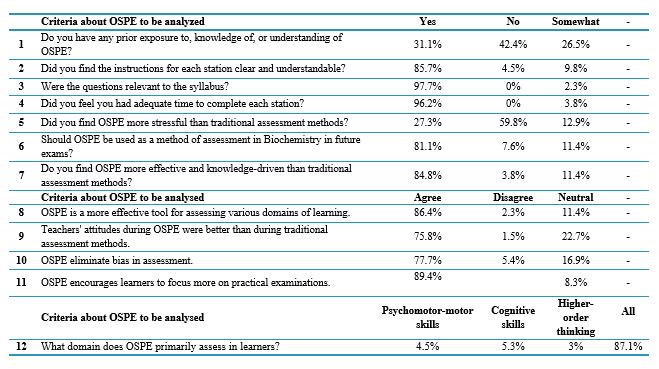

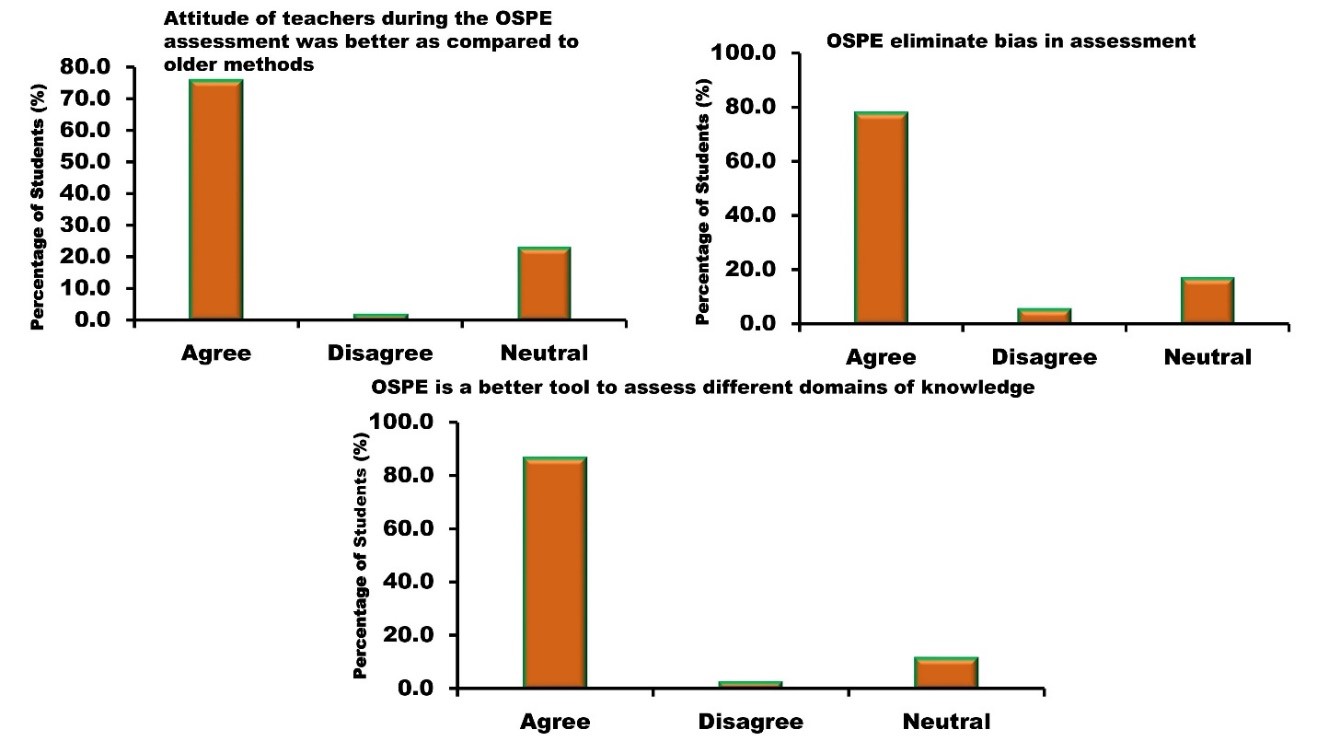

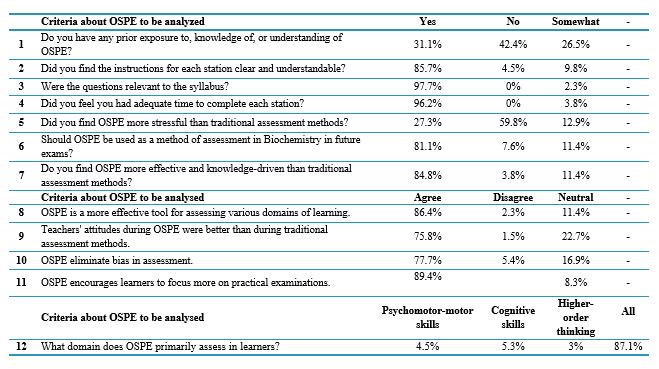

Table 1. Summary analysis of the administered questionnaire.

Abbreviations: OSPE, objective structured practical examination.

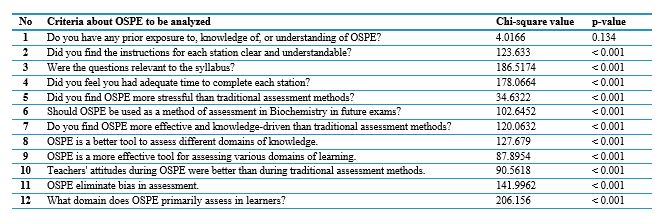

Table 2. Statistical analysis of the responses of the participants to the administered questionnaire.

Note: The table shows the Chi-square values, the corresponding p-values. Cronbach’s alpha for the questionnaire = 0.859.

Abbreviations: p, probability-value; OSPE, objective structured practical examination.

Of the 132 respondents (from the 150 students assessed in batches of up to 50 over three days), 31.1% had prior knowledge of OSPE. More than 80% reported that the instructions provided before starting the OSPE protocol were clear and easy to understand. Additionally, approximately 96% believed that the time allotted for each station was sufficient to complete their tasks. Over 80% of the students felt that OSPE was more effective than traditional assessment methods. Regarding the instructors' attitudes, about 76% considered them to be more positive than in traditional examinations. However, 27.3% found OSPE more stressful than the simpler spotting exercises they faced in Biochemistry practical examinations during their internal mock preparatory tests.

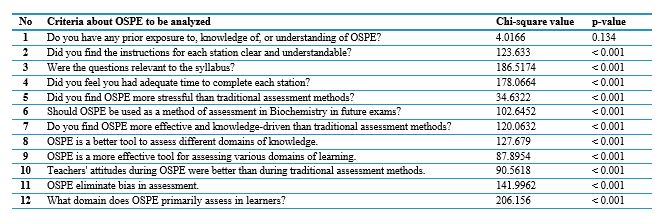

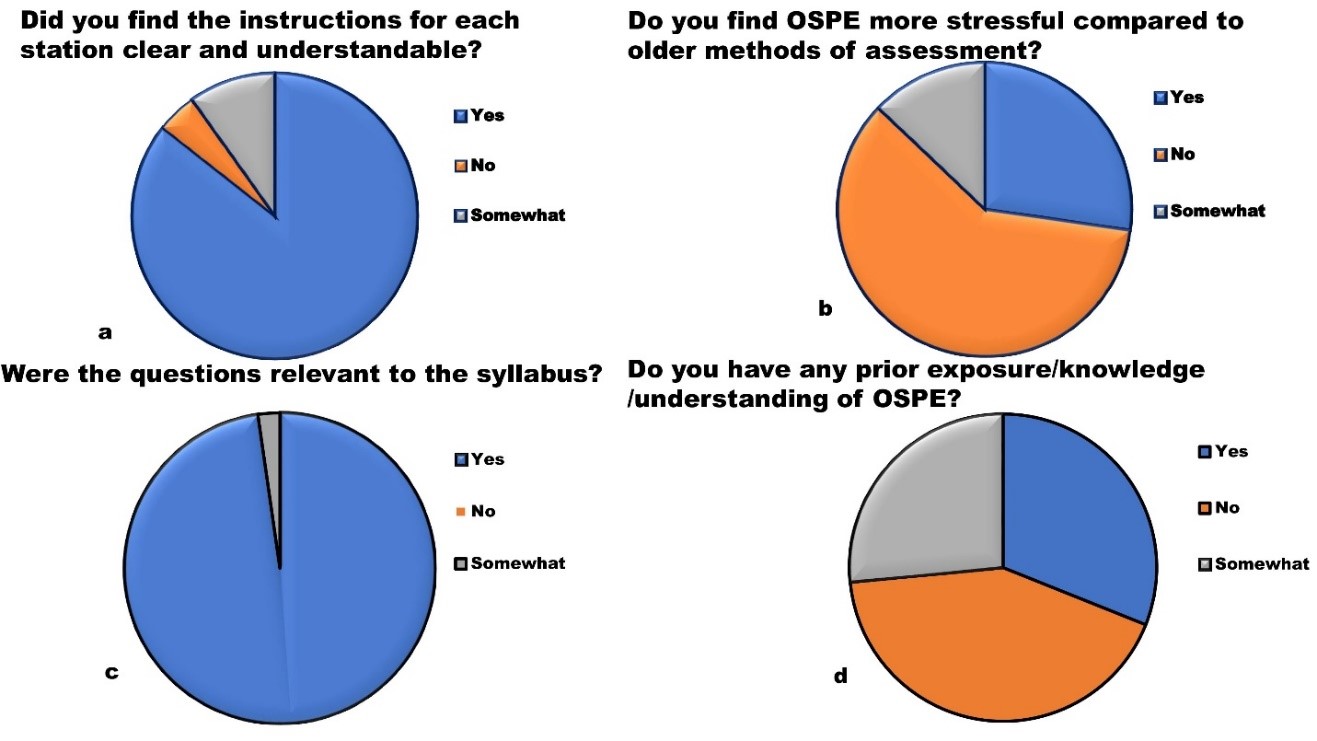

Factors contributing to this anxiety may include the lack of adequate mock exams before the actual assessment, insufficient prior knowledge of OSPE, continuous observation by examiners during the examination, and being evaluated against checklists. Nevertheless, most students expressed confidence that they would perform stress-free during their professional examinations. The analysis of the 27.3% of students who experienced stress will be included as an aspect of this study in future extensions [15]. Figures 2 and 3 show detailed analyses of the OSPE feedback conducted under different headings in pie charts and bar graphs.

Figure 2. Pie charts depicting students' perceptions of the overall conduct of OSPE

Figure 3. Bar graphs depicting the viewpoints of participants on OSPE as an assessment tool

Discussion

OSPE has gained worldwide recognition as an improved method for teaching, learning, and assessment [16]. The highlights of its benefits include a focus on practical skills, a structured and objective way of assessment, constructive feedback, alignment with the competencies, and encouragement of students for active learning [17]. Several studies further pointed out that OSPE provides objective, uniform, and focused assessment, thereby providing an unbiased evaluation to the students [18]. This agrees with the findings reported by Mard & Ghafouri, who mention that OSPE increases the satisfaction of medical students. About 86% of students believed that OSPE tests all the skill domains of the assessment [19]. Rajkumar et al. found that OSPE is a significantly better assessment tool for evaluating practical skills in all preclinical subjects [20]. Ramachandra et al., in their study, emphasized the acceptance of OSPE in the medical curriculum by both students and faculty [21]. A study conducted at King Saud University, Saudi Arabia, by Alsaif et al. observed that mock OSPE sessions conducted before the professional examinations reduced the stress of MBBS students and improved their performance [22].

Regarding bias elimination, 77.7% agreed that introducing OSPE is expected to reduce bias, while 16.9% remained neutral. In addition, 89.4% agreed that OSPE encourages the learner to pay more attention to practical examinations. These findings align with those of Prasad et al., who indicated that "the OSPE format was perceived more favourably by students and resulted in a higher average score" [15]. OSPE could also be used to examine some characteristics of postgraduate students, such as performance during procedures, recognition of molecular techniques and biomarkers, interpretation of results, and diagnostics.

Alongside these positive aspects, implementing OSPE presents significant challenges that must be acknowledged. The OSPE format is often criticized for the resources and time required to arrange and conduct it, especially the need for large manpower, preparation of pre-defined checklists, large spaces or halls, skill labs, simulators, and well-trained staff [23]. Another concern is examiner exhaustion by the end of the rotation, which may result in biased or unfair evaluation [24].

Elaborating on the findings of our study, criteria 1-7, which assessed students' perception of the overall conduct of OSPE, all showed significant differences in response patterns except the first, "Do you have any prior exposure/knowledge/understanding of OSPE?" The p-value for the first question is greater than 0.001, indicating no statistically significant difference in the distribution of responses. This indicates that students' responses ("Yes", "No", "Somewhat") to this question were evenly distributed or exhibited no clear pattern. The results support the effectiveness and acceptability of OSPE as an assessment tool, with positive feedback regarding its relevance, adequacy of time, clarity, and effectiveness. Further, the results for all four criteria from 8-11 showed highly significant differences in responses, emphasizing a strong positive view of OSPE as an assessment tool. Students recognized its effectiveness in assessing knowledge, eliminating bias, developing positive teacher interactions, and encouraging students to focus on practical skills. This again underlines OSPE's suitability and acceptability as a modern assessment method. An exceptionally small p-value was obtained for the last criterion, demonstrating a very strong and clear pattern in the data. It shows that the responses were not evenly distributed; instead, there was a significant preference for one option, namely the effectiveness of OSPE in assessing the diverse skills of the learner. This result further supports the robustness of the observations, highlighting consistent agreement among the participants.

The study evaluates OSPE from the students' perspective, but a few limitations need to be addressed. Firstly, no large, dedicated area was fixed and identified for each station; providing one would improve the way OSPE is conducted. Secondly, students from different institutions should be included. Future research should be conducted across multiple institutions, particularly in different areas with varying resources, to make the findings generalizable. Lastly, the sample size needs to be increased to improve the power of the study. A larger sample with diverse participants from varying educational and socio-economic backgrounds will give a broader perspective.

Conclusion

Students provided positive feedback, indicating that this evaluation enhances their learning. OSPE was perceived as an effective tool for both teaching and assessment. Therefore, it seems to be a student-friendly, effective, and less biased method for laboratory-based examinations. Overall, students' perceptions tend to favor OSPE. Future research should concentrate on ways to enhance the process of standardizing OSPE; this will pave the way for consistent evaluation criteria.

Ethical considerations

The study, titled "Objective structured practical examination: A better assessment tool to understand the magnitude of perception towards undergraduate biochemistry practical examination over traditional methods," was approved by the Office of the Institutional Ethics Committee, Jawaharlal Nehru Medical College, Aligarh, Uttar Pradesh, under Proposal Reference Number (IECJNMC/1655). Verbal consent was obtained voluntarily from each student who was willing to participate in this research.

Artificial intelligence utilization for article writing

Grammarly was utilized to refine the English language used in the document.

Acknowledgments

Infrastructure facilities provided by the Department of Biochemistry, Jawaharlal Nehru Medical College, under the DST (FIST & PURSE) program are gratefully acknowledged. The authors acknowledge Professor Najmul Islam, Chairperson, Department of Biochemistry, for his able guidance. We would like to thank all the students who participated in this study.

Conflict of interest statement

The authors declare that they have no discernible competing financial interests or personal connections that could be interpreted as influencing the conclusions made in this work.

Author contributions

Study Idea or Design, Data Collection, Data Analysis, and Interpretation: FI, SH, YS, IA and SM. Manuscript Preparation, Review, and Editing: FI, SH. Final Approval: FI, SH, YS, IA and SM.

Funding

This research did not receive a specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Data availability statement

Data will be made available upon reasonable request.

Introduction

Assessment is a crucial part of evaluating the knowledge and skill of a learner [1]. There have been continuous attempts in medical education to make assessments more objective and reliable. Conventional methods suffer from various shortcomings, such as examiner bias, lack of proper communication skills and attitude among students, failure to identify and acknowledge their shortcomings, and absence of a systematic and objective evaluation of all the competencies [2]. Practical examinations are vital in assessing non-clinical subjects, making them essential for cultivating and developing competent physicians [3]. Keeping this point in view, medical colleges in the United Kingdom, the United States of America, and Canada adopted the Objective Structured Practical Examination (OSPE) as a standard assessment method [4]. A study by Harden and his colleagues identified that OSPE has stood the test of time and has overcome the problems of conventional practical examinations [5]. One study reported that OSPE was well-accepted and received widespread appreciation from students who supported its implementation. The same study also cited the advantages that OSPE offers for formative (day-to-day) assessment of students to improve their clinical competence and to derive an objective score for internal assessment [6]. Howley reported that faculty feedback on OSPE identified it as a better tool for improving students' performance and teaching methodologies [7]. In 1975, Harden et al. highlighted the promising role of the Objective Structured Clinical Examination (OSCE) in assessing basic clinical skills and established its reliability [2]. Most studies have evaluated OSPE as an assessment tool for practical examinations and compared it with traditional methods. However, to our knowledge, few studies focus on students' perspectives regarding OSPE [8].

Examinations should be fair, comprehensive, and objective, as dictated by the discipline [9], and must assess students' knowledge, skills, and attitudes. Therefore, the assessment methods employed to evaluate students' performance in the Biochemistry laboratory should connect to the course objectives. In India, Competency-Based Medical Education (CBME) was adopted in August 2019, initially implemented for the Bachelor of Medicine and Bachelor of Surgery (MBBS) batch admitted in Phase I (the first year of 2019) (https://www.nmc.org.in/information-desk/for-colleges/ug-curriculum/#). OSPE stations can assess various skills, including laboratory procedures, identification, microscopic skills, and diagnostic and applied medical aspects [10]. This is an organized approach for the students to recognize and identify their deficiencies and refine and enrich their clinical abilities [11]. Since OSPE is a new method for assessing practical competencies, engaging with students and understanding their experiences and insights regarding the entire process is essential.

This approach will help create a fair, reliable, and effective assessment framework. By utilizing student feedback, medical institutions can improve how their assessment methods align with the competencies and enhance the student's learning journey.

In light of the findings and existing literature, the authors aimed to analyze OSPE as a method for assessing practical skills in biochemistry among first-year MBBS students at Jawaharlal Nehru Medical College (JNMC), AMU. The study also sought to evaluate the efficacy of OSPE as a pedagogical tool. Hence, the primary goal of this research was to gather and assess students' views on OSPE as an evaluation tool at the undergraduate level.

Material & Methods

Design and setting(s)

The study included MBBS phase I students admitted for the 2023 batch at Jawaharlal Nehru Medical College, Aligarh Muslim University, Aligarh, Uttar Pradesh. These students underwent training in both practical and theoretical topics, including all aspects outlined in the CBME curriculum. Further, before implementing this evaluation method, all faculty members involved in its design were thoroughly briefed and sensitized. Students were also given an orientation about the process in advance. They rotated through seven stations, which included three skill-based stations, two identification (knowledge-based) stations, and two diagnostic stations (assessing knowledge, skills, and attitudes). The time allotted to each station was 2 minutes (Figure 1). All seven stations were developed after an intensive focus group discussion among the faculty members involved in OSPE, keeping in mind the alignment of the questions, procedures, or interpretations at various stations with the competencies studied by the students to date. The whole OSPE carried 14 marks, two for each station. Therefore, it was decided by the Board of Studies of the department to form 7 stations for two marks each and assign a time of 2 minutes. This is according to the National Medical Council guidelines.

Tools/Instruments

Google questionnaire links were sent to 150 students immediately after their third internal assessment, well before their professional examinations. To assess students' perception of the overall conduct of OSPE, questions 1-7 were asked with three alternatives: Yes, No, or Somewhat. Further, to capture participants' specific stance on OSPE as an assessment tool, statements 8-11 were given, and students were instructed to give their opinion by marking one of three options: Agree, Disagree, or Neutral. Question 12 was a closed-ended multiple-choice question with four options on the overall effectiveness of OSPE in judging the different skills of a learner. The questionnaire was validated by involving subject experts to check the relevance and accuracy of the included questions and by conducting a pilot study on 30 students. The questionnaire's reliability coefficient was determined by calculating Cronbach's alpha [14].
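As a rough illustration of the reliability check mentioned above, the sketch below computes Cronbach's alpha from a respondents-by-items matrix using the standard variance-based formula. The response values and the numeric coding (for example 1 = Disagree, 2 = Neutral, 3 = Agree) are hypothetical and are not the study's data; the function name `cronbach_alpha` is likewise only an illustrative choice.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item (column) across respondents
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    # Sample variance of each respondent's summed score
    total_var = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical coded responses: 5 respondents x 3 items (not study data)
demo_responses = [
    [3, 3, 2],
    [2, 2, 2],
    [1, 2, 1],
    [3, 2, 3],
    [1, 1, 1],
]

if __name__ == "__main__":
    print(round(cronbach_alpha(demo_responses), 3))
```

Values closer to 1 indicate that the items vary together, which is the sense in which a coefficient is read as internal consistency.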

Data collection methods

A Google questionnaire was administered to collect feedback from students on various components of the OSPE. The questionnaire comprised close-ended questions covering cognitive, psychomotor, and higher-order thinking domains and students' overall perception of OSPE as an assessment tool. The OSPE was designed using NMC guidelines for the undergraduate curriculum (https://www.nmc.org.in/information-desk/for-colleges/ug-curriculum/#) after being adopted through the Board of Studies of the Department of Biochemistry, Jawaharlal Nehru Medical College, Aligarh, Uttar Pradesh, India. Participant anonymity was ensured to promote honest feedback. To further mitigate response bias, the language of the questions was kept neutral to avoid leading answers. A range of options such as Agree, Disagree, and Neutral was given to ensure a balanced response. Wherever feasible, a 'Neutral' or 'No' option was offered so that participants could express their true perspective. Of the 150 students, 132 provided responses.

Data analysis

Data management was conducted using Microsoft Excel Professional Plus 2016. Statistical calculations were performed using R Version 4.3.3. The chi-square test was applied, and p ≤ 0.001 was considered significant.
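The test described above can be sketched as follows. The text does not state the exact form of the chi-square test; this example assumes a goodness-of-fit test against equal expected counts, which matches the later discussion of whether responses were "evenly distributed". The sketch is in Python rather than R, the function names are illustrative, and the counts are hypothetical values chosen only to show the mechanics.

```python
import math


def chi_square_uniform(counts):
    """Chi-square goodness-of-fit statistic against equal expected counts."""
    total = sum(counts)
    expected = total / len(counts)  # same expected count for every option
    stat = sum((obs - expected) ** 2 / expected for obs in counts)
    return stat, len(counts) - 1  # statistic and degrees of freedom


def p_value_df2(stat):
    """Upper-tail probability for 2 degrees of freedom (closed form exp(-x/2))."""
    return math.exp(-stat / 2)


# Hypothetical counts for a three-option item (Yes / No / Somewhat) with n = 132.
# These are NOT the study's data.
hypothetical_counts = [41, 50, 41]
stat, df = chi_square_uniform(hypothetical_counts)
p = p_value_df2(stat)
significant = p <= 0.001  # threshold stated in the text
print(f"chi-square = {stat:.3f}, df = {df}, p = {p:.4f}, significant = {significant}")
```

The closed-form p-value applies only to items with three options (two degrees of freedom); a four-option item such as question 12 would need the chi-square distribution with three degrees of freedom, for example from a statistics library.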

Results

The Cronbach's alpha for the administered questions was 0.859, indicating good internal consistency of the questionnaire. Students provided constructive feedback and expressed a high acceptance of the assessment process, as demonstrated by the data in Table 1. The statistical analysis of the participants' responses is shown in Table 2.

Table 1. Summary analysis of the administered questionnaire.

Abbreviations: OSPE, objective structured practical examination.

Table 2. Statistical analysis of the responses of the participants to the administered questionnaire.

Note: The table shows the chi-square values and the corresponding p-values. Cronbach's alpha for the questionnaire = 0.859.

Abbreviations: p, probability-value; OSPE, objective structured practical examination.

Of 132 students assessed in batches of 50 over three days, 31.1% had prior knowledge of OSPE. More than 80% reported that the instructions provided before starting the OSPE protocol were clear and easy to understand. Additionally, approximately 96% believed that the time allotted for each station was sufficient to complete their tasks. Over 80% of the students felt that OSPE was more effective than traditional assessment methods. Regarding the instructors' attitudes, about 76% considered them to be more positive. However, 27.3% found OSPE stressful compared to the simpler spotting exercises they faced in Biochemistry practical examinations during their internal mock preparatory tests.
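For readers who want to relate the reported percentages to head counts, the arithmetic can be sketched as below. The 150 invited and 132 responding students and the two percentages come from the text; the rounded counts are approximations derived from them, not figures reported by the authors, and the helper name `approx_count` is illustrative.

```python
def approx_count(percent, n):
    """Approximate number of respondents behind a reported percentage."""
    return round(percent / 100 * n)


invited = 150     # questionnaire links sent
responded = 132   # responses received

response_rate = 100 * responded / invited          # percentage of invitees who responded
prior_knowledge = approx_count(31.1, responded)    # students reporting prior knowledge of OSPE
stressed = approx_count(27.3, responded)           # students who found OSPE stressful

print(f"Response rate: {response_rate:.1f}%")
print(f"Approx. students with prior knowledge: {prior_knowledge}")
print(f"Approx. students reporting stress: {stressed}")
```

Recomputing the percentage from each rounded count gives back the reported figure to one decimal place, which is a quick consistency check on the reported values.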

Factors contributing to this anxiety may include the lack of adequate mock exams before the actual assessment, insufficient prior knowledge of OSPE, continuous observation by examiners during the examination, and being evaluated against checklists. Nevertheless, most students expressed confidence that they would perform stress-free during their professional examinations. The analysis of the 27.3% of students who experienced stress will be included as an aspect of this study in future extensions [15]. Figures 2 and 3 show detailed analyses of the OSPE feedback conducted under different headings in pie charts and bar graphs.

Figure 2. Pie charts depicting the students' perception of the overall conduct of OSPE

Figure 3. Bar graphs depicting the viewpoints of participants on OSPE as an assessment tool

Discussion

OSPE has gained worldwide recognition as an improved method for teaching, learning, and assessment [16]. The highlights of its benefits include a focus on practical skills, a structured and objective way of assessment, constructive feedback, alignment with the competencies, and encouragement of students for active learning [17]. Several studies further pointed out that OSPE provides objective, uniform, and focused assessment, thereby providing an unbiased evaluation to the students [18]. This agrees with the findings reported by Mard & Ghafouri, who mention that OSPE increases the satisfaction of medical students. About 86% of students believed that OSPE tests all the skill domains of the assessment [19]. Rajkumar et al. found that OSPE is a significantly better assessment tool for evaluating practical skills in all preclinical subjects [20]. Ramachandra et al., in their study, emphasized the acceptance of OSPE in the medical curriculum by both students and faculty [21]. A study conducted at King Saud University, Saudi Arabia, by Alsaif et al. observed that mock OSPE sessions conducted before the professional examinations reduced the stress of MBBS students and improved their performance [22].

Regarding bias elimination, 77.7% agreed that introducing OSPE is expected to reduce bias, while 16.9% remained neutral. In addition, 89.4% agreed that OSPE improves and encourages the learner to pay more attention to practical examinations. These findings align with those of Prasad et al., who indicated that "the OSPE format was perceived more favourably by students and resulted in a higher average score" [15]. OSPE could also be used to examine some characteristics of postgraduate students, such as performance during procedures, recognition of molecular techniques and biomarkers, interpretation of results, and diagnostics. Alongside these positive aspects, implementing OSPE presents significant challenges that must be acknowledged. The OSPE format is often criticized for the resources and time required for its arrangement and conduct, especially the need for large manpower, preparation of pre-defined checklists, large spaces/halls, skill labs, simulators, and well-trained staff [23]. Another concern is the exhaustion of examiners by the end of the rotation, which may result in biased or unfair evaluation [24].

Turning to the findings of our study, all criteria from 1-7, given to assess the students' perception of the overall conduct of OSPE, showed significant differences in response patterns, except for the first criterion, "Do you have any prior exposure/knowledge/understanding of OSPE?". The p-value for the first question is greater than 0.001, indicating no statistically significant difference in the distribution of responses. This indicates that students' responses ("Yes", "No", "Somewhat") to this question were evenly distributed or exhibited no clear pattern. The results support the effectiveness and acceptability of OSPE as an assessment tool, with positive feedback regarding its relevance, adequacy of time, clarity, and effectiveness. Further, the results for all four criteria from 8-11 showed highly significant differences in responses, emphasizing a strong positive viewpoint of OSPE as an assessment tool. Students recognize its effectiveness in assessing knowledge, eliminating bias, developing positive teacher interactions, and encouraging students to focus on practical skills. This again underlines OSPE's suitability and acceptability as a modern assessment method. An exceptionally small p-value was obtained for the last criterion, demonstrating a very strong and clear pattern in the data. It shows that the responses were not evenly distributed; instead, there was a significant preference for one option. Students agreed on one specific option, namely the effectiveness of OSPE in assessing the diverse skills of the learner. This result further validates the robustness of the observations, highlighting a definitive and consistent agreement among the participants.

The study evaluates OSPE from students' insights, but a few limitations need to be addressed. Firstly, a proper, large area was not fixed and identified for each station; providing one would improve the way OSPE is conducted. Secondly, students from different institutions were not included. Future research should be conducted across multiple institutions, particularly in areas with varying resources, to make the findings generalizable. Lastly, the sample size needs to be increased to improve the power of the study. A larger sample with diverse participants from varying educational and socio-economic backgrounds will give a broader perspective.

Conclusion

Students provided positive feedback, indicating that this evaluation enhances their learning. OSPE was perceived as an effective tool for both teaching and assessment. Therefore, it seems to be a student-friendly, effective, and less biased method for laboratory-based examinations. Overall, students' perceptions tend to favor OSPE. Future research should concentrate on ways to enhance the process of standardizing OSPE; this will pave the way for consistent evaluation criteria.

Ethical considerations

The study, titled "Objective structured practical examination: A better assessment tool to understand the magnitude of perception towards undergraduate biochemistry practical examination over traditional methods," was approved by the Office of the Institutional Ethics Committee, Jawaharlal Nehru Medical College, Aligarh, Uttar Pradesh, under Proposal Reference Number (IECJNMC/1655). Verbal consent was obtained voluntarily from each student who was willing to participate in this research.

Artificial intelligence utilization for article writing

Grammarly was utilized to refine the English language used in the document.

Acknowledgments

Infrastructure facilities provided by the Department of Biochemistry, Jawaharlal Nehru Medical College, under the DST (FIST & PURSE) program are gratefully acknowledged. The authors acknowledge Professor Najmul Islam, Chairperson, Department of Biochemistry, for his able guidance. We would like to thank all the students who participated in this study.

Conflict of interest statement

The authors declare that they have no discernible competing financial interests or personal connections that could be interpreted as influencing the conclusions made in this work.

Author contributions

Study Idea or Design, Data Collection, Data Analysis, and Interpretation: FI, SH, YS, IA and SM. Manuscript Preparation, Review, and Editing: FI, SH. Final Approval: FI, SH, YS, IA and SM.

Funding

This research did not receive a specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Data availability statement

Data will be made available at a reasonable request.

Article Type: Original Research

Subject:

Medical Education

Received: 2024/09/28 | Accepted: 2025/04/23 | Published: 2025/07/13


References

1. Abbasi M, Shirazi M, Torkmandi H, Homayoon S, Abdi M. Impact of teaching, learning, and assessment of medical law on cognitive, affective and psychomotor skills of medical students: a systematic review. BMC Medical Education. 2023;23(1):703. [DOI]

2. Harden RM, Stevenson M, Downie WW, Wilson GM. Assessment of clinical competence using objective structured examination. British Medical Journal. 1975;1(5955):447-51. [DOI]

3. Lakum NR, Maru AM, Algotar CG, Makwana HH. Objective structured practical examination (OSPE) as a tool for the formative assessment of practical skills in the subject of physiology: a comparative cross-sectional analysis. Cureus. 2023;15(9):e46104. [DOI]

4. Abraham RR, Upadhya S, Torke S, Ramnarayan K. Student perspectives of assessment by TEMM model in physiology. Advances in Physiology Education. 2005;29(2):94-7. [DOI]

5. Harden RM, Gleeson FA. Assessment of clinical competence using an objective structured clinical examination (OSCE). Medical Education. 1979;13(1):39-54. [DOI]

6. Kundu D, Das HN, Sen G, Osta M, Mandal T, Gautam D. Objective structured practical examination in biochemistry: an experience in medical college, Kolkata. Journal of Natural Science, Biology, and Medicine. 2013;4(1):103. [DOI]

7. Howley LD. Performance assessment in medical education: where we’ve been and where we’re going. Evaluation & the Health Professions. 2004;27(3):285-303. [DOI]

8. Nundy S, Kakar A, Bhutta ZA, Nundy S, Kakar A, Bhutta ZA. Developing learning objectives and evaluation: multiple choice questions/objective structured practical examinations. How to Practice Academic Medicine and Publish from Developing Countries? A Practical Guide. 2022:393-404 [DOI]

9. Croft H, Gilligan C, Rasiah R, Levett-Jones T, Schneider J. Current trends and opportunities for competency assessment in pharmacy education–a literature review. Pharmacy. 2019;7(2):67. [DOI]

10. Faldessai N, Dharwadkar A, Mohanty S. Objective-structured practical examination: a tool to gauge perception and performance of students in biochemistry. Asian Journal of Multidisciplinary Studies. 2014;2(8):32-38.

11. Nayar U, Malik SL, Bijlani RL. Objective structured practical examination: a new concept in assessment of laboratory exercises in preclinical sciences. Medical Education. 1986;20(3):204-209. [DOI]

12. Adams NE. Bloom's taxonomy of cognitive learning objectives. Journal of the Medical Library Association. 2015;103(3):152-153. [DOI]

13. Krejcie RV, Morgan DW. Determining sample size for research activities. Educational and Psychological Measurement. 1970;30:607-610. [DOI]

14. Cronbach LJ. Coefficient alpha and the internal structure of tests. Psychometrika. 1951;16(3):297-334. [DOI]

15. Prasad HK, Prasad HK, Sajitha K, Bhat S, Shetty KJ. Comparison of Objective Structured Practical Examination (OSPE) versus conventional pathology practical examination methods among the second-year medical students—a cross-sectional study. Medical Science Educator. 2020;30:1131-1135. [DOI]

16. Brazeau C, Boyd L, Crosson J. Changing an existing OSCE to a teaching tool: the making of a teaching OSCE. Academic Medicine. 2002;77(9):932. [DOI]

17. Pasi R, Babu TA, Kalidoss VK. Development and validation of structured training module for healthcare workers involved in managing pediatric patients during COVID-19 pandemic using “Objective Structured Clinical Examination” (OSCE). Journal of Education and Health Promotion. 2023;12(1):15. [DOI]

18. Charles J, Janagond A, Thilagavathy R, Ramesh V. Evaluation by OSPE (Objective Structured Practical Examination)–a good tool for assessment of medical undergraduates–a study report from Velammal medical college, Madurai, Tamil Nadu, India. IOSR Journal of Research & Method in Education. 2016;6:1-6. [DOI]

19. Mard SA, Ghafouri S. Objective structured practical examination in experimental physiology increased satisfaction of medical students. Advances in Medical Education and Practice. 2020;651-659. [DOI]

20. Rajkumar KR, Prakash KG, Saniya K, Sailesh KS, Vegi P. OSPE in anatomy, physiology and biochemistry practical examinations: perception of MBBS students. Indian Journal of Clinical Anatomy and Physiology. 2016;3(4):482-4. [DOI]

21. Ramachandra SC, Vishwanath P, Ojha VA, Prashant A. Objective structured practical examination (OSPE) improves student performance: a quasi-experimental study. Journal of Medical Education & Research. 2021;23(3):149-54. [DOI]

22. Alsaif F, Alkuwaiz L, Alhumud M, et al. Evaluation of the experience of peer-led mock objective structured practical examination for first-and second-year medical students. Advances in Medical Education and Practice. 2022;13:987. [DOI]

23. Alkhateeb N, Salih AM, Shabila N, Al-Dabbagh A. Objective structured clinical examination: challenges and opportunities from students' perspective. PLoS One. 2022;17(9):e0274055. [DOI]

24. Furmedge DS, Smith LJ, Sturrock A. Developing doctors: what are the attitudes and perceptions of year 1 and 2 medical students towards a new integrated formative objective structured clinical examination? BMC Medical Education. 2016;16:1-9. [DOI]

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.