

Volume 18, Issue 1 (2025)

J Med Edu Dev 2025, 18(1): 123-131


Ethics code: IEC/2023/06, dated 16.10.23


Chattopadhyay S. An analytic study of knowledge retention at different time intervals among junior residents following recapitulation of presented slides silently at the end of teaching session. J Med Edu Dev 2025; 18 (1) :123-131

URL: http://edujournal.zums.ac.ir/article-1-2151-en.html


Department of Anaesthesiology, Midnapore Medical College, Midnapore, West Bengal, India. Email: sumanc24@gmail.com

Keywords: Junior resident, slide presentation, multiple-choice questions, knowledge retention, teaching strategies


Abstract

Background & Objective: Facilitators often wonder how best to conclude a learning session, and there is no clear answer. This novel study analyzes the impact of silently recapitulating the presented slides at the end of a session on immediate and short-term knowledge retention among Junior Residents (JRs), tested at different time intervals.

Material & Methods: This single-center, prospective, non-randomized interventional study was conducted at Midnapore Medical College in India. Fifteen postgraduate JRs in Anaesthesiology attended 14 knowledge-based teaching sessions, and 22 JRs participated in the subsequent 14 sessions. Teaching sessions were allocated sequentially and non-randomly: 50% ended with a silent recapitulation of the PowerPoint slides after the Take-Home Messages (THM) (Study group, n = 14 sessions), and the other 50% ended with a discussion of THM only (Control group, n = 14 sessions). All participating JRs were assessed with five different Multiple Choice Questions (MCQs) on the first and seventh days after each session and again at Internal Assessment (IA) after 2 months. Data were analyzed using paired t-tests for within-group comparisons, unpaired two-tailed t-tests for between-group comparisons, and ANOVA across the three testing time points.

Results: Day 1 MCQ scores were significantly higher in the study group than in the control group (82.0 vs. 65.2, p < 0.01). However, mean day 7 MCQ scores in both groups differed significantly from the day 1 scores but were similar to each other (71.2 and 73.5, p = 0.29). IA scores improved over day 7 MCQ scores in both the study group (71.2 vs. 82.0, p < 0.05) and the control group (73.5 vs. 83.2, p < 0.05).

Conclusion: For JRs, silent recapitulation of slides at the end of a session resulted in significantly better short-term knowledge retention than in the control group.

Introduction

Knowledge retention after a teaching session is an important measure of its effectiveness. Facilitators generally present using PowerPoint slides, interact with the audience, and discuss the critical take-home messages at the end of a teaching-learning session. Knowledge retention is usually checked at Internal Assessment (IA). The current study examined an unexplored facet of medical education: whether recapitulating PowerPoint slides at the end of a teaching session helps students retain knowledge in the short and intermediate term.

PowerPoint slides are preferred for most knowledge-based teaching-learning sessions [1, 2]. To be effective as a learning tool, presentation slides should be clear, concise, to the point, well written, and consistent in design (font, spacing, and margins) throughout [3]. A slide should generally contain no more than six lines or six elements, with visuals designed so that it can be read within one minute [2]. Because our study focused on the effect of recapitulating PowerPoint slides, the slides were prepared according to these guidelines.

Students learning factual knowledge should be assessed through active recall and retested at expanding time intervals, which makes learning more effective and ensures optimal long-term retention [4]. The effectiveness of retrieval practice depends on how vulnerable the yet-to-be-learned information is to forgetting [3, 4]. Spaced repetition, in which factual knowledge is recalled through tests of the relevant content, is beneficial when learning is interspersed with activities that interfere with it (such as clinical duties and classes on other topics) [5]. Evaluating postgraduate students after a class through formative assessment alone is challenging, as it depends on individual perspectives [6]. To reinforce learning, it is important to reiterate the key Take-Home Messages (THM) of the session [7]. Facilitators generally do this verbally at the end of the discussion, most of them summarizing the session's THM. However, verbal discussion takes time, and by the end of class most students' focus has often waned. 'Our eyes can read faster than our mouth can tell' [8]. The basic premise of this study is that going through the slides again, without vocalization, at the end of class will improve students' grasp of concepts without further loading an already overburdened auditory channel. This study tested whether discussing THM with silent recapitulation is superior to discussing THM alone by assessing test scores at pre-fixed intervals.

Periodic online quizzes are highly beneficial learning tools because they compel students to keep working persistently alongside other ongoing class activities [9]. Quiz-based learning is more engaging and interactive and increases curiosity, thus propelling advanced learners toward self-directed learning [10]. Google Forms quizzes have proven to be effective tools for learning and assessment [11]. Multiple-Choice Questions (MCQs) can assess the application of knowledge as well as Open-Ended Questions (OEQs) can, and MCQs and OEQs correlate highly when both are fact-based [12]. Short Answer Questions (SAQs) are a commonly used form of OEQ. For tests to enhance knowledge, they must be given relatively soon after the learning exercise and be derived specifically from the information learned [13]. Some authors have found Extended Multiple Choice Questions (EMCQs) well suited to assessing clinical reasoning in residents [14]. In this study, we used MCQ scores one day and one week after sessions, SAQs for the first IA, and EMCQs for the second IA. More studies are needed to compare these two IA techniques.

The National Medical Commission (NMC) of India stipulates that the presentation should not exceed one-third of the allotted time, with the rest of the time reserved for interaction and formative feedback. In postgraduate curricula, IA should be held at least once every three months [15]. The class schedule and IAs for this prospective study were planned according to these guidelines.
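The two NMC timing rules can be written as a small planning check. This is a hypothetical helper, not something used in the study: it takes the session length in minutes and approximates "three months" as 92 days (the longest span of three calendar months). The 60-minute session and the IA dates in the usage lines are illustrative only.

```python
from datetime import date


def session_time_budget(allotted_minutes: float) -> tuple[float, float]:
    """Apply the one-third rule: the presentation may use at most 1/3 of the session.

    Returns (max_presentation_minutes, min_interaction_minutes).
    """
    if allotted_minutes <= 0:
        raise ValueError("allotted_minutes must be positive")
    max_presentation = allotted_minutes / 3
    return max_presentation, allotted_minutes - max_presentation


def ia_gaps_ok(ia_dates: list[date], max_gap_days: int = 92) -> bool:
    """True if consecutive IAs are at most max_gap_days apart.

    92 days is an assumed stand-in for "three months"; adjust as needed.
    """
    ordered = sorted(ia_dates)
    return all((later - earlier).days <= max_gap_days
               for earlier, later in zip(ordered, ordered[1:]))


# Hypothetical usage: a 60-minute session and two made-up IA dates.
print(session_time_budget(60))                             # (20.0, 40.0)
print(ia_gaps_ok([date(2024, 1, 15), date(2024, 3, 15)]))  # True (60 days apart)
```

A 60-minute slot would leave 20 minutes for slides, which matches the 20-minute presentation length mentioned in the limitations, though the actual session length is not stated here.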

Although teaching sessions are usually described as large-group or small-group sessions, the current classification also includes medium-sized groups of 15 to 34 learners [16]. This matches the total Junior Resident (JR) strength of a typical postgraduate department. Our department had 15 to 22 participating JRs during this study, conforming to the medium-sized group classification. When to test remains an open question, as the spacing between tests matters for knowledge retrieval. Tests on day 1, day 7, and at 2 months are adequately spaced and have been used in earlier studies [14].
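The spaced testing schedule reduces to a date calculation. The helper below is illustrative and its names are hypothetical. It assumes "day 1" and "day 7" mean one and seven calendar days after the session. Because the exact IA dates are not given here, it places the IA two calendar months after the start of the block of sessions it covers, clamping to the end of the month when needed.

```python
import calendar
from datetime import date, timedelta


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the length of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def assessment_schedule(session_day: date, block_start: date) -> dict[str, date]:
    """Spaced test dates for one session (hypothetical helper).

    Day 1 and day 7 MCQs follow the session itself; the IA is assumed to fall
    two calendar months after the start of the block of sessions it covers.
    """
    return {
        "day1_mcq": session_day + timedelta(days=1),
        "day7_mcq": session_day + timedelta(days=7),
        "internal_assessment": add_months(block_start, 2),
    }


# Made-up dates, for illustration only.
for label, when in assessment_schedule(date(2024, 1, 10), date(2024, 1, 3)).items():
    print(label, when.isoformat())
```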

This study compares sessions that ended with silent recapitulation of PowerPoint slides after discussion of THM against sessions that ended with take-home messages only. Knowledge retention was assessed with MCQ quizzes one day and one week after each teaching session, and with SAQs and EMCQs at IAs held two months after the start of each session. To our knowledge, no previous study has analyzed this intervention of silent slide recapitulation combined with spaced-interval testing, comparing its efficacy with that of take-home messages alone.

The null hypothesis was that students' performance would not differ between sessions ending with silent recapitulation of slides and sessions without slide recapitulation when tested on day 1 and day 7 after the teaching session and at internal assessment. The alternative hypothesis was that there would be a significant difference between the groups at the different evaluation times.

Materials & Methods

Design and setting(s)

This prospective, non-randomized, interventional (quasi-experimental) trial of two classroom-based medical education techniques was conducted in the postgraduate Department of Anaesthesiology and Critical Care, Midnapore Medical College, West Bengal, India, over four months from October 2023 to January 2024, after approval from the Institutional Ethics Committee (IEC) and with written informed consent from all JRs.

Participants and sampling

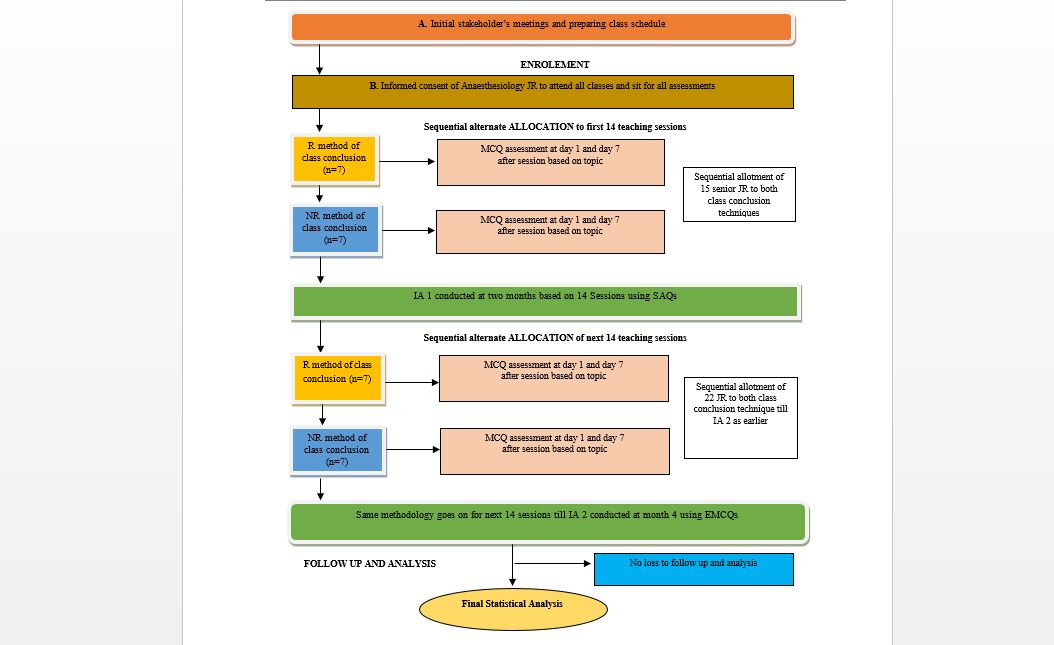

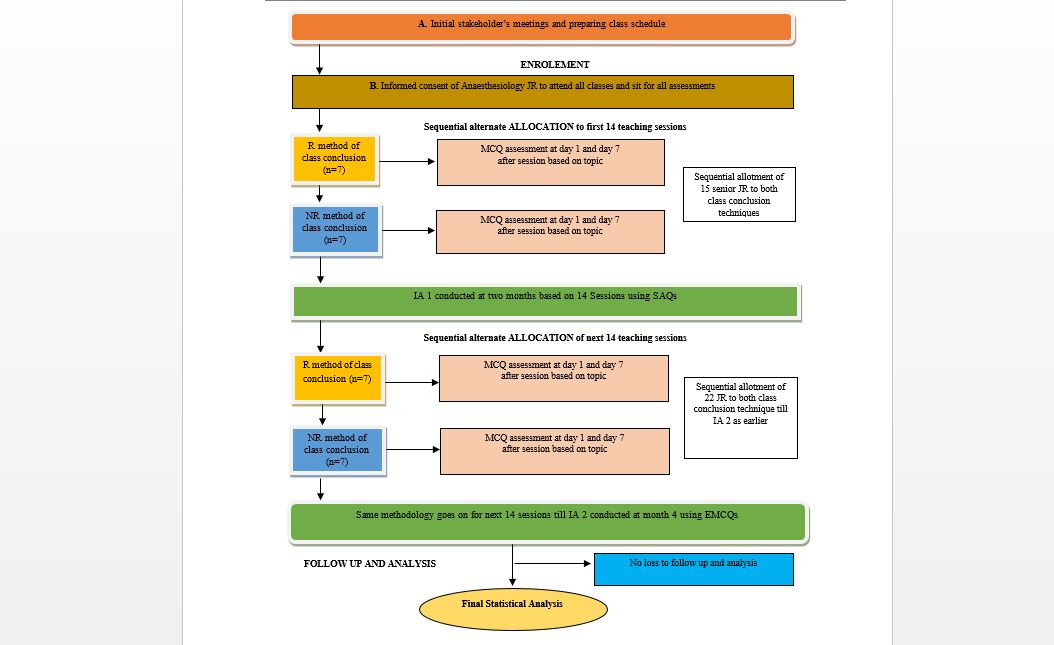

All postgraduate JRs of the Department of Anaesthesiology of a tertiary care government medical college in eastern India who attended all medium-sized-group, knowledge-based sessions with PowerPoint presentations (student seminars, faculty lectures, case presentation tutorials, etc.) were included in the study. In the first half of the study, up to the first IA, 15 second- and final-year JRs were included, as the first-year JRs had just joined. In the second half, all 22 JRs participated, including seven consenting first-year JRs. We used purposive sampling to include all eligible and willing JRs. The exclusion criteria were attendance at sessions that did not use PowerPoint slides, THM, or both, and refusal of consent or unavailability during testing. Half of all knowledge-based teaching sessions ended with the teachers silently recapitulating the presented slides after the THM (Study group; Group R, Recapitulation group, n = 14 sessions). The other half ended with a discussion of THM only, with no recapitulation of slides (Control group; Group NR, Non-Recapitulation group, n = 14 sessions). Sessions alternated between the two conclusion techniques, and all JRs were allotted to the two groups (R and NR) alternately (1:1 ratio) in a sequential, non-randomized, purposive manner (Figure 1).

Figure 1. Flow chart of study of sequential allotment and assessment of anaesthesiology JRs
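The alternating allocation of session endings is a simple deterministic sequence. The sketch below reproduces it under one assumption: which arm opened the sequence is not stated, so the starting arm is a parameter (defaulting, hypothetically, to R). With 28 sessions, strict alternation yields 14 per arm, and each study half of 14 sessions also gets 7 of each. That split is a property of this sketch, not a reported detail.

```python
from collections import Counter


def allocate_sessions(n_sessions: int, first_arm: str = "R") -> list[str]:
    """Alternate session endings between the two arms.

    "R"  = THM followed by silent slide recapitulation (study group)
    "NR" = THM only (control group)
    Which arm opened the sequence is an assumption; the text does not say.
    """
    if first_arm not in ("R", "NR"):
        raise ValueError("first_arm must be 'R' or 'NR'")
    second_arm = "NR" if first_arm == "R" else "R"
    return [first_arm if i % 2 == 0 else second_arm for i in range(n_sessions)]


arms = allocate_sessions(28)
print(Counter(arms))        # 14 sessions per arm
print(Counter(arms[:14]))   # first 14 sessions (first study half)
```

Alternation, unlike randomization, makes the sequence fully predictable. That is one reason the design is described as quasi-experimental.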

Tools/Instruments

The mean group scores from the Google Forms quizzes were entered into an Excel spreadsheet by topic, with separate subheadings for day 1, day 7, and IA for both groups. Mean scores were recorded to one decimal place. Entries were cross-checked and cleaned of any aberrations.
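The spreadsheet layout described above (topic, group, time point, mean score to one decimal) maps onto a simple record type. The sketch is a hypothetical illustration: the field names are invented, and the validity checks (known group, known time point, score within 0 to 100) are assumed examples of "aberrations", not the author's documented cleaning rules.

```python
from dataclasses import dataclass

VALID_GROUPS = {"R", "NR"}
VALID_TIMEPOINTS = {"day1", "day7", "IA"}


@dataclass(frozen=True)
class ScoreRecord:
    topic: str
    group: str         # "R" or "NR"
    timepoint: str     # "day1", "day7" or "IA"
    mean_score: float  # group mean score, as a percentage of marks


def clean(records: list[ScoreRecord]) -> tuple[list[ScoreRecord], list[ScoreRecord]]:
    """Round valid entries to one decimal place and set aside suspicious ones."""
    kept: list[ScoreRecord] = []
    flagged: list[ScoreRecord] = []
    for rec in records:
        valid = (rec.group in VALID_GROUPS
                 and rec.timepoint in VALID_TIMEPOINTS
                 and 0 <= rec.mean_score <= 100)
        if valid:
            kept.append(ScoreRecord(rec.topic, rec.group, rec.timepoint,
                                    round(rec.mean_score, 1)))
        else:
            flagged.append(rec)  # left for manual cross-checking
    return kept, flagged


# Hypothetical entries; the second looks like a data-entry slip.
kept, flagged = clean([
    ScoreRecord("Topic A", "R", "day1", 78.04),
    ScoreRecord("Topic A", "NR", "day1", 780.0),
])
print(kept, flagged)
```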

Data analysis

All mean test scores were compared. In addition to inter-group comparisons, intra-group comparisons of mean scores over time (day 1, day 7, and IA) were made for both groups R and NR. Data were described as mean, standard deviation, 95% confidence interval, and percentage, as appropriate. A paired t-test was used for intra-group comparisons, unpaired two-tailed t-tests were used for inter-group comparisons, and ANOVA (analysis of variance) was used to compare mean scores across the three time points. Small Stata 14 (StataCorp LP, Texas, USA; 2017 version) was used for statistical analysis, and Excel was used to prepare charts. Statistical significance was set at p < 0.05.
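The three tests named above each reduce to a short formula. The stdlib-only sketch below computes the test statistics and degrees of freedom. It assumes Student's pooled-variance form for the unpaired test, since the text does not say whether Welch's correction was used. It returns statistics rather than p-values; the two-sided p-values come from the t (or F) distribution with the returned degrees of freedom, as Stata's `ttest` and `oneway` commands or `scipy.stats.ttest_rel`, `ttest_ind`, and `f_oneway` report them. The example data are invented.

```python
import math
from statistics import mean, stdev


def paired_t(first: list[float], second: list[float]) -> tuple[float, int]:
    """Paired t statistic and df (e.g. day 7 vs IA for the same sessions)."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(first, second)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / math.sqrt(n)), n - 1


def unpaired_t(x: list[float], y: list[float]) -> tuple[float, int]:
    """Student's two-sample t statistic (pooled variance) and df."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * stdev(x) ** 2 + (ny - 1) * stdev(y) ** 2) / (nx + ny - 2)
    t = (mean(x) - mean(y)) / math.sqrt(pooled_var * (1 / nx + 1 / ny))
    return t, nx + ny - 2


def one_way_anova(*groups: list[float]) -> tuple[float, int, int]:
    """One-way ANOVA F statistic with (df_between, df_within).

    Treats each time point as an independent group; a repeated-measures
    ANOVA would additionally model that the same sessions recur.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean([v for g in groups for v in g])
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between, df_within = k - 1, n_total - k
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


# Made-up session means (percent), for illustration only.
group_r_day1 = [84.0, 80.0, 86.0, 78.0]
group_nr_day1 = [66.0, 70.0, 62.0, 64.0]
print(unpaired_t(group_r_day1, group_nr_day1))
```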

Results

Most junior residents attended the day 1 and day 7 MCQ tests and both two-monthly IA sessions. However, not all JRs could always participate, owing to the emergency nature of patient care in anaesthesiology (for example, emergency surgery in progress in the operating room), internet connectivity problems, and medical issues. Attendance ranged from 72.7% to 100% across sessions, and most assessment sessions had an attendance of 80% or more.

A total of 28 classes were held. Half ended with the teachers silently recapitulating the PowerPoint slides after the THM (Study group; Group R, n = 14), and the other half ended with a discussion of THM only, with no recapitulation of the presented slides (Control group; Group NR, n = 14). Teaching sessions alternated between the two conclusion techniques. All JRs were allotted to groups R and NR alternately in a 1:1 ratio, in a sequential, non-randomized, purposive manner, and were assessed with 5 MCQs each on day 1 and day 7 and again at IA after 2 months.

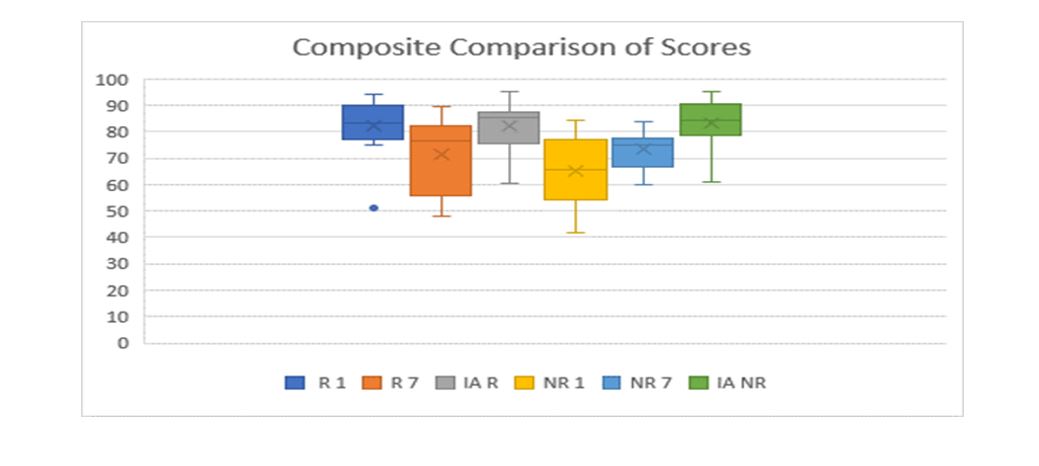

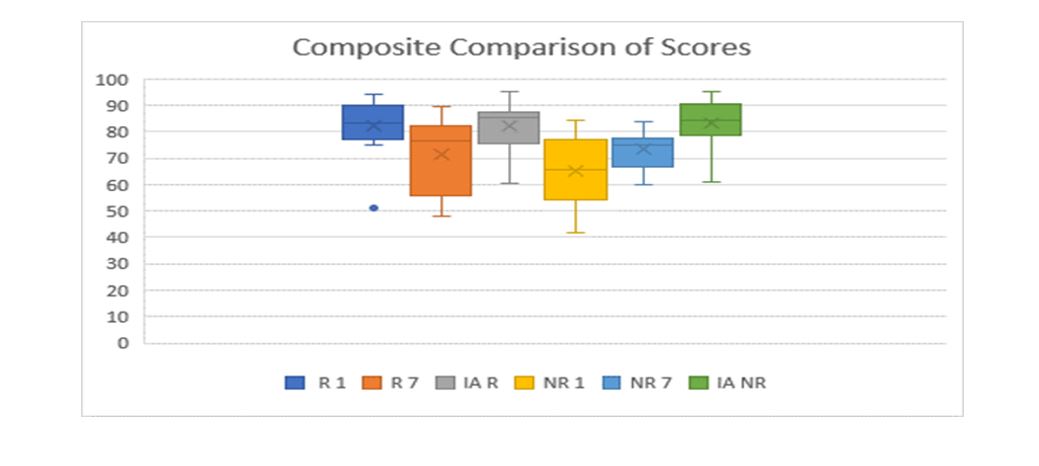

Figure 2 shows a composite box-and-whisker plot of all scores across groups, with each group's mean, median, and interquartile range. R1, R7, and IA R are the scores obtained on day 1, on day 7, and at IA in group R, and NR1, NR7, and IA NR are the corresponding scores in group NR. The Y-axis denotes the percentage of marks obtained.

Figure 2. Box-and-whisker plot of the group scores showing mean, median, and interquartile range

Note: R1, R7 and IA R are scores obtained at day 1, day 7 and IA in group R; NR1, NR7 and IA NR are the scores at day 1, day 7 and during IA in group NR.
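The summary values drawn in such a plot can be computed directly. The sketch uses Python's `statistics` module on toy numbers, not study data. Quartile conventions differ between software packages (Python's `quantiles` offers `method="exclusive"`, the default, and `"inclusive"`), so box edges computed this way may differ slightly from those drawn by Excel or Stata.

```python
from statistics import mean, median, quantiles


def box_summary(scores: list[float]) -> dict[str, float]:
    """Mean, median and interquartile range, as drawn in a box-and-whisker plot."""
    q1, _, q3 = quantiles(scores, n=4)  # default method="exclusive"
    return {
        "mean": mean(scores),
        "median": median(scores),
        "q1": q1,
        "q3": q3,
        "iqr": q3 - q1,
    }


print(box_summary([1, 2, 3, 4, 5, 6, 7, 8]))  # hypothetical scores
```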

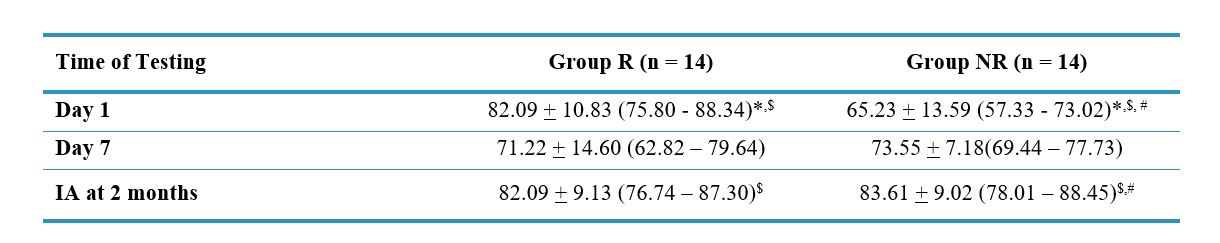

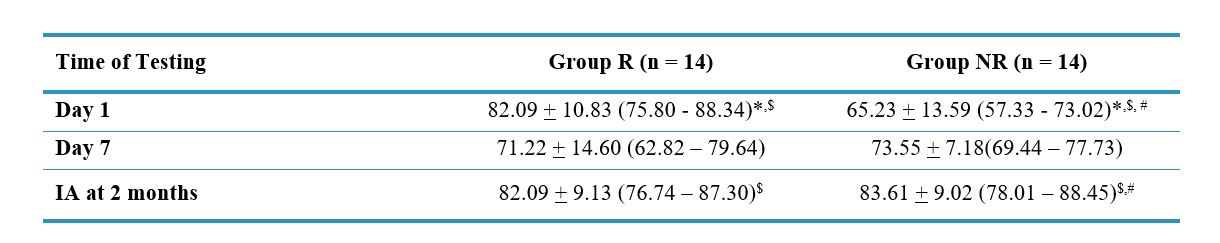

Table 1 shows the mean test scores, standard deviations (mean ± SD), and 95% Confidence Intervals (CI). Inter- and intra-group t-tests were performed on the mean scores. Mean day 1 MCQ scores were significantly higher in group R than in group NR. However, mean day 7 MCQ scores in both groups differed significantly from the day 1 scores. IA scores improved over day 7 scores in both groups. Day 7 and IA scores did not differ significantly between the groups, a trend also visible in Figure 2. There was no difference between the scores of the first and second internal assessments. Table 2 details the comparisons between the groups, using the appropriate statistical tests on the composite mean scores. Twenty-one of the 22 students responded to the informal feedback section of the second internal assessment. All of them (100%) liked the idea of summarizing slides, and 19 (90.5%) wanted the interim online assessment to continue. All students favored MCQ assessment over SAQ; 12 (57.1%) preferred standard MCQs and 9 (42.9%) preferred extended matching-type MCQs. Seventeen of the 21 students (81%) opted for the test to be held one week after teaching sessions.

Table 1. Scores recorded in the groups at different time intervals

Note: Independent t-test was used to compare scores between groups (R and NR). Repeated-measures ANOVA was used to analyze changes across time points within each group.

*Indicates a significant difference between R and NR groups on Day 1 scores.

$Denotes a significant difference between Day 7 and IA scores in both groups.

#Indicates a significant difference between Day 1 and IA scores in the NR group.

Abbreviations: n, number of participants; ±, standard deviation; IA, internal assessment; R, recapitulation group; NR, non-recapitulation group; ANOVA, analysis of variance.
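Table 1 reports each mean with its SD and 95% CI. Assuming the CI is the usual t-based interval for a mean (the text does not state the formula), it is mean ± t(0.975, n - 1) × SD / √n. The sketch hard-codes a few standard two-sided t critical values so that it needs no statistics library. The 14 input values are invented.

```python
import math
from statistics import mean, stdev

# Two-sided 95% critical values of Student's t (standard table values).
# Only a few degrees of freedom are listed; extend the table as needed.
T_CRIT_95 = {13: 2.160, 14: 2.145, 20: 2.086, 21: 2.080}


def mean_ci95(scores: list[float]) -> tuple[float, float, float, float]:
    """Return (mean, SD, lower, upper) for a t-based 95% CI of the mean."""
    n = len(scores)
    df = n - 1
    if df not in T_CRIT_95:
        raise KeyError(f"no t critical value tabulated for df={df}")
    m, sd = mean(scores), stdev(scores)
    half_width = T_CRIT_95[df] * sd / math.sqrt(n)
    return m, sd, m - half_width, m + half_width


# Hypothetical session means for one group at one time point (n = 14).
m, sd, lower, upper = mean_ci95([80.0] * 7 + [84.0] * 7)
print(f"{m:.1f} ± {sd:.1f} (95% CI {lower:.1f} to {upper:.1f})")
```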

Table 2. Inter and intra-group tests and their respective values

Note: Unpaired t-test was used to compare scores between groups (R and NR) at different time points. Paired t-test was used to analyze within-group changes over time. One-way ANOVA was applied to compare mean scores across three time points within each group.

Abbreviations: R1, day 1 score in group R; NR1, day 1 score in group NR; R7, day 7 score in group R; NR7, day 7 score in group NR; IAR, internal assessment score in group R; IANR, internal assessment score in group NR; IA1, first internal assessment; IA2, second internal assessment; ANOVA, analysis of variance; P, probability value.
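The feedback percentages in the Results can be re-derived from the reported counts (21 respondents). The short check below rounds to one decimal place, matching the precision used in the text.

```python
def pct(count: int, total: int) -> float:
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100 * count / total, 1)


RESPONDENTS = 21  # JRs (of 22) who completed the feedback section of the second IA

feedback_counts = {
    "liked summarizing slides": 21,
    "wanted interim online assessment to continue": 19,
    "preferred MCQs": 12,
    "preferred EMCQs": 9,
    "wanted the test one week after the session": 17,
}

for item, count in feedback_counts.items():
    print(f"{item}: {count}/{RESPONDENTS} = {pct(count, RESPONDENTS)}%")
```

The 12 + 9 split of format preferences accounts for all 21 respondents.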

Discussion

This study aimed to determine whether silent recapitulation of PowerPoint slides at the end of teaching sessions enhances knowledge retention among junior residents compared with sessions without this intervention, using MCQ scores on day 1 and day 7 after each session and an internal assessment conducted after 2 months. Mean day 1 scores were significantly higher in group R than in group NR. However, mean day 7 scores in both groups differed significantly from the day 1 scores and were similar to each other. IA scores improved over day 7 scores in both groups, and day 7 and IA scores did not differ significantly between the groups. When the variation in scores across the three testing intervals was assessed within group R and group NR, it was significant for both groups. There was no difference between the scores of the first and second internal assessments. All students wanted the silent recapitulation technique to continue in the future. Of the JRs, 90.5% wanted the interim online assessment to continue after the project, and all (100%) favored MCQs over SAQs. As the preferred method of post-session testing, 57.1% of JRs chose MCQs and 42.9% chose EMCQs, and 81% opted for the test to be held one week after teaching sessions.

In our study, the day 1 mean MCQ score increased significantly when the novel intervention of silent recapitulation was added to PowerPoint presentations, compared with sessions without this intervention. However, this effect tapered off by day 7, when the intergroup difference was no longer significant. Previous studies of other educational techniques, such as implementing MCQs or adding videos and explanations to PowerPoint presentations to make them more engaging, have also observed an initial improvement in scores that recedes over time [17, 18]. The day 7 decline was probably influenced by our not sharing the slides with students until the day 7 assessment was complete. IA scores improved over day 7 scores in both groups. In the study group, IA scores were even comparable to the day 1 scores, while in the control group (NR), scores improved gradually over time. Such improvement over time at summative examinations has been shown to result from repeated testing, which enables learning [19]. The MCQs were constructed to highlight the correct response and thus served as feedback. Feedback is crucial to learning from tests: although testing improves retention even without feedback [21], feedback enhances its benefits by correcting errors and confirming correct responses [20, 21]. Tests should be given often and spaced out in time to promote better retention [19-21]. In our study, tests were conducted on day 1, day 7, and at 2 months, a spaced schedule in line with the concept of test-enhanced learning [14]. A surprising result was that mean scores did not differ between the first and second IAs. Final- and second-year JRs took the first IA, while first-year JRs were added for the second IA.
Feedback during the MCQ tests, repeated testing, and the availability of the slides before the later tests probably enhanced the retention of first-year JRs, irrespective of the senior JRs' existing knowledge base. We also ensured that only basic topics were taught after the first-year JRs joined. The first IA was based on SAQs and conducted in the classroom, while the second was based on online EMCQs in Google Forms. MCQs can assess the application of knowledge as well as SAQs can, and MCQs correlate highly with OEQs such as SAQs when both are content-oriented [12]. The similar mean scores obtained with the two techniques suggest that IA could move from the classroom to online, which is particularly important given faculty shortages and a general reluctance to assess [22]. Of the JRs, 90.5% wanted the MCQ post-tests to continue. When asked about the preferred MCQ format, 57.1% chose MCQs and 42.9% chose EMCQs, and 81% opted for the test to be held one week after teaching sessions. This agrees with a 2023 study in which students wanted to be involved in their own MCQ testing [23]. A systematic analysis of the implementation of technology-based interventions observed that changing professional practice requires consideration of the organizational context and clinical workload [24]. We were rigorous in initial planning, test implementation, maintaining test standards, providing feedback, and conducting teaching sessions sequentially per the study protocol. This was achieved despite a heavy workload, faculty shortages, and other hindrances, and the project concluded with positive feedback favoring continuation of both the silent recapitulation of slides at session end and the testing. However, the project has some limitations.
As this was a short, focused pilot project completed within a limited time, we did not assess students' perceptions of the quality of the MCQs, the slides, or the facilitator/moderator interactions. Similarly, teachers' and facilitators' overall perceptions of student performance and interaction were not evaluated. The study focused on overall group performance rather than individual performance. Another limitation is that the study was conducted with a medium-sized group of advanced learners in a postgraduate department; its applicability to large-group settings and undergraduate teaching needs further evaluation. Also, a 20-minute slide presentation may be too short for some topics, so topics have to be chosen carefully to avoid affecting the progression of student learning. A significant limitation is that not all students could answer at the predetermined time because of clinical emergencies, personal illness, or other issues, and it became apparent that the test was voluntary and optional. Google Forms and other online quizzes are good assessment tools, but they have drawbacks: they depend on internet connectivity, answers may be available on the internet (which can invalidate the assessment), and, most importantly, their value depends on question framing. Although MCQs, EMCQs, and SAQs are valid assessment tools, the items used here still need post hoc assessment of validity and reliability. The final sample size of 22 was purposive, to include all JRs. A post hoc sample size calculation using the day 1 MCQ scores of groups R and NR gave 9 per group (18 in total) for 1:1 allocation, a 95% confidence level, and 80% power; the sample size of 22 was therefore adequate. Our study also had 14 sessions in each group, well above the minimal sample size. Demographic characteristics and individual identifiers of JRs were not recorded, and neither were individual performances. As email addresses were not collected in Google Forms, individual responders could not be identified.
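The post hoc sample-size statement can be checked against the standard normal-approximation formula for comparing two independent means with 1:1 allocation: n per group = 2σ²(z(1 - α/2) + z(1 - β))² / Δ². The formula and SD actually used are not stated, so this is an assumption. Δ is taken from the reported day 1 means (82.0 vs. 65.2), and σ = 12 is a hypothetical placeholder, not a value reported by the study. With that placeholder the formula gives 9 per group; a different SD would give a different n.

```python
import math
from statistics import NormalDist


def n_per_group(delta: float, sd: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group n for comparing two independent means with 1:1 allocation.

    n = 2 * sd**2 * (z_{1-alpha/2} + z_{power})**2 / delta**2, rounded up.
    Assumes a common SD in both groups and a normal approximation.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # 1.96 for a two-sided alpha of 0.05
    z_beta = z.inv_cdf(power)           # about 0.84 for 80% power
    return math.ceil(2 * sd**2 * (z_alpha + z_beta) ** 2 / delta**2)


# Illustrative only: delta from the reported day 1 means;
# sd = 12.0 is a HYPOTHETICAL placeholder.
print(n_per_group(delta=82.0 - 65.2, sd=12.0))  # 9
```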
Future studies should examine the effect of silently recapitulating the presented slides at the end of a session in a larger sample of students, using randomization and comparison with another educational technique, preferably with assessments at different time intervals (for example, at a fortnight and at a month) and inclusion in the final summative examination. The technique may also be tested in small-group settings for reinforcing skills training, and in undergraduate and super-specialty training; in principle, it suits any session that uses a slide presentation. The only investment is a few minutes to recapitulate the presented slides silently.

Conclusion

For junior residents in anaesthesiology, silently recapitulating slides at the end of a session produced significantly better short-term knowledge retention than in the control group. Scores fell by day 7 and improved at internal assessment owing to test-enhanced learning with feedback on correct MCQ responses. Most JRs wanted this innovative technique, and MCQ tests on the seventh day after each teaching session, to continue in the future.

Ethical considerations

This work was performed after obtaining permission from the respective IEC (reference no. IEC/2023/06, dated 16.10.23) and written informed consent from all participating JRs of the Department of Anaesthesiology, Midnapore Medical College, India.

Artificial intelligence utilization for article writing

We used the Grammarly application to correct spelling and grammar. No sentence suggestion was accepted.

Acknowledgments

I want to acknowledge the input of Prof. Apul Goel and Prof. Anita Rani of King George Medical University, Lucknow, who helped finalize the study concept and discussion. Dr. Sagnik Datta, Senior Resident in the Department of Anaesthesiology at Midnapore Medical College, helped with statistics and graphical representation. Dr. Debasish Bhar, Associate Professor, framed and collected some Google quizzes. All other faculty and junior residents of the Department of Anaesthesiology at Midnapore Medical College participated wholeheartedly in the study.

Conflict of interest statement

This study was my Advanced Course in Medical Education project. In February 2024, it was presented as a poster at KGMU Lucknow, India, in the presence of assessors appointed by the NMC.

Author contributions

Conception, implementation, data collection, gathering of supporting data, data analysis, and final presentation.

Funding

No funds, donations, or external help were made available for this study.

Data availability statement

All data were collected from Google Forms quizzes, recorded in an Excel sheet, and are available on request. The SAQ answer scripts from the first internal assessment are kept in physical form in the Department.

Background & Objective: Facilitators commonly ponder how to effectively conclude a learning session, and there are no clear answers. This novel study analyzes the impact of silent recapitulation of presented slides at session end on immediate and short-term knowledge retention among Junior Residents (JR) tested at different time intervals.

Material & Methods: This single-center, prospective, non-randomized interventional study was conducted at Midnapore Medical College in India. Fifteen postgraduate JR of Anaesthesiology attended 14 knowledge-based teaching sessions, while 22 JR participated in the subsequent 14 sessions. Teaching sessions were allocated in a sequential, non-randomized manner, with 50% of sessions ending with a silent recapitulation of PowerPoint slides following Take-Home Messages (THM) (Study group, n = 14 sessions), while the other 50% of sessions ended with a discussion of THM only (Control group, n = 14 sessions). All participating JRs were assessed with five different Multiple Choice Questions (MCQs) each on the first and seventh day after sessions and again at Internal Assessment (IA) after 2 months. Data analysis was performed using paired t-tests for within-group comparisons, unpaired two-tailed t-tests, and ANOVA tests for between-group comparisons.

Results: The MCQ scores on day 1 were significantly higher in the study group compared to the control group (82.0 vs. 65.2, p < 0.00). However, mean MCQ scores on day 7 in both groups were significantly different from day 1 MCQ scores but similar to each other (71.2 and 73.5, p = 0.29). The scores at IA improved from day 7 MCQ scores in both the study group (71.2 vs. 82.0, p < 0.05) and the control group (73.5 vs. 83.2, p < 0.05).

Conclusion: For JRs, there is significant short-term retention of knowledge after a silent recapitulation of slides at the end of a session compared to the control group.

Introduction

PowerPoint slides are preferred for most knowledge-based teaching-learning sessions [1, 2]. To be effective as a learning tool, the presentation slides should be clear, concise, to the point, written, and consistent throughout the design, regarding font, spacing, and margin [3]. The limit of lines in a PowerPoint slide is generally six, with no more than six elements per slide, and designed visuals to make the slide readable within one minute [2]. As our study focussed on the effect of PowerPoint slide recapitulation, the slides were prepared as per guidelines.

Students learning factual knowledge should be assessed by actively recalling information and retesting at expanding time intervals to make learning more effective and ensure optimal long-term retention of knowledge [4]. Effective retrieval practice depends on the degree to which the yet-to-be-learned information is vulnerable to forgetting [3, 4]. Spacing repetition with a recall of factual knowledge by testing relevant knowledge content is beneficial when the learning content consists of activities that interfere with learning (like duties, other classes on different topics, and so on) [5]. Evaluating postgraduate students after a class based only on formative assessment is challenging, as it depends on individual perspectives [6]. To reach out to students, it is important to reiterate the important Take-Home Messages (THM) from the session [7]. This is generally done verbally by facilitators at the end of the discussion, with most summarizing the THM of the session. However, verbal discussion takes time, and at the end of class, the focus of most students often needs to be improved. 'Our eyes can read faster than our mouth can tell' [8]. The basic concept of this study is that going through the slides again without vocalization at the end of class will improve student's grasp of concepts without further loading an overburdened auditory input. This study tested whether discussing THM with silent recapitulation is superior to discussing only THM by assessing test scores later at pre-fixed intervals.

Online quizzes used periodically are highly beneficial tools for learning because they compel students to work persistently with other ongoing class activities [9]. Quiz-based learning is more engaging and interactive while increasing curiosity, thus propelling advanced learners toward self-directed learning [10]. Google Forms with Quiz has proven to be an effective tool for learning and assessment [11]. Multiple-Choice Questions (MCQs) can be used just as well as Open-Ended Questions (OEQs) to assess the application of knowledge, and a high correlation exists between MCQs and OEQs when both are fact-based [12]. Short Answer Questions (SAQ) are commonly used as open-ended questions. The use of tests for knowledge enhancement requires that they be given relatively soon after learning exercises and derived specifically from the information learned [13]. Some authors have found Extended Multiple Choice Questions (EMCQs) well suited to clinical reasoning in residents [14]. For this study, we used MCQ scores one day and one week after sessions, while SAQs were used for the first IA and EMCQs for the second IA. More studies need to be conducted to compare these two IA techniques.

The National Medical Commission (NMC) in India has stipulated that presentation should not exceed one-third of the allotted time, while the rest of the time is for interactions and formative feedback. In postgraduate curricula, IA should be held at least once every three months [15]. Based on these guidelines, the class schedule and IA were planned for this prospective study.

Although teaching sessions are usually described as either large-group or small-group, current classifications also recognize medium-sized groups of 15 to 34 learners [16]. This is typical of the total Junior Resident (JR) strength of a postgraduate department. In our department, 15 to 22 JRs participated at the time of this study, which falls within the medium-sized range. The optimal timing of testing remains an open question, as the spacing between tests is important for knowledge retrieval. Testing on day 1, day 7, and at 2 months provides adequately spaced intervals and has been used in earlier studies [14].

This study assessed the impact on knowledge retention of silently recapitulating the PowerPoint slides at the end of teaching sessions, after discussion of THM. Retention was measured with MCQ quizzes one day and one week after each session and with SAQs and EMCQs at IAs held at two-month intervals. These sessions were compared with sessions allotted to end with take-home messages only. To our knowledge, no previous study has analyzed this intervention of silent slide recapitulation combined with spaced-interval testing and compared its efficacy with take-home messages alone.

The null hypothesis was that student performance would not differ between group teaching sessions concluded with silent recapitulation of slides and sessions without slide recapitulation, when tested on day 1 and day 7 after the session and at internal assessment. The alternative hypothesis was that there would be a significant difference between the groups at the different evaluation times.

Materials & Methods

Design and setting(s)

This prospective, non-randomized, interventional (quasi-experimental) trial of two classroom-based medical education techniques was conducted in the postgraduate Department of Anaesthesiology and Critical Care, Midnapore Medical College, West Bengal, India, over four months (October 2023 to January 2024), after approval from the Institutional Ethics Committee (IEC) and with written informed consent from all JRs.

Participants and sampling

All postgraduate JRs of the Department of Anaesthesiology of a tertiary care government medical college in eastern India who attended the medium-sized group knowledge-based sessions with PowerPoint presentations (student seminars, faculty lectures, case presentation tutorials, etc.) were included in the study. In the first half of the study, up to the first IA, 15 second- and final-year JRs were included, as the first-year JRs had only just joined. In the second half of the study, all 22 JRs participated, including seven consenting first-year JRs. Purposive sampling was used to include all eligible and willing JRs. The exclusion criteria were attendance at sessions that did not use PowerPoint slides, THM, or both, refusal of consent, and unavailability during testing. Half of all knowledge-based teaching sessions ended with silent recapitulation of the presented slides following THM by the teachers involved (Study group; Group R, Recapitulation group, n = 14 sessions). The other half ended with discussion of THM only by the teachers involved, with no recapitulation of slides (Control group; Group NR, Non-Recapitulation group, n = 14 sessions). Teaching sessions were allocated alternately to the two conclusion techniques, and all JRs were thus allotted to the two groups (R and NR) alternately (1:1 ratio) in a sequential, non-randomized, purposive manner (Figure 1).
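
The alternating allocation of sessions can be sketched in a few lines of Python. This is an illustrative reconstruction, not the author's procedure: the helper name `allocate_sessions` is hypothetical, and the assumption that the first session ended with recapitulation (group R) is ours, since the text does not say which technique came first. The sketch also splits the 28 sessions into the two blocks of 14 covered by IA 1 and IA 2.

```python
from collections import Counter


def allocate_sessions(n_sessions=28, first="R"):
    """Assign sessions alternately to the two conclusion techniques.

    Hypothetical helper: 'R' = recapitulation, 'NR' = non-recapitulation.
    Which technique came first is an assumption; the source does not say.
    """
    second = "NR" if first == "R" else "R"
    return [first if i % 2 == 0 else second for i in range(n_sessions)]


sessions = allocate_sessions()
# The first 14 sessions were assessed at IA 1 and the next 14 at IA 2.
ia1_block, ia2_block = sessions[:14], sessions[14:]

print(Counter(sessions))   # 14 R and 14 NR overall
print(Counter(ia1_block))  # 7 R and 7 NR before IA 1
print(Counter(ia2_block))  # 7 R and 7 NR before IA 2
```

Strict alternation guarantees the 1:1 balance within each IA block, which a simple random draw over 28 sessions would not.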

Figure 1. Flow chart of the study showing sequential allotment and assessment of anaesthesiology JRs

Tools/Instruments

Teaching-learning sessions were fixed at around 60 minutes each over the four months after discussion with stakeholders (i.e., faculty and JRs). Sessions were pre-planned so that only one-third of the time was used for the PowerPoint slide presentation, as per NMC guidelines. To make this feasible, a maximum of 20 PowerPoint slides per session, with around six lines per slide, was allowed, which was expected to take at most 20 minutes. The rest of the session was used for interaction, explanation, feedback, and commentary as required by the teaching facilitator (for lectures) or the teacher-moderator (for postgraduate seminars, tutorials, case presentations, etc.).

Data collection methods

At the end of the presentation, all teaching-learning sessions in both groups (R and NR) concluded with take-home messages. In group R, an additional 5 minutes was allotted for silent recapitulation of the slides. Assuming a slower-than-average silent reading speed of 200 words per minute, 20 slides with at most around 40 words each can be read in 4 minutes [8]. For practical purposes, the last 5 minutes of the session were therefore reserved so that the slides could be read at a comfortable pace. The JRs were instructed beforehand to raise their hands individually after carefully reading each presented slide silently, and the next slide was projected only when all hands had gone up.

One day after the teaching session (day 1), five MCQs were posted in the departmental WhatsApp group at a prefixed time as a link generated with the Google Forms quiz option. The class moderator discussed the questions and options with the departmental teachers after class, and all were drawn from the content of the PowerPoint slides. The overall collective (group) mean MCQ score shown by Google Forms was recorded as a percentage, session-wise, for both groups. Exactly one week after the session (day 7), a separate set of five important MCQs on the same topic was posted using the Google Forms quiz option. These questions were again agreed upon among the departmental teachers and drawn from the slide content, but differed from the day 1 questions. As before, the overall collective mean score was recorded as a percentage and compared between the groups. The Google Forms quizzes were set so that all questions had to be answered and the correct answer was revealed to respondents only after they had answered. Scores were thus shared with participants in real time on day 1 and day 7, together with feedback on the correct response to each question.
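
The time budget behind these choices can be written out as a short worked calculation. It uses only figures stated in the text (60-minute sessions, at most one-third of the time for slides, at most 20 slides of about 40 words each, and an assumed silent reading speed of 200 words per minute); the symbols are our own notation:

```latex
\begin{aligned}
t_{\text{slides}} &\le \tfrac{1}{3} \times 60\ \text{min} = 20\ \text{min} \\
t_{\text{recap}} &= \frac{20\ \text{slides} \times 40\ \text{words per slide}}{200\ \text{words per min}}
  = \frac{800\ \text{words}}{200\ \text{words per min}} = 4\ \text{min} < 5\ \text{min allotted}
\end{aligned}
```

On these assumptions, the 5-minute window leaves about one minute of slack, consistent with the stated aim of reading at a comfortable pace while waiting for all hands to go up.
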
The presenter shared the PowerPoint slides with all JRs through the WhatsApp group only after the day 7 quiz had been conducted. As stated above, sessions were assigned to group R or NR according to how they were concluded. All available JRs attended the classes and the assessment sessions. In total, 28 teaching sessions were held. Half ended with silent recapitulation of the PowerPoint slides following THM by the teachers involved (Group R, n = 14), and the other half ended with discussion of THM only, with no recapitulation of slides (Group NR, n = 14).

IA examinations were conducted at 2-month intervals using questions from the teaching sessions of both groups, and group-wise results were compared. The first IA (IA 1) consisted of multiple open-ended SAQs from each topic. It was held in the departmental seminar room after seven sessions from each of groups R and NR (14 sessions in total) had been completed. The JRs peer-assessed each other's answer scripts using a predefined rubric. The second IA (IA 2) used matching-type EMCQs delivered online through Google Forms. It was held after a further 14 sessions (seven from each group) had been completed, none of which had been covered by IA 1. The composite scores of IA 1 and IA 2 were also compared. Because the study concluded at the second IA, its Google Form also included some informal questions. These asked whether the JRs would like slide recapitulation and interim testing to continue, which of the three assessment modalities (MCQ, EMCQ, and SAQ) they preferred, and their preferred testing interval. The study methodology is detailed in Figure 1.

The mean group scores obtained in the Google Forms quizzes were entered in an Excel spreadsheet against each topic, with separate subheadings for day 1, day 7, and IA for both groups. Mean scores were recorded to one decimal place, cross-checked for correct entry, and cleaned if any aberration was found.
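
The session-wise group score is the mean of the individual quiz scores expressed as a percentage of the maximum. Below is a minimal Python sketch of that calculation and of the one-decimal recording step. It assumes one mark per question, five questions per quiz. The function name, the topic label, and the example scores are hypothetical and are not study data.

```python
from statistics import mean

MAX_SCORE = 5  # five MCQs per quiz, assumed to carry one mark each


def session_mean_percent(scores, max_score=MAX_SCORE):
    """Group mean score for one quiz as a percentage, rounded to one decimal.

    `scores` holds each respondent's number of correct answers (0..max_score).
    """
    if not scores:
        raise ValueError("no respondents for this quiz")
    if any(s < 0 or s > max_score for s in scores):
        # Simple data-cleaning check for mis-entered values.
        raise ValueError("score outside the valid range")
    return round(100 * mean(scores) / max_score, 1)


# Hypothetical spreadsheet row: one topic, day 1 and day 7 columns (IA omitted).
record = {
    "topic A (group R)": {
        "day1": session_mean_percent([5, 4, 3, 5]),
        "day7": session_mean_percent([4, 4, 3]),
    },
}
print(record)
```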

Data analysis

All mean test scores were compared. In addition to intergroup comparisons, mean scores were compared within each group over time (day 1, day 7, and IA) for both groups R and NR. Data were described as mean, standard deviation, 95% confidence interval, and percentage, as appropriate. The paired t-test was used for intragroup comparisons. The unpaired two-tailed t-test and analysis of variance (ANOVA) were used for intergroup comparisons. Statistical analysis was performed with Small STATA 14 software (StataCorp LP, 2017 version, Texas, USA), and charts were prepared in Excel. A p-value of less than 0.05 was considered statistically significant.
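
The three tests named above can be sketched with the Python standard library alone. This is not the STATA procedure the author used. It is a from-scratch illustration of Student's paired and pooled-variance unpaired t-tests and of a one-way ANOVA, with p-values computed from the regularized incomplete beta function using the standard continued-fraction evaluation. All function names are hypothetical. We assume each session's group mean is one observation, and that paired comparisons match the same session across time points. Both are our assumptions about how the session-wise data were analyzed.

```python
import math
from statistics import mean, stdev, variance


def _betacf(a, b, x, max_iter=300, eps=3e-14, tiny=1e-300):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        even = m * (b - m) * x / ((qam + m2) * (a + m2))
        odd = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        for aa in (even, odd):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:  # convergence tested on the odd step
            break
    return h


def betai(a, b, x):
    """Regularized incomplete beta function I_x(a, b), used for t and F tail areas."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def t_two_tailed_p(t, df):
    """Two-tailed p-value of Student's t with df degrees of freedom."""
    return betai(df / 2.0, 0.5, df / (df + t * t))


def paired_t(before, after):
    """Within-group test: each pair is the same session scored at two time points."""
    diffs = [y - x for x, y in zip(before, after)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1, t_two_tailed_p(t, n - 1)


def unpaired_t(a, b):
    """Between-group test (Student's pooled-variance t, two-tailed)."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    sp2 = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(sp2 * (1 / na + 1 / nb))
    return t, df, t_two_tailed_p(t, df)


def one_way_anova(*groups):
    """One-way ANOVA F-test across k groups, e.g. day 1, day 7 and IA scores."""
    k, n = len(groups), sum(len(g) for g in groups)
    grand = mean([x for g in groups for x in g])
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df1, df2 = k - 1, n - k
    f = (ss_between / df1) / (ss_within / df2)
    return f, (df1, df2), betai(df2 / 2.0, df1 / 2.0, df2 / (df2 + df1 * f))


# Toy numbers only (not study data), chosen so results are easy to check by hand.
print(unpaired_t([1, 2, 3], [4, 5, 6]))                # t = -3*sqrt(1.5), df = 4
print(paired_t([1, 2, 3, 4], [2, 4, 5, 7]))            # t = 4*sqrt(1.5), df = 3
print(one_way_anova([1, 2, 3], [4, 5, 6], [7, 8, 9]))  # F = 27, df = (2, 6), p = 0.001
```

In practice a statistics package (STATA, as in the study) would be used. The sketch only makes explicit what each test compares.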

Results

Most junior residents attended the day 1 and day 7 MCQ quizzes and the two-monthly IA sessions. However, not all JRs were always able to participate. The reasons were the emergency nature of patient care in anaesthesiology (e.g., ongoing emergency surgery in the operating room), internet connectivity problems, and medical issues. Attendance ranged from 72.7% to 100% across sessions, and most assessment sessions had an attendance of 80% or more.

A total of 28 classes were held. Half ended with silent recapitulation of the PowerPoint slides following THM by the teachers involved (Study group; Group R, n = 14). The other half ended with discussion of THM only, with no recapitulation of the presented slides (Control group; Group NR, n = 14). Sessions alternated between the two conclusion techniques. All JRs were allotted to the two groups (R and NR) alternately in a 1:1 ratio, in a sequential, non-randomized, purposive manner. They were assessed with five MCQs each on day 1 and day 7 and again at IA after 2 months.

Figure 2 shows a composite box-and-whisker plot of the scores in all groups, with each group's mean, median, and interquartile range. R1, R7, and IA R are the scores obtained on day 1, day 7, and at IA in group R, while NR1, NR7, and IA NR are the corresponding scores in group NR. The Y axis shows the percentage of marks obtained.

Figure 2. Box-and-whisker plot of the group scores showing the mean, median, and interquartile range

Note: R1, R7 and IA R are scores obtained at day 1, day 7 and IA in group R; NR1, NR7 and IA NR are the scores at day 1, day 7 and during IA in group NR.

Table 1 shows the mean test scores, mean ± standard deviation, and 95% Confidence Interval (CI). Inter- and intra-group t-tests were performed on the mean scores. Day 1 mean MCQ scores were significantly higher in group R than in group NR. However, in both groups the day 7 mean MCQ scores differed significantly from the day 1 scores. In both groups, IA scores improved on day 7 scores. There was no significant difference between the groups in day 7 or IA scores. This trend can also be seen in Figure 2. There was no difference between the scores of the first and second internal assessments. Table 2 details the comparisons between the groups, using the appropriate statistical tests on the composite mean scores.

Of the 22 JRs, 21 responded to the informal feedback section of the second internal assessment. All of them (100%) liked the idea of summarizing slides, and 19 (90.5%) wanted the interim online assessment to continue. All respondents favored MCQ-based assessment over SAQs. Of these, 12 (57.1%) preferred standard MCQs and 9 (42.9%) preferred extended matching-type MCQs. Seventeen of the 21 respondents (81%) opted for testing one week after the teaching session.
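
The feedback percentages above are simple proportions of the 21 respondents. The short Python check below reproduces them from the reported counts. It adds no new data, and the labels are paraphrases of the text.

```python
RESPONDENTS = 21  # 21 of 22 JRs answered the feedback questions

# Counts as reported in the text
feedback_counts = {
    "liked silent summarization of slides": 21,
    "wanted interim online assessment to continue": 19,
    "preferred standard MCQs": 12,
    "preferred extended matching-type MCQs": 9,
    "preferred testing one week after the session": 17,
}


def percent(count, total=RESPONDENTS):
    """Share of respondents as a percentage, rounded to one decimal place."""
    return round(100 * count / total, 1)


for item, count in feedback_counts.items():
    print(f"{item}: {count}/{RESPONDENTS} = {percent(count)}%")
```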

Table 1. Scores recorded in the groups at different time intervals

Note: Independent t-test was used to compare scores between groups (R and NR). Repeated-measures ANOVA was used to analyze changes across time points within each group.

*Indicates a significant difference between R and NR groups on Day 1 scores.

$Denotes a significant difference between Day 7 and IA scores in both groups.

#Indicates a significant difference between Day 1 and IA scores in the NR group.

Abbreviations: n, number of participants; ±, standard deviation; IA, internal assessment; R, Recapitulation group; NR, Non-Recapitulation group; ANOVA, analysis of variance.

Table 2. Inter and intra-group tests and their respective values

Note: Unpaired t-test was used to compare scores between groups (R and NR) at different time points. Paired t-test was used to analyze within-group changes over time. One-way ANOVA was applied to compare mean scores across three time points within each group.

Abbreviations: R1, day 1 score in group R; NR1, day 1 score in group NR; R7, day 7 score in group R; NR7, day 7 score in group NR; IAR, internal assessment score in group R; IANR, internal assessment score in group NR; IA1, first internal assessment; IA2, second internal assessment; ANOVA, analysis of variance; P, probability value.

Discussion

This study aimed to determine whether silent recapitulation of PowerPoint slides at the end of teaching sessions enhances knowledge retention among junior residents compared with sessions without this intervention. Retention was tested with MCQ scores on day 1 and day 7 after the session and with internal assessments conducted after 2 months. Mean day 1 scores were significantly higher in group R than in group NR. However, in both groups the mean day 7 scores differed significantly from day 1 scores and were similar to each other. Scores at internal assessment (IA) improved on day 7 scores in both groups, with no significant difference between the groups at day 7 or at IA. When the variance of scores across the three testing intervals was assessed, it was significant in both group R and group NR. There was no difference between the scores of the first and second internal assessments. All respondents wanted the silent recapitulation of slides to continue in the future. In addition, 90.5% of JRs wanted the interim online assessment to continue after the project, and all favored MCQs over SAQs. As the method of post-session testing, 57.1% preferred standard MCQs and 42.9% preferred EMCQs. Overall, 81% opted for testing one week after the teaching session.

In our study, day 1 mean MCQ scores were significantly higher when the novel intervention of silent recapitulation was added to PowerPoint presentations than in sessions without it. However, this effect had tapered off by day 7, when the intergroup difference was no longer significant. Previous studies have also observed initial score gains after a new educational technique that recede over time. Examples include introducing MCQ discussions and supplementing PowerPoint presentations with detailed notes and explanatory videos [17, 18]. The day 7 decline may also have been influenced by the slides not being shared with students until the day 7 assessment was complete. Scores at IA improved compared with day 7 scores in both groups. In group R, IA scores were even comparable to its day 1 scores, while in the control group NR scores improved gradually over time. Such improvement over time at summative examinations has been shown to result from repeated testing, which enables learning [19]. The quizzes were set to reveal the correct response after answering, which served as feedback. Feedback is crucial to learning from tests [20]. Although testing improves retention even without feedback, feedback enhances the benefits of testing by correcting errors and confirming correct responses [21]. Tests should be frequent and spaced out in time to promote better retention of information [19-21]. In our study, testing on day 1, day 7, and at 2 months provided such spacing, consistent with the concept of test-enhanced learning [14]. A surprising result was the lack of difference in mean scores between the first and second IAs. The first IA involved final- and second-year JRs, whereas first-year JRs were also included in the second.
Feedback during the MCQ quizzes, repeated testing, and access to the slides before the IA probably enhanced the retention of first-year JRs, irrespective of the existing knowledge base of senior JRs. However, we also ensured that only basic topics were taught after the first-year JRs joined. The first IA was based on SAQs and conducted in the classroom, whereas the second was based on online EMCQs in Google Forms. MCQs can assess the application of knowledge just as well as SAQs. Scores on MCQs and on OEQs such as SAQs also correlate highly when both are content-oriented [12]. The similar mean scores obtained with the two techniques suggest that IA could move from the classroom to an online format. This is particularly important given faculty shortages and a general reluctance to assess [22]. Most JRs (90.5%) wanted the MCQ post-tests to continue. When asked about the preferred format, 57.1% chose standard MCQs and 42.9% chose EMCQs, and 81% opted for testing one week after the teaching session. This is consistent with a recent (2023) study in which students wanted to be involved in their own MCQ testing [23]. A systematic review of technology-based interventions observed that changing professional practice requires consideration of the organizational context and clinical workload [24]. We were rigorous in initial planning, implementing the tests, maintaining test standards, providing feedback, and conducting teaching sessions sequentially according to the study protocol. This was achieved despite a heavy workload, faculty shortage, and other hindrances. The study concluded with positive feedback favoring continuation of both silent slide recapitulation at session end and interim testing. However, this project has some limitations.
As this was a focused, short pilot project completed within a limited time, we did not assess students' perceptions of the quality of the MCQs, slides, or interactions with facilitators/moderators. Similarly, teachers'/facilitators' overall perceptions of student performance and interaction were not evaluated. The study focused on overall group performance rather than individual performance. Another limitation is that the study was conducted with a medium-sized group of advanced learners in a postgraduate department. Its applicability to large-group settings and undergraduate teaching therefore needs further evaluation. Also, 20 minutes of slide presentation may be insufficient for some topics. Content must be chosen carefully so as not to affect the progression of student learning. A significant limitation is that not all students could answer at the predetermined time, because of the emergency nature of clinical practice or personal illness or other issues. It also became apparent that, in practice, the tests were voluntary and optional. Google Forms and other online quizzes are good assessment tools, but they have drawbacks. They depend on internet connectivity, and answers may be looked up online, which can invalidate the assessment. Most importantly, their value depends on how the questions are framed. Although MCQs, EMCQs, and SAQs are valid assessment tools, the items used here need post hoc assessment of validity and reliability. The final sample size of 22 was purposive, to include all JRs. A post-study sample size calculation using day 1 MCQ scores from groups R and NR gave 9 per group (18 in total). This assumed 1:1 allocation, a two-sided significance level of 0.05 (95% confidence), and 80% power. The sample size of 22 was therefore adequate, and the 14 sessions in each group considerably exceeded the minimum required. Demographic characteristics and individual identities of JRs were not recorded, and individual performances were not recorded either. Because email addresses were not collected in Google Forms, individual responders could not be identified.
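
The post-study sample-size figure rests on the standard formula for comparing two independent means with equal allocation. The sketch below uses the normal-approximation form of that formula, n per group = 2(z₁₋α/₂ + z₁₋β)²σ²/Δ², with Python's `statistics.NormalDist`. The day 1 means and standard deviation used in the study are not reproduced in this text. The effect sizes in the example are therefore hypothetical inputs, and the sketch does not attempt to recover the reported 9 per group. A t-based calculation can give a slightly larger n than this normal approximation.

```python
import math
from statistics import NormalDist


def n_per_group(delta, sd, alpha=0.05, power=0.80):
    """Units needed per arm to compare two means (two-sided test, 1:1 allocation).

    delta: smallest difference in mean score worth detecting (hypothetical input)
    sd:    common standard deviation of the scores (hypothetical input)
    Uses the normal approximation and rounds up to a whole unit.
    """
    z = NormalDist()                     # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)            # about 0.84 for 80% power
    return math.ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)


# Hypothetical effect sizes of 1.0 and 1.5 standard deviations (not study values).
print(n_per_group(delta=10, sd=10))
print(n_per_group(delta=15, sd=10))
```

The unit counted by `n_per_group` is whatever the analysis treats as one observation. Here that would be sessions rather than individual JRs, which is why the text compares the result with the 14 sessions per group.
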
Future studies should examine the effect of silently recapitulating the presented slides at the end of the session in a larger sample of students. Such studies should use randomization, compare the technique with other educational techniques, and preferably assess retention at different time intervals (for example, two-week or one-month intervals), including the final summative examination. The technique may also be tested in small-group settings for reinforcing skills training and in undergraduate and super-specialty training. In principle, it can be applied in any session that uses a slide presentation. The only investment required is a few minutes to recapitulate the presented slides silently.

Conclusion

For junior residents in anaesthesiology, silent recapitulation of slides at the end of the session was associated with significantly better short-term (day 1) knowledge retention than in the control sessions. Scores fell by day 7 and improved by internal assessment, probably because of test-enhanced learning with feedback on correct MCQ responses. Most JRs wanted both the technique and MCQ testing on the seventh day after each teaching session to continue in the future.

Ethical considerations

This work was performed after obtaining permission from the respective IEC (reference no. IEC/2023/06, dated 16.10.23) and written informed consent from all participating JRs of the Department of Anaesthesiology, Midnapore Medical College, India.

Artificial intelligence utilization for article writing

We used the Grammarly application to correct spelling and grammar. No sentence suggestion was accepted.

Acknowledgments

I want to acknowledge the input of Prof. Apul Goel and Prof. Anita Rani of King George Medical University, Lucknow, who helped finalize the study concept and discussion. Dr. Sagnik Datta, Senior Resident in the Department of Anaesthesiology at Midnapore Medical College, helped with statistics and graphical representation. Dr. Debasish Bhar, Associate Professor, framed and collected some Google quizzes. All other faculty and junior residents of the Department of Anaesthesiology at Midnapore Medical College participated wholeheartedly in the study.

Conflict of interest statement

This study was my Advanced Course in Medical Education project. In February 2024, it was presented as a poster at KGMU Lucknow, India, in the presence of assessors appointed by the NMC.

Author contributions

Conception, implementation, collecting, gathering supportive data, data analysis, and final presentation.

Funding

No funds, donations, or external help were made available for this study.

Data availability statement

All data were collected from Google Forms quizzes, recorded in an Excel sheet, and are available on request. The SAQ answer scripts from the first internal assessment are kept in hard copy in the Department.

Article Type: Original Research

Subject:

Medical Education

Received: 2024/03/30 | Accepted: 2025/01/27 | Published: 2025/01/27

References

1. Harolds JA. Tips for giving a memorable presentation, Part IV: Using and composing PowerPoint slides. Clinical Nuclear Medicine. 2012;37(10):977-80. [DOI]

2. Naegle KM. Ten simple rules for effective presentation slides. PLoS Computational Biology. 2021;17(12):e1009554. [DOI]

3. Blome C, Sondermann H, Augustin M. Accepted standards on how to give a medical research presentation: a systematic review of expert opinion papers. GMS Journal for Medical Education. 2017;34(1):Doc11. [DOI]

4. Augustin M. How to learn effectively in medical school: test yourself, learn actively, and repeat in intervals. The Yale Journal of Biology and Medicine. 2014;87:207-12.

5. Greving S, Lenhard W, Richter T. The testing effect in university teaching: using multiple-choice testing to promote retention of highly retrievable information. Teaching of Psychology. 2023;50(4):332-41. [DOI]

6. Sharma S, Sharma V, Sharma M, Awasthi B, Chaudhary S. Formative assessment in postgraduate medical education - perceptions of students and teachers. International Journal of Applied and Basic Medical Research. 2015;5(Suppl 1):S66-70. [DOI]

7. Lautrette A, Boyer A, Gruson D, et al. Impact of take-home messages written into slide presentations delivered during lectures on the retention of messages and the residents’ knowledge: a randomized controlled study. BMC Medical Education. 2020;20:180. [DOI]

8. Brysbaert M. How many words do we read per minute? A review and meta-analysis of reading rate. Journal of Memory and Language. 2019;109:104047. [DOI]

9. Yang BW, Razo J, Persky AM. Using testing as a learning tool. American Journal of Pharmaceutical Education. 2019;83(9):7324. [DOI]

10. Vegi VA, Sudhakar PV, Bhimarasetty DM, et al. Multiple-choice questions in assessment: Perceptions of medical students from low-resource setting. Journal of Education and Health Promotion. 2022;11(1):103. [DOI]

11. Bachu VS, Mahjoub H, Holler AE, et al. Assessing COVID-19 health information on Google using the quality evaluation scoring tool (QUEST): cross-sectional and readability analysis. JMIR Formative Research. 2022;6(2):e32443. [DOI]

12. Liu Q, Wald N, Daskon C, Harland T. Multiple-choice questions (MCQs) for higher-order cognition: perspectives of university teachers. Innovations in Education and Teaching International. 2023;61(4):802-14. [DOI]

13. Yeh DD, Park YS. Improving learning efficiency of factual knowledge in medical education. Journal of Surgical Education. 2015;72(5):882-9. [DOI]

14. Frey A, Leutritz T, Backhaus J, Hörnlein A, König S. Item format statistics and readability of extended matching questions as an effective tool to assess medical students. Scientific Reports. 2022;12(1):20982. [DOI]

15. National Medical Commission of India. Competency Based Medical Education (CBME) Curriculum Guidelines, 2024. Available from: [DOI]

16. Wang M, Jiang L, Luo H. Dyads or quads? Impact of group size and learning context on collaborative learning. Frontiers in Psychology. 2023;14:1168208. [DOI]

17. Nair GG, Feroze M. Effectiveness of multiple-choice questions (MCQS) discussion as a learning enhancer in conventional lecture class of undergraduate medical students. Medical Journal of Dr. DY Patil University. 2023 Jun 2. [DOI]

18. Abdulla MH, O'Sullivan E. The impact of supplementing powerpoint with detailed notes and explanatory videos on student attendance and performance in a physiology module in medicine. Medical Science Educator. 2019;29(4):959-68. [DOI]

19. Binks S. Testing enhances learning: a review of the literature. Journal of Professional Nursing. 2018;34(3):205-10. [DOI]

20. Roediger HL 3rd, Butler AC. The critical role of retrieval practice in long-term retention. Trends in Cognitive Sciences. 2011;15(1):20-7. [DOI]

21. Ryan A, Judd T, Swanson D, Larsen DP, Elliott S, Tzanetos K, Kulasegaram K. Beyond right or wrong: more effective feedback for formative multiple-choice tests. Perspectives on Medical Education. 2020;9:307-13. [DOI]

22. Guzzardo MT, Khosla N, Adams AL, et al. The ones that care make all the difference: perspectives on student-faculty relationships. Innovative Higher Education. 2021;46(1):41-58. [DOI]

23. Badali S, Rawson KA, Dunlosky J. How do students regulate their use of multiple choice practice tests? Educational Psychology Review. 2023;35:43. [DOI]

24. Keyworth C, Hart J, Armitage CJ, Tully MP. What maximizes the effectiveness and implementation of technology-based interventions to support healthcare professional practice? A systematic literature review. BMC Medical Informatics and Decision Making. 2018;18:1-21. [DOI]

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.